The AI Recruitment Revolution

It’s 8:15 a.m. A recruiter opens her dashboard. Overnight, a swarm of digital agents has already screened a large batch of applications and prepared interviews before she even logs in. Her coffee is still hot, yet half her day’s work is done.

That small scene captures the quiet upheaval inside talent acquisition. AI has not just made recruiting faster; it has changed what recruiting feels like. Instead of sifting through noise, humans now orchestrate decisions from a cockpit of algorithms. It is both empowering and unnerving.

This matters because the line between efficiency and empathy is thinning. Every automated judgment, every resume scored, and every candidate nudged by a chatbot reshapes how people experience opportunity.

This article walks through that transformation: how AI entered the hiring room, what it is actually doing today, where it fails, and what it might mean for recruiters, candidates, and the labor market that binds them.

A Historical Lens: How AI Entered Recruitment

Before the bots, there were spreadsheets and standardized tests. During WWI, the Army Alpha and Beta exams sorted soldiers by aptitude, our first mass experiment in algorithmic selection.

By the 1990s, the flood of digital resumes pushed HR teams to adopt Applicant Tracking Systems (ATS), rule-based filters that searched for keywords. They promised order but often punished nuance; a missed synonym could bury a perfect candidate.

The 2000s brought machine learning and NLP. Systems began reading for context, not just words, recognizing that “project lead” and “team manager” might describe similar skills.

In the 2010s, chatbots and video-interview analytics appeared. They conducted first-round screens and smiled through latency. Vendors like HireVue and Mya made AI seem personable, if occasionally uncanny.

Now, in the 2020s, agentic AI is arriving. These are autonomous systems that plan, reason, and act with minimal human oversight. They source, schedule, and follow up on their own. Recruiters have become air-traffic controllers, not just pilots.

The Mechanics of AI Recruiting

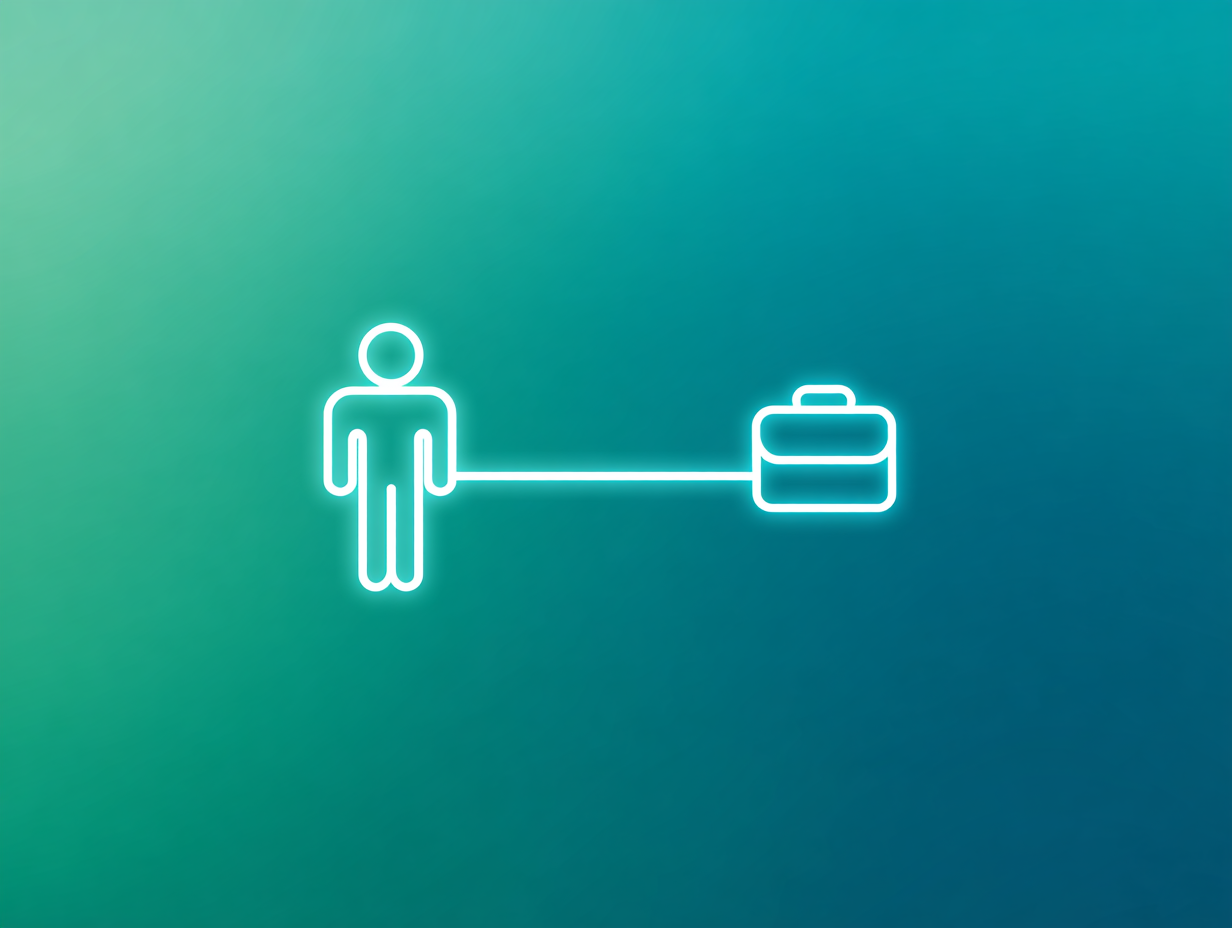

To understand what it is like to recruit with AI, follow the pipeline. If you are exploring adoption inside your own organization, here is a complete guide on how to implement AI in the hiring process.

- Sourcing

AI spiders scan millions of profiles, forums, and portfolios. They predict which candidates might be “open to work” even before they update LinkedIn. The recruiter’s role shifts from hunting to curating.

- Screening and Matching

Machine-learning models score candidates on skill fit, inferred competency, and potential performance. Some systems even simulate project scenarios, allowing AI to evaluate how someone thinks rather than what they have done.

- Assessment and Interviewing

Chatbots conduct pre-screens using context-aware questions. Video platforms analyze speech patterns and problem-solving approaches. Newer systems use LLMs to generate custom questions from job descriptions and candidate histories.

- Onboarding and Retention

Once a hire is made, AI guides orientation plans, training modules, and early feedback loops to predict attrition.

- Agentic Autonomy

Agentic AI coordinates across systems. One agent sources talent, another validates credentials, and a third schedules interviews, all without a human prompt.

Recruiters describe this shift as liberating and disorienting at the same time. There are fewer manual tasks, but also less intuitive grip on how a candidate moved from Stage A to Stage C.

Candidate Experience: Agency, Trust and Psychology

For candidates, AI recruiting often feels like sending resumes into a black hole. They rarely know why they were rejected or what to improve. Research shows that algorithmic rejection can feel more depersonalizing than a human “no.”

Transparency matters. When systems explain their decisions (“Your application was screened out because required Python experience was below threshold X”), trust rises dramatically.
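As a rough illustration of that kind of explanation, here is a minimal sketch of a rule-based screen that returns its reasons along with its decision. The `Requirement` class, the `screen` function, and the idea of expressing thresholds as years of experience are all assumptions made for this example; real screening systems are usually far more complex and may not be rule-based at all.

```python
from dataclasses import dataclass


@dataclass
class Requirement:
    """A hypothetical minimum-experience rule for one skill."""
    skill: str
    min_years: float


def screen(candidate_years, requirements):
    """Return (passed, reasons) so that a rejection always carries an explanation.

    candidate_years: dict mapping skill name to years of experience.
    requirements: list of Requirement objects.
    """
    reasons = []
    for req in requirements:
        have = candidate_years.get(req.skill, 0.0)
        if have < req.min_years:
            reasons.append(
                f"{req.skill} experience ({have:g} years) is below "
                f"the required {req.min_years:g} years"
            )
    return (not reasons, reasons)


# Illustrative data only.
passed, reasons = screen(
    {"Python": 1.5, "SQL": 4},
    [Requirement("Python", 3), Requirement("SQL", 2)],
)
for reason in reasons:
    print("Screened out because:", reason)
```

The design point is that the decision and its explanation come from the same check, so the message a candidate sees cannot drift away from the rule that was actually applied.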

Agency matters too. The EU AI Act and New York City’s Local Law 144 now require disclosure and opt-outs. Still, most platforms remain opaque.

Candidate reactions to AI vary across regions, although research comparing cultural differences in AI-driven hiring is still limited. The same algorithm can evoke different emotions across geographies.

Recruiting with AI forces companies to design not only for accuracy but also for dignity. A candidate who understands the process, even if rejected, is more likely to trust the brand and reapply.

Bias, Fairness and Hidden Signals

No matter how neutral its math, AI learns from us, and our biases are data. That means even well-designed systems can quietly absorb and amplify human patterns. Amazon’s experimental tool once downgraded resumes containing “women’s.” Elsewhere, an LLM rated identical Indian transcripts lower than UK ones for “hirability.”

Bias seeps through proxy signals such as font choice, grammar, or university names, which often mirror socio-economic status. Even anonymizing names does not erase linguistic patterns that reveal region or class.

Fairness metrics attempt to quantify justice: statistical parity, equalized odds, predictive parity. Yet optimizing for one often undermines another. Accuracy and fairness are in constant trade-off.
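To make the three metrics concrete, the sketch below computes the per-group quantities each one compares: selection rate (statistical parity), true and false positive rates (equalized odds), and precision (predictive parity). The function name `group_rates`, the record layout, and the toy data are assumptions for illustration only. The data is invented so that both groups are selected at the same rate while the share of qualified candidates differs, which is one common way the metrics come apart.

```python
from collections import defaultdict


def group_rates(records):
    """Compute per-group rates from (group, y_true, y_pred) records with 0/1 labels.

    y_true = 1 means the candidate was actually qualified,
    y_pred = 1 means the model advanced the candidate.
    """
    counts = defaultdict(lambda: {"n": 0, "pred_pos": 0, "pos": 0,
                                  "neg": 0, "tp": 0, "fp": 0})
    for group, y_true, y_pred in records:
        c = counts[group]
        c["n"] += 1
        c["pred_pos"] += y_pred
        if y_true == 1:
            c["pos"] += 1
            c["tp"] += y_pred
        else:
            c["neg"] += 1
            c["fp"] += y_pred

    rates = {}
    for group, c in counts.items():
        rates[group] = {
            # Statistical parity compares this across groups.
            "selection_rate": c["pred_pos"] / c["n"],
            # Equalized odds compares both TPR and FPR across groups.
            "tpr": c["tp"] / c["pos"] if c["pos"] else None,
            "fpr": c["fp"] / c["neg"] if c["neg"] else None,
            # Predictive parity compares precision across groups.
            "precision": c["tp"] / c["pred_pos"] if c["pred_pos"] else None,
        }
    return rates


# Invented toy data: group A has 4 of 8 qualified, group B has 2 of 8.
toy = (
    [("A", 1, 1)] * 3 + [("A", 1, 0)] + [("A", 0, 1)] + [("A", 0, 0)] * 3
    + [("B", 1, 1)] * 2 + [("B", 0, 1)] * 2 + [("B", 0, 0)] * 4
)
for group, r in sorted(group_rates(toy).items()):
    print(group, r)
```

In this toy data both groups have a selection rate of 0.5, so statistical parity holds, yet their true positive rates, false positive rates, and precision all differ. Equalizing those would require changing who is selected, which would in turn break the equal selection rates.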

Temporal drift adds risk. Models trained on “successful” hires from 2018 may still prefer Ivy Leaguers when today’s top performers come from bootcamps. Without continuous retraining, AI turns into a museum of old biases.

The lesson is simple: fair AI is not a destination; it is a maintenance plan.

Human + AI Collaboration: Hybrid Models

The best recruiting systems treat AI as a colleague rather than a replacement. AI handles volume and pattern; humans handle context and intuition.

Hybrid models are proving effective across industries. For example, companies using AI pre-screening typically review the top 20 to 30 percent of candidates while spot-checking lower-ranked applications to catch overlooked talent. Studies show these approaches can increase diversity hiring by 5 to 15 percent while reducing time-to-hire by 40 to 60 percent. These results have been observed at firms such as Unilever and Deloitte using human-in-the-loop systems.
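The review pattern described above can be sketched as a small triage step: humans review the top-ranked slice and also a random sample drawn from everyone below the cutoff. The function name `triage`, the default fractions, and the fixed random seed are assumptions for illustration, not a description of any particular vendor’s workflow.

```python
import random


def triage(scored, top_fraction=0.25, spot_check_fraction=0.10, seed=0):
    """Build a human review queue from AI-scored candidates.

    scored: list of (candidate_id, score) pairs, higher score = stronger match.
    Returns (top, spot_checks): the top-ranked slice, plus a random sample
    of lower-ranked candidates for a human to double-check.
    """
    ranked = sorted(scored, key=lambda item: item[1], reverse=True)
    cutoff = max(1, round(len(ranked) * top_fraction))
    top, rest = ranked[:cutoff], ranked[cutoff:]

    # Sample from below the cutoff so overlooked candidates can still surface.
    k = min(len(rest), max(1, round(len(rest) * spot_check_fraction))) if rest else 0
    rng = random.Random(seed)  # fixed seed keeps the sample reproducible for audits
    spot_checks = rng.sample(rest, k)
    return top, spot_checks


# Illustrative data: 40 candidates with made-up scores.
candidates = [(f"c{i}", i / 40) for i in range(40)]
top, spot_checks = triage(candidates)
print(len(top), "top-ranked candidates for full review")
print(len(spot_checks), "lower-ranked candidates for spot checks")
```

Sampling at random, rather than taking the candidates just below the cutoff, is a deliberate choice in this sketch: it gives reviewers a view of the whole rejected pool, which is where a systematic scoring error would otherwise stay hidden.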

Human-in-the-loop designs also build trust internally. When recruiters see AI as an advisor rather than an arbiter, they feel ownership over decisions again.

Still, hybrids are not bias proof. Studies show that humans often follow AI recommendations even when they disagree, anchoring on the machine’s confidence. Oversight requires training in critical scepticism, not blind faith.

Market, Economic and Structural Impacts

AI recruiting does not just change how we hire; it reshapes the market itself.

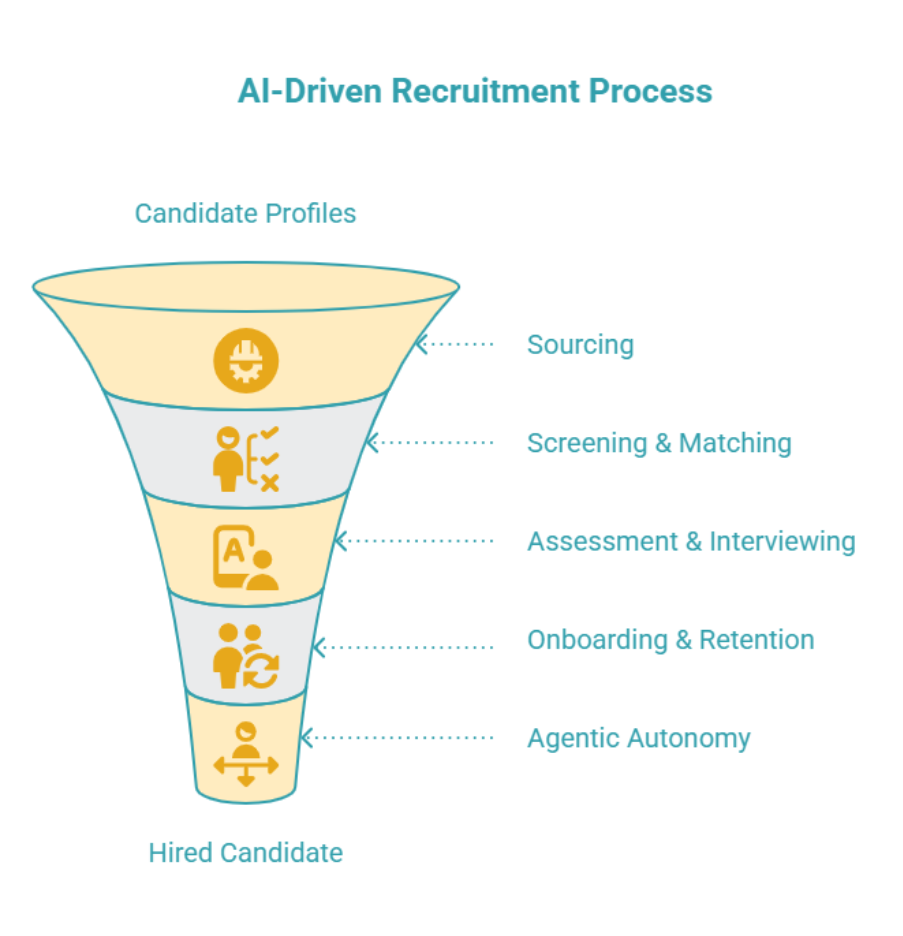

Large vendors such as LinkedIn, Workday, and HireVue control global pipelines. Their algorithms quietly define what “qualified” means. Smaller firms struggle to compete without access to the same data and compute power.

Candidates face hidden costs as well. They purchase resume-optimization services, AI-friendly CV templates, and paid mock interviews. Search equity is becoming a class privilege.

Labor-market effects ripple outward. When algorithms reward linear careers, people sanitize their paths. Diversity of background may shrink while conformity grows.

Regulation is catching up. EEOC guidelines, the EU AI Act, and state-level audit laws are early signs of a compliance era in which trust depends on transparency labels and third-party audits.

Security, Fraud and Trust

As AI becomes a gatekeeper, new forms of fraud emerge. Synthetic resumes, voice deepfakes, and AI-generated interview videos have been reported in multiple markets. One Indian exam impersonator was caught by facial-recognition AI, which shows that automated systems can police certain risks.

Yet each safeguard adds surveillance. Facial scans and biometric verification raise privacy questions across borders. Who owns that data? How long is it stored?

Trust depends on explainability. Recruiters need audit trails. Candidates need disclosure. Transparency is no longer a luxury; it is a compliance requirement and a brand asset.

Environmental and Infrastructure Considerations

AI is energy and resource intensive. Global data centres powering AI systems, including recruitment tools, are projected to withdraw significant volumes of water annually by 2027. Recruitment-specific AI represents only a fraction of this total, but every screening and matching algorithm carries an environmental footprint.

There is a counterargument. Virtual interviews reduce travel emissions, and automated matching reduces idle hours and paper waste. The net balance depends on how sustainable the underlying cloud infrastructure is.

Sustainable AI in HR will require carbon reporting, model efficiency benchmarks, and vendor disclosure of compute intensity. Efficiency without accountability becomes outsourced pollution.

Longitudinal Perspectives

We know AI hires faster, but we do not yet know if it hires better. Few studies track AI-recruited employees five or ten years out. Do they perform longer? Advance faster? Stay loyal? No one can say.

Without long-term data, organizations cannot prove ROI or fairness. Continuous feedback loops, feeding real performance outcomes back into the model, represent the next frontier of decision intelligence.

Imagine a future in which AI predicts not only who fits a role today but also who will thrive five years from now. That requires ethics, data governance, and patience, which is a rare trio in recruiting.

The Future: Generative and Agentic AI in Recruitment

Generative AI is already writing job descriptions, summarizing interviews, and drafting offer letters. The next phase is agentic AI, a class of systems capable of orchestrating hiring tasks with limited prompts.

Emerging AI systems can automate portions of the hiring workflow, such as sourcing, outreach, and scheduling, but no end-to-end autonomous hiring pipeline exists at scale today.

This raises questions of autonomy and accountability. Who is responsible if the AI makes a biased choice or violates labor law: the vendor, the employer, or the algorithm itself?

Done well, agentic AI could free recruiters to focus on relationship-building and workforce strategy rather than administration. Done poorly, it could erode trust and turn hiring into a fully algorithmic transaction.

Caveats, Limitations and Open Questions

Despite the hype, AI recruiting research remains young. Many studies come from vendors with vested interests or short timeframes. Publication bias favours success stories over failures.

Ethical norms diverge by region. Fairness in one context may appear as discrimination in another. And as candidates learn to “game the system,” the data shifts, which forces constant model refresh.

Perhaps the biggest gap is longitudinal. We still do not know how AI decisions shape careers or markets over decades. Until we measure that, every claim about AI’s objectivity remains provisional.

Conclusion and Call to Reflection

Recruiting with AI is not only a technical shift; it is a psychological and ethical recalibration. It changes how we see talent, how we assign worth, and how we trust judgment made by machines on our behalf.

For recruiters, the job is no longer to filter applications but to filter assumptions and to ask what their tools optimize for and whose future those optimizations serve.

For candidates, the challenge is agency, demanding visibility, fairness, and feedback in systems that increasingly decide their fate.

For organizations, the imperative is humility. AI is a mirror, not a miracle. It reflects our values at scale.

If hiring defines the kind of world we build, then AI recruiting becomes the blueprint of that world. The question is not whether machines can choose people well; it is whether we, as people, can choose how the machines decide.