Why this matters now

If you’re responsible for talent acquisition in 2025, you’re steering between speed and fairness with a headwind. Budgets are under pressure, requisitions keep arriving, and candidate pipelines now span continents. Every week feels like swimming against a riptide of résumés, assessments, and follow-ups.

AI looks like a lifeboat. It can parse thousands of résumés, score video interviews, generate summaries, and route offers while your team sleeps. It promises objectivity and efficiency. But the same technology that buys you time can quietly cost you trust. When models train on messy history, they don’t predict the future; they replicate the past. That’s how a supposedly neutral filter ends up scaling yesterday’s inequities at algorithmic speed.

AI bias in hiring isn’t a glitch. It’s systemic. It lives in the data, in the design choices of developers, in the way humans interpret machine output, and in the shortcuts organizations take under pressure. And now it sits under a harsh regulatory spotlight. New York City’s Local Law 144 mandates annual bias audits. California has adopted regulations under its privacy framework. The EU AI Act classifies employment AI as “high-risk.” Class actions such as Mobley v. Workday signal that liability is real.

This article is written for enterprise HR leaders who need both velocity and values. We’ll trace how bias slips into systems, show you how to recognize it, and offer a playbook for preventing discrimination. Think of it as kitchen wisdom for a modern engine room: practical steps, clean mise en place, and the calm cadence of someone who has burned dishes and learned what not to do.


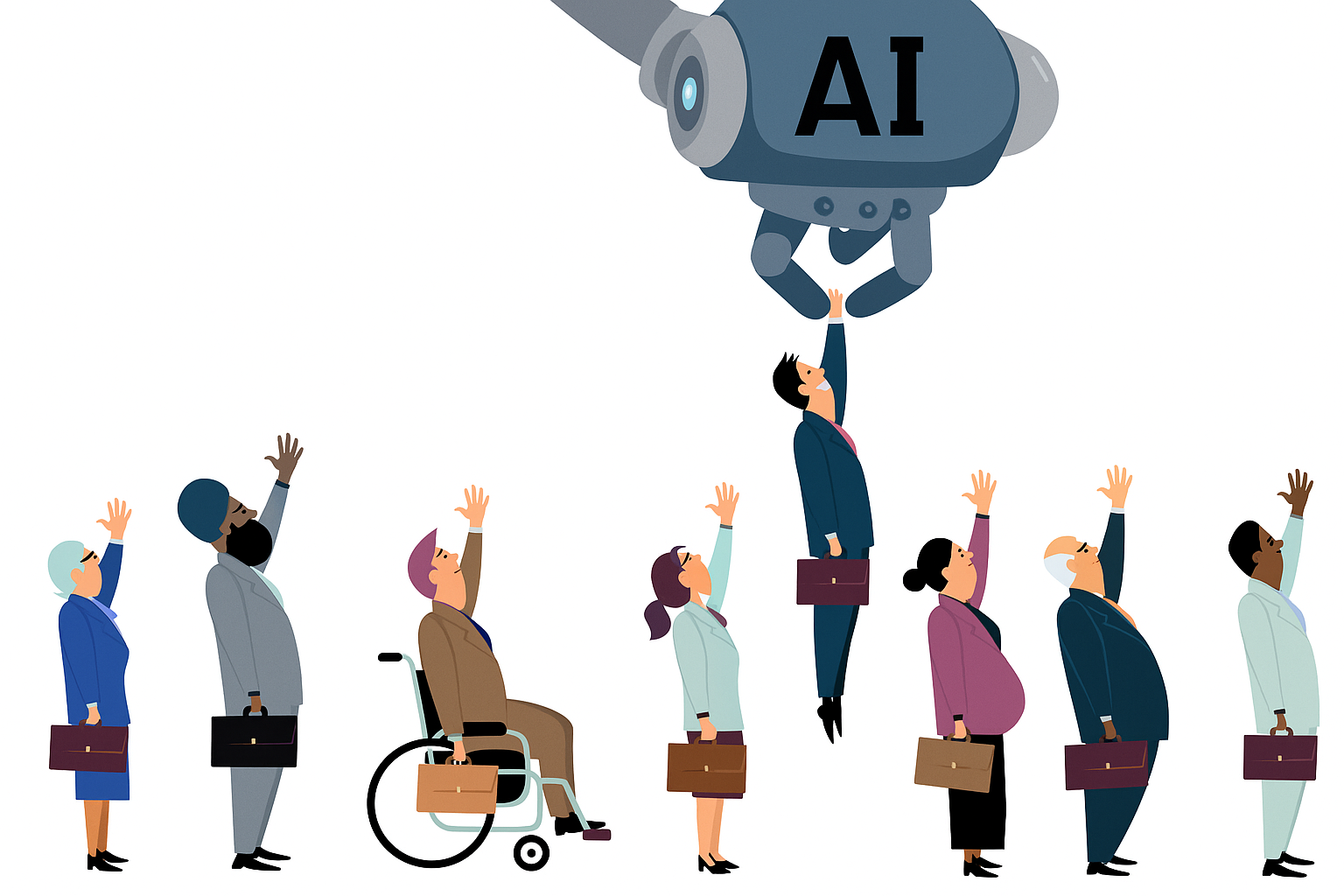

The foundations: the law that still governs the machine

The legal baseline remains unchanged. Using AI in hiring doesn’t exempt organizations from laws that existed long before algorithms.

- Civil Rights Act of 1964 (Title VII): Landmark U.S. law that prohibits discrimination in employment based on race, color, religion, sex, or national origin. It set the baseline for all modern hiring standards.

- Griggs v. Duke Power Co. (1971): Supreme Court case that established the doctrine of disparate impact. Even neutral-looking practices are unlawful if they disproportionately harm protected groups and lack clear job relevance.

- Uniform Guidelines on Employee Selection Procedures (UGESP, 1978): Federal guidance that standardized validation practices and introduced the “four-fifths rule.” If the selection rate for any group is less than 80% of the rate for the group with the highest selection rate, federal enforcement agencies will generally regard that as evidence of adverse impact.

AI doesn’t dissolve these obligations; it inherits them. If an automated screener interviews women at 60% of the rate it interviews men, the impact ratio is 0.60, well below the 0.80 threshold, and the math is the same as it was in 1978. What’s new is scale and opacity. Neural networks trained on millions of examples bury their logic in hidden layers. You can’t “eyeball” fairness the way you might with a pencil-and-paper test.
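The arithmetic behind the four-fifths rule is simple enough to sketch. The Python below is a minimal illustration, not a compliance tool: `GroupOutcome`, `impact_ratios`, and `flag_adverse_impact` are hypothetical names, and the applicant counts are invented to reproduce the 60% example above.

```python
from dataclasses import dataclass


@dataclass
class GroupOutcome:
    """Counts for one group at one decision point (hypothetical structure)."""
    applicants: int
    selected: int

    @property
    def selection_rate(self) -> float:
        return self.selected / self.applicants if self.applicants else 0.0


def impact_ratios(outcomes: dict[str, GroupOutcome]) -> dict[str, float]:
    """Divide each group's selection rate by the highest group's rate."""
    top_rate = max(o.selection_rate for o in outcomes.values())
    if top_rate == 0:
        raise ValueError("No group had any selections; ratios are undefined.")
    return {group: o.selection_rate / top_rate for group, o in outcomes.items()}


def flag_adverse_impact(outcomes: dict[str, GroupOutcome],
                        threshold: float = 0.8) -> dict[str, bool]:
    """True for any group whose impact ratio falls below the threshold."""
    return {group: ratio < threshold for group, ratio in impact_ratios(outcomes).items()}


# Illustrative counts: 30% of men and 18% of women advance to interview.
funnel = {"men": GroupOutcome(500, 150), "women": GroupOutcome(500, 90)}
print({g: round(r, 2) for g, r in impact_ratios(funnel).items()})  # {'men': 1.0, 'women': 0.6}
print(flag_adverse_impact(funnel))  # {'men': False, 'women': True}
```

A ratio below 0.80 is a trigger for investigation, not a legal finding on its own. With small groups, a single hire can move the ratio across the line, so pair it with judgment and, where numbers allow, a significance test.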

The modern rules: the patchwork you must navigate

Fast-forward to today, and compliance comes from multiple directions.

- Illinois AI Video Interview Act (2019, amended 2022): Requires notice, consent, and data handling rules when AI analyzes video interviews.

- Maryland HB1202 (2020): Prohibits use of facial recognition during job interviews without explicit applicant consent.

- NYC Local Law 144 (2023): Requires annual independent bias audits for automated employment decision tools, publication of results, and candidate notices.

- California ADMT Regulations (2025): Expands transparency and opt-out rights for candidates under the CPRA.

- EU Artificial Intelligence Act (Regulation (EU) 2024/1689): Classifies employment AI as “high-risk,” mandating risk management, transparency, and human oversight.

- EEOC v. iTutorGroup (2023): Settlement in a case where software automatically rejected older applicants, a clear signal that regulators will pursue discriminatory automated screening.

- Mobley v. Workday (2025): A pending class action alleging systemic age, race, and disability discrimination by hiring algorithms.

The message is clear: compliance is no longer optional. Bias audits, candidate consent, and transparent reporting are now baseline expectations.

Where bias comes from: a practical map

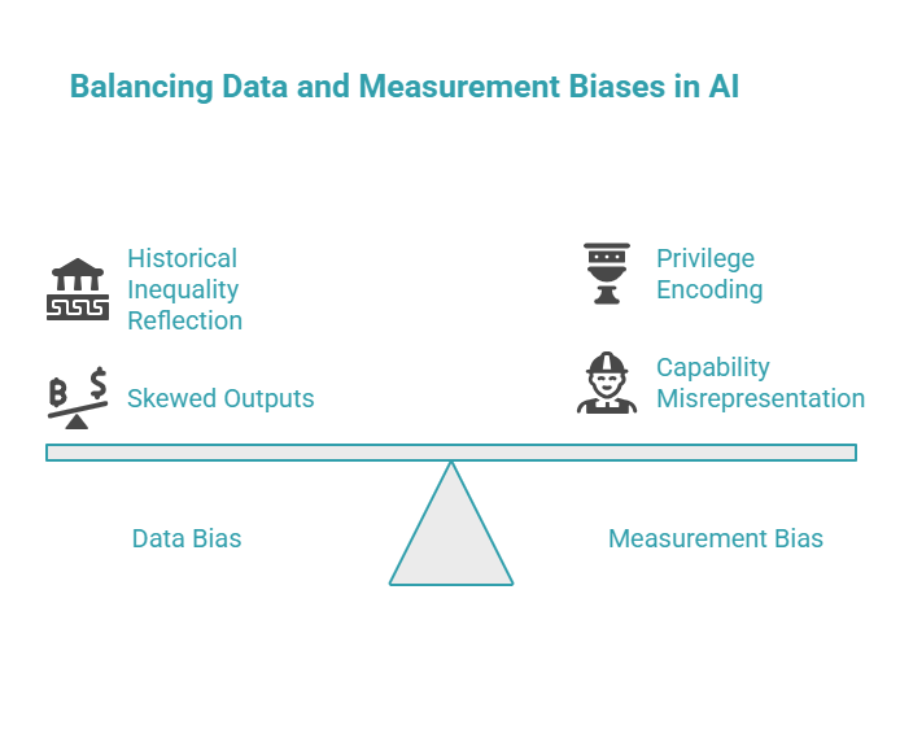

Bias isn’t a single bug; it’s a family of failure modes. AI bias audit processes reveal how data, metrics, and organizational culture intertwine.

- Data bias: Training data reflects history, and history reflects unequal opportunity. Résumé corpora may underrepresent women in engineering. Interview transcripts may over-index on one accent. Train on skewed inputs, and you’ll get skewed outputs.

- Measurement bias: You optimize what you measure. If “success” means “fast promotion,” you might encode privilege rather than capability.

- Instrumentation bias: Microphones that miss syllables, video codecs that blur faces, or automatic speech recognition (ASR) systems that stumble on dialects all feed distorted signals to downstream models.

- Organizational bias: Humans over-trust confident outputs. A score of 0.81 versus 0.79 looks definitive on a dashboard, even when both fall inside the same confidence interval.

Naming these sources is the first step. Once you see them, you can plan countermeasures.
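The organizational-bias point about a 0.81 versus a 0.79 can be made concrete with a classical test theory check. This sketch assumes the tool’s scores have a known standard deviation and test-retest reliability (both values below are hypothetical); it computes the standard error of measurement and asks whether two scores differ by more than their combined error.

```python
import math


def standard_error_of_measurement(score_sd: float, reliability: float) -> float:
    """Classical test theory: SEM = SD * sqrt(1 - reliability)."""
    if not 0.0 <= reliability <= 1.0:
        raise ValueError("reliability must be between 0 and 1")
    return score_sd * math.sqrt(1.0 - reliability)


def scores_distinguishable(a: float, b: float, sem: float, z: float = 1.96) -> bool:
    """True only if |a - b| exceeds z times the standard error of the difference.

    Assumes both scores carry the same, independent measurement error,
    so the standard error of the difference is sqrt(2) * SEM.
    """
    sed = math.sqrt(2.0) * sem
    return abs(a - b) > z * sed


# Hypothetical tool: score SD of 0.10 and test-retest reliability of 0.85.
sem = standard_error_of_measurement(score_sd=0.10, reliability=0.85)
print(round(sem, 3))                            # 0.039
print(scores_distinguishable(0.81, 0.79, sem))  # False
print(scores_distinguishable(0.81, 0.60, sem))  # True
```

Under these assumed values the two scores are statistically indistinguishable, so ranking one candidate above the other on that gap alone treats noise as signal.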

Evidence that should change your mind

Research has repeatedly documented bias in the systems used for hiring:

- PNAS study (2020): Automated speech recognition systems produced nearly twice as many errors for Black speakers as for white speakers.

- Bias in Bios (2019): Occupation prediction models reproduced gender stereotypes, downranking women in technical fields even when explicit gender markers were removed.

- University of Melbourne (2025): AI interview tools systematically misinterpreted speech from non-native English speakers and candidates with disabilities.

- University of Washington (2024): Resume-screening algorithms amplified societal stereotypes based on names and formatting.

- FAIRE Benchmark (2025): A standardized test found significant racial and gender disparities in candidate scoring across LLM-based screeners.

The lesson is simple: without intentional design, AI encodes the inequities of the past into the pipelines of the future.

Recognizing bias: audits that actually help

Three techniques should be standard in every enterprise TA toolkit:

- Adverse Impact Analysis (Four-Fifths Rule): Compute selection rates for each group and compare them to the highest group. Ratios under 0.80 warrant investigation.

- Structured Bias Audits: Use methodologies like those mandated in NYC, requiring impact ratios and documentation of features, oversight, and results.

- Counterfactual Tests: Run “what-if” experiments—swap names, standardize formatting, or alter accents while qualifications stay constant. If results swing, bias is embedded.

Think of these as MRIs for your hiring funnel—they expose what isn’t visible on the surface.
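A counterfactual test can be sketched as a harness that holds a résumé constant and swaps only the name. Everything here is hypothetical: `toy_score` stands in for whatever screener you are auditing and deliberately leaks a non-job-related signal (name length) so the harness has something to catch, and `variant_a` and `variant_b` are placeholders for the name sets a real audit would draw from research on demographically associated names.

```python
from statistics import mean
from typing import Callable

TEMPLATE = "Name: {name}\nSkills: Python, SQL\nExperience: 5 years of data analysis"
TOLERANCE = 0.02  # hypothetical maximum acceptable gap between variant means


def toy_score(resume: str) -> float:
    """Hypothetical, deliberately flawed screener used only to exercise the harness."""
    name = resume.splitlines()[0].removeprefix("Name: ")
    skills = 0.6 if "Python" in resume else 0.3
    return skills + 0.01 * len(name)  # flaw: name length should not affect the score


def counterfactual_means(template: str, variants: dict[str, list[str]],
                         score: Callable[[str], float]) -> dict[str, float]:
    """Score otherwise-identical résumés that differ only in the name field."""
    return {group: mean(score(template.format(name=n)) for n in names)
            for group, names in variants.items()}


variants = {
    "variant_a": ["Ann Fox", "Bo Hart"],
    "variant_b": ["Alexandra Fitzgerald", "Christopher Montgomery"],
}
means = counterfactual_means(TEMPLATE, variants, toy_score)
gap = max(means.values()) - min(means.values())
print(round(gap, 2))    # 0.14
print(gap > TOLERANCE)  # True: scores swing on the name alone
```

In a real audit you would run many templates and many names per group, and set the tolerance with your legal and data science teams rather than borrowing the placeholder used here.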

Agentic AI in recruiting: power with guardrails

We’re entering the age of agentic AI in recruiting—systems that don’t just execute tasks but orchestrate entire workflows. A hiring agent can source candidates, screen résumés, schedule interviews, and send reminders without being prompted.

Risks: Biases can scale faster. Candidates may distrust fully automated systems (“algorithm aversion”) if they feel humans have been removed entirely.

Opportunities: Properly designed, agentic AI can reduce bias by widening candidate pools, ensuring late applicants aren’t ignored, and freeing recruiters to focus on human-centric work.

The right model is “autonomy with oversight.” Tools like GoodTime and Phenom emphasize transparency, configurability, and human veto power.


Generative AI and the résumé frontier

Large language models now evaluate résumés, extract skills, and even generate hiring recommendations. But they introduce new risks:

- Resume bias: A 2024 audit found GPT-3.5 assigned higher scores to résumés with “white-sounding” names.

- Callback disparities: A 2025 study of 332,000 job postings showed male candidates receiving more callbacks for high-wage roles despite identical credentials.

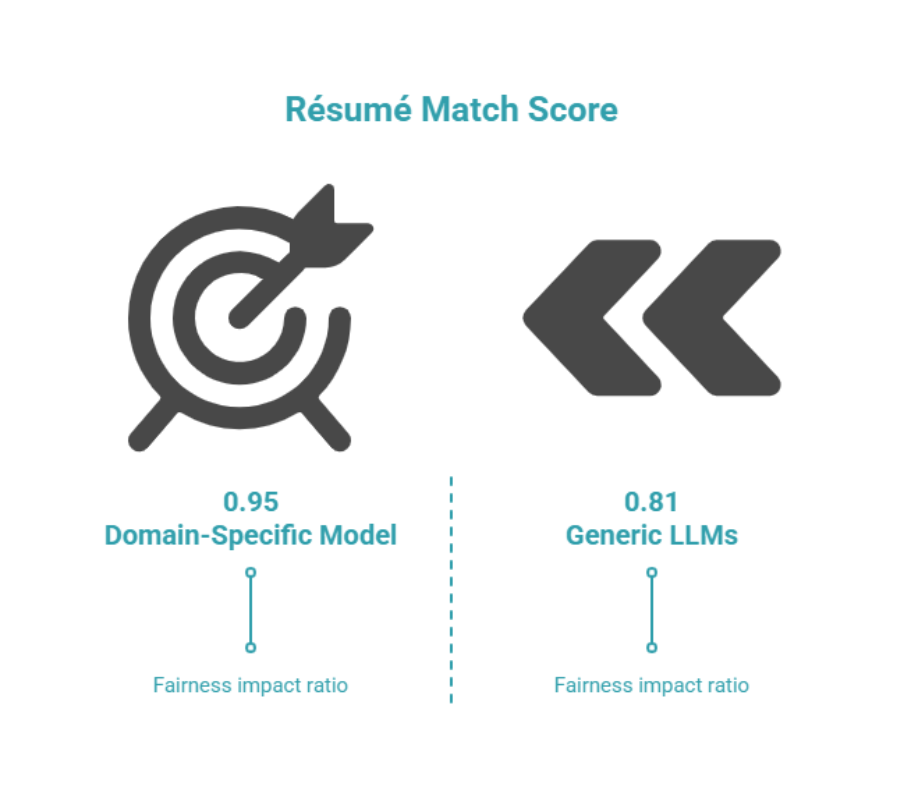

- Domain-specific vs generic models: A “Match Score” model achieved fairness impact ratios above 0.95, while generic LLMs fell below 0.81.

- Recursive bias: Emerging studies show AI systems starting to favor AI-generated résumés, sidelining human applicants.

The lesson: demand specialized models trained for fairness. Generic LLMs are not enterprise-grade hiring tools.


A practical playbook to prevent discrimination

Twelve steps to operationalize fairness:

- Governance spine: Cross-functional councils with TA, Legal, DEI, and Data Science.

- Define validity: Ensure every automated signal links to job-related constructs.

- Inventory data: Document sources, transformations, and known gaps.

- Monitor impact: Apply adverse impact analysis at every funnel stage.

- Prefer interpretable models: Use explainability techniques and ban demographic proxies.

- Counterfactual testing: Swap demographic markers to test robustness.

- Benchmarks & audits: Adopt FAIRE and commission independent reviews.

- Human in the loop: Require human judgment for critical decisions.

- Instrument agentic systems: Log inputs and outputs, and provide rollback.

- Communicate with candidates: Provide notices and opt-out paths.

- Train recruiters: Teach responsible interpretation of AI outputs.

- Close the loop: Investigate issues, remediate, and document fixes.
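The “instrument agentic systems” step can start with a tamper-evident decision log. The sketch below, assuming hypothetical agent names and event fields, chains each entry to the previous one with a SHA-256 hash, so altering or deleting any past record breaks verification. It makes tampering detectable rather than impossible; true immutability still depends on write-once storage.

```python
import hashlib
import json
from datetime import datetime, timezone

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


class DecisionLog:
    """Append-only, hash-chained record of agent actions (hypothetical schema)."""

    def __init__(self) -> None:
        self._entries: list[dict] = []

    @staticmethod
    def _digest(entry: dict) -> str:
        # Hash every field except the stored hash, with keys sorted for stability.
        payload = {k: v for k, v in entry.items() if k != "hash"}
        encoded = json.dumps(payload, sort_keys=True).encode("utf-8")
        return hashlib.sha256(encoded).hexdigest()

    def append(self, agent: str, action: str, inputs: dict, output: dict) -> None:
        entry = {
            "ts": datetime.now(timezone.utc).isoformat(),
            "agent": agent,
            "action": action,
            "inputs": inputs,
            "output": output,
            "prev_hash": self._entries[-1]["hash"] if self._entries else GENESIS,
        }
        entry["hash"] = self._digest(entry)
        self._entries.append(entry)

    def verify(self) -> bool:
        """Recompute every hash; any edited or removed entry breaks the chain."""
        prev = GENESIS
        for entry in self._entries:
            if entry["prev_hash"] != prev or entry["hash"] != self._digest(entry):
                return False
            prev = entry["hash"]
        return True


log = DecisionLog()
log.append("screening-agent", "advance", {"candidate_id": "c-001"},
           {"score": 0.81, "human_review": True})
log.append("scheduling-agent", "book_interview", {"candidate_id": "c-001"},
           {"status": "booked"})
print(log.verify())                        # True
log._entries[0]["output"]["score"] = 0.95  # simulate someone editing history
print(log.verify())                        # False
```

Because each entry records which agent acted, on what inputs, and with what result, the same log tells you which downstream decisions to revisit when you roll an agent back.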

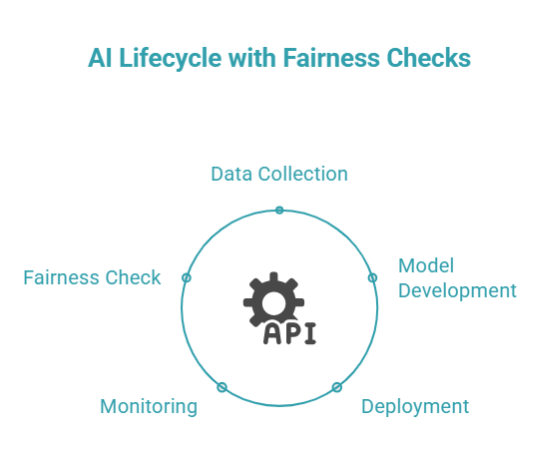

What good looks like: a lifecycle view

A fair AI hiring pipeline looks like this:

- Define → Collect → Validate → Screen → Interview → Decide → Monitor

At each stage: inventory data, confirm validity, run impact checks, and keep humans where judgment is consequential. Apply the four-fifths rule at every funnel stage and verify outcomes continuously.
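Running the four-fifths rule at every stage, rather than only on final offers, shows where a disparity enters the funnel. This is a sketch with invented counts for two placeholder groups; the stage names and `funnel_report` are hypothetical.

```python
STAGES = ("applied", "screened_in", "interviewed", "offered")


def funnel_report(counts: dict[str, dict[str, int]], stages: tuple[str, ...] = STAGES,
                  threshold: float = 0.8) -> list[dict]:
    """Impact ratio for each stage-to-stage step, plus the end-to-end funnel.

    counts[group][stage] is the number of candidates in `group` who reached `stage`.
    """
    steps = list(zip(stages, stages[1:])) + [(stages[0], stages[-1])]
    rows = []
    for start, end in steps:
        rates = {g: c[end] / c[start] for g, c in counts.items() if c[start] > 0}
        top = max(rates.values(), default=0.0)
        if top == 0:
            continue  # nobody advanced at this step, so ratios are undefined
        for group, rate in rates.items():
            ratio = rate / top
            rows.append({"step": f"{start} -> {end}", "group": group,
                         "impact_ratio": round(ratio, 2), "flag": ratio < threshold})
    return rows


# Invented counts for two placeholder groups.
counts = {
    "group_a": {"applied": 1000, "screened_in": 400, "interviewed": 200, "offered": 50},
    "group_b": {"applied": 1000, "screened_in": 380, "interviewed": 120, "offered": 30},
}
for row in funnel_report(counts):
    print(row)
```

With these illustrative numbers, screening looks fine (0.95), but the step from screened-in to interviewed drops to 0.63 and accounts for most of the overall 0.60 offer ratio. Per-stage counts shrink quickly, so small groups deserve statistical tests alongside the ratio.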

This isn’t theory—it’s an operating model that scales.

Objections you’ll hear—and how to answer them

When stakeholders resist, point them to your AI ethics framework.

- “Our vendor is certified.” Certifications help but don’t replace monitoring outcomes in your context.

- “We don’t collect demographic data.” Then you can’t manage fairness. Use privacy-preserving estimation or third-party audits.

- “Humans reduce efficiency.” Place them where judgment matters; the cost of bias is higher.

- “Bias is inevitable.” Some imperfection is inevitable; unmanaged bias is not.

Culture: the part the model can’t fix

No amount of AI in HR tech can override culture. If leaders reward speed above all, shortcuts will win. If recruiters fear escalation, issues stay buried. Treat fairness like security: an agenda item, a budget line, and a leadership KPI. Celebrate teams that find flaws and fix them.

The road ahead

Agentic AI systems will dominate recruiting workflows. Regulations will tighten. Winners will be those who fuse efficiency with equity, who can show their math, and who build trust with candidates and employees.

The work is not exotic. It’s disciplined: inventory tools, define validity, monitor impact, test counterfactuals, maintain human oversight, and improve continuously.

Technical appendix: validity, calibration, and guardrails

- Validity types: Content (job tasks), criterion (performance correlation), construct (capability being measured).

- Calibration: Align predicted scores with actual outcomes. Poorly calibrated scores look more (or less) certain than they are, which breeds misplaced confidence.

- Drift: Monitor input distributions and outputs for fairness degradation over time.

- Agentic guardrails: Separation of duties, human veto points, immutable logs, and rollback mechanisms.

- Generative AI hygiene: Standardize prompts, strip demographic signals, run red-team tests.

- Post-hire validation: Track performance, retention, and promotion outcomes by cohort to verify model predictions.
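Two of the appendix checks, calibration by cohort and drift, can be sketched in a few lines. The code below is an illustration under stated assumptions: scores are probabilities in [0, 1], outcomes are binary post-hire success labels, group labels and bin counts are hypothetical, and drift is measured with the population stability index (PSI), a technique borrowed from credit-risk monitoring rather than anything the appendix prescribes.

```python
import math
from bisect import bisect_right
from collections import defaultdict


def calibration_error_by_group(scores, outcomes, groups, n_bins=5):
    """Expected calibration error (ECE) per group.

    Within each score bin, compare the average predicted score to the observed
    success rate; weight each bin's gap by its share of the group's candidates.
    """
    by_group = defaultdict(list)
    for s, y, g in zip(scores, outcomes, groups):
        by_group[g].append((s, y))
    result = {}
    for group, pairs in by_group.items():
        bins = defaultdict(list)
        for s, y in pairs:
            bins[min(int(s * n_bins), n_bins - 1)].append((s, y))
        total = 0.0
        for members in bins.values():
            avg_score = sum(s for s, _ in members) / len(members)
            hit_rate = sum(y for _, y in members) / len(members)
            total += len(members) / len(pairs) * abs(avg_score - hit_rate)
        result[group] = total
    return result


def population_stability_index(baseline, current, edges):
    """PSI between two score samples over fixed bin edges (values assumed within edges)."""
    def shares(values):
        counts = [0] * (len(edges) - 1)
        for v in values:
            idx = min(max(bisect_right(edges, v) - 1, 0), len(counts) - 1)
            counts[idx] += 1
        return [max(c / len(values), 1e-6) for c in counts]  # floor avoids log(0)

    expected, actual = shares(baseline), shares(current)
    return sum((a - e) * math.log(a / e) for e, a in zip(expected, actual))


# Toy data: identical scores for both groups, different real outcomes.
scores = [0.9, 0.9, 0.1, 0.1, 0.9, 0.9, 0.1, 0.1]
outcomes = [1, 1, 0, 0, 1, 0, 0, 1]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
ece = calibration_error_by_group(scores, outcomes, groups)
print({g: round(e, 2) for g, e in ece.items()})  # {'a': 0.1, 'b': 0.4}
print(population_stability_index([0.1, 0.2, 0.3, 0.7], [0.6, 0.7, 0.8, 0.9], [0.0, 0.5, 1.0]))
```

A common rule of thumb treats PSI above 0.25 as a significant shift. A calibration gap that differs sharply between groups, as in the toy data, means the same score carries a different meaning for different candidates.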

Quick glossary for busy leaders

- Adverse impact: Statistical signal that a group’s selection rate is materially lower than that of the group with the highest selection rate.

- Calibration: Ensuring predicted probabilities match real outcomes.

- Construct validity: Evidence that a tool measures the capability it claims to measure.

- Agentic AI: Autonomous multi-step systems requiring oversight.

FAQs

Q1: What causes bias in AI hiring systems?

Bias originates from imbalanced training data, design assumptions, and the way humans interpret model output, which is what makes AI bias in hiring a systemic challenge.

Q2: How can companies audit AI hiring tools for fairness?

They can run adverse impact analyses (the four-fifths rule) at every funnel stage, commission independent bias audits, and run counterfactual tests to detect and correct skewed candidate outcomes.

Q3: What laws regulate AI-based recruitment in the US and EU?

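In the US, Title VII and the UGESP still apply to automated tools, alongside newer rules such as NYC Local Law 144 (annual bias audits and candidate notices), the Illinois AI Video Interview Act, and California’s ADMT regulations. In the EU, the AI Act classifies employment AI as high-risk, requiring risk management, transparency, and human oversight.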

Q4: What is the four-fifths rule in hiring compliance?

The four-fifths rule flags potential adverse impact when a group’s selection rate is less than 80% of the rate for the group with the highest selection rate.

Q5: How does adverse impact analysis detect bias?

Adverse impact analysis compares selection rates across groups to quantify and expose hidden inequities in automated decision-making.

Closing takeaway

Bias in AI hiring isn’t a technical footnote. It’s a leadership choice. Build systems that see people clearly, measure what matters, and govern with humility. Keep a human hand on the tiller. Done right, automation becomes an engine of inclusion rather than a megaphone for yesterday’s mistakes.
