Why This Story Matters Now

Hiring decides the future of a company one person at a time. When it works, everything feels lighter: projects ship on time, culture improves, and customers stay. When it doesn’t, the whole machine grinds, and the fixes get expensive. Over a century, we’ve tried every tool imaginable to make hiring smarter — tests, interviews, job boards, software, and now AI. Some helped. Some hurt. All of it taught us something. Taken together, these shifts trace the evolution of the recruitment process from manual judgments to data-informed decisions.

Today, a new class of technology is stepping into the arena: agentic systems — software that doesn’t just analyze or recommend but acts. These systems can gather requirements, source candidates, schedule interviews, and keep a process moving without waiting for a human to click the next button. If legacy ATSs were filing cabinets with search bars, and “assistive AI” was a helpful junior analyst, agentic systems are the project manager who drives the whole workflow to completion.

This isn’t hype for hype’s sake. Talent teams are overwhelmed by volume, squeezed by compliance, and judged on candidate experience in a market that expects speed and transparency. Understanding how we got here, from manual craft to autonomous orchestration, gives you the map to lead your organization into the next chapter with eyes open and hands steady, and helps you separate signal from noise.

Throughout this piece, we explain emerging concepts in plain language, using analogies and real examples. No technical training is assumed, only curiosity.

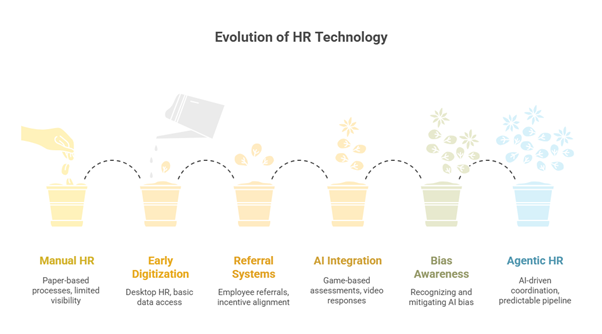

The Manual Era (1910s–1960s) — Craft, Judgment, and Paper

Long before dashboards and APIs, hiring was intimate and analog. Managers read paper résumés, called references by landline, and kept notes in manila folders. Decisions leaned on judgment shaped by experience and — let’s be honest — bias shaped by networks.

Two ideas from this era still matter:

- Scientific selection: Early industrial psychologists sold paper-and-pencil “mental ability” and “temperament” tests. Factories, railroads, and offices used them to predict safety, speed, and reliability. These weren’t perfect, but they planted a durable belief: hiring can be systematic, not just social.

- Assessment centers: Born in wartime intelligence, group exercises tested how people reason under stress and collaborate without clear authority. After WW2, corporations adapted them for management trainees. The method reinforced a second belief: hiring can be observed, not just inferred from a résumé.

The manual era was slow and inconsistent. It also reminded us that people aren’t data points. The strongest recruiters learned to see potential as well as pedigree — a lesson worth preserving as software accelerates everything else.

The First Digital Footsteps (1970s–1980s) — Databases, OCR, and Desktop HR

By the 1970s, big employers faced a paper flood. Early proprietary applicant databases arrived. Clerks keyed candidate attributes into green-screen terminals so recruiters could print lists by skill, school, or location. Optical character recognition (OCR) experiments made paper résumés searchable. These were fragile, expensive systems, but they proved two things:

- Search beat stacks. Even a basic query is better than sifting paper by hand.

- Data structure matters. If the fields are wrong, the results are wrong, no matter how fast the computer is.

Then came a pivotal shift: client-server HRMS. PeopleSoft, founded in 1987, built an HR system designed for desktop users, not just mainframe gurus. HR could run queries, update records, and produce reports without opening IT tickets. One oil major digitized vaults of paper and cut HR administrative headcount by double-digit percentages. This era showed that technology could create ROI in HR, not just recordkeeping comfort.

The Web Recruiting Boom (1994–early 2000s) — From Scarcity to Glut

The internet upended the funnel. Job boards made openings visible to anyone, anywhere. Corporate “Careers” pages went live. Referral portals scaled beyond the office grapevine. Recruiters got reach like never before — and a new problem: too many résumés.

The power balance shifted, too. Candidates could research companies, compare offers, and speak openly about experience. Employer brand became a strategic lever, not a side project. The lesson here is subtle: distribution improves access, but it doesn’t improve selection by itself. Volume without filters leads to noise. Noise without feedback leads to ghosting. And ghosting damages reputation.

SaaS ATS Becomes Mission Control (1996–2010) — Process at Scale

As volumes grew, cloud ATS platforms standardized workflows:

- Job creation and approvals

- Posting and syndication

- Screening and dispositioning

- Scheduling and communication

- Reporting and compliance logging

For large enterprises, adoption became near-universal. ATS platforms delivered control and consistency. Yet they mainly digitized what we already did. They made it faster to carry résumés from step to step — but they didn’t meaningfully improve who got through. Process scaled. Judgment did not.

Plain-language analogy: An ATS is a conveyor belt with scanners. It moves candidates along, logs every stop, and keeps auditors happy. If you load the belt with marginal applicants, you still get a neat log of marginal applicants.

Machine Learning & “Assistive AI” (2010–2019) — Faster, Smarter, Still Fragile

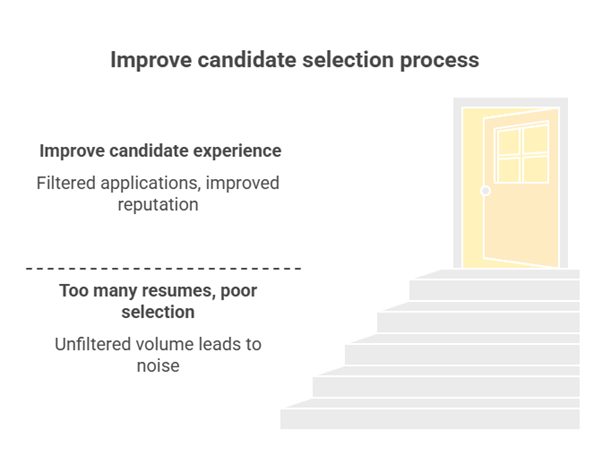

The next wave added algorithms. Profiles, keywords, and behavioral signals informed shortlists. Chatbots answered FAQs at midnight. Video interviews could be scored on speech and response patterns. Some results were dramatic: faster cycles, lower cost-per-hire, and better candidate engagement. This early wave of AI in talent acquisition sped up sourcing, screening, and candidate communications without changing ownership of decisions.

But the hard lessons cut deep:

- Bias in, bias out. Train on historical decisions, and you risk repeating historical discrimination at machine speed.

- Opacity breeds distrust. If recruiters and candidates cannot explain a score, they will not trust it — especially when outcomes feel unfair.

- Experience still matters. A well-trained chatbot that never escalates as promised is worse than a voicemail box. The tool must respect the human on the other side.

“Assistive AI” proved its value as a power tool. Like a nail gun, it speeds up work when the blueprint is sound. It also makes mistakes at scale if the blueprint is flawed.

The Current Tensions (2020–2024) — Volume, Velocity, and Vulnerability

Most enterprise funnels today look like a stack of overlapping layers:

- ATS at the core for compliance and workflow

- Sourcing tools and social platforms for reach

- Assessments and chat interfaces for engagement

- Analytics dashboards for leadership visibility

Three tensions dominate:

- Volume vs. precision. Teams face an avalanche of applicants and requisitions per recruiter. Quality signals get buried.

- Speed vs. fairness. Business pushes for faster fills. Regulators and candidates push for transparency and equitable treatment.

- Experience vs. cost. Better communication and feedback loops cost time or tooling. Budgets rarely expand to match expectations.

Teams try to balance efficiency gains from recruitment process automation with the human judgment candidates still expect.

Candidate sentiment reflects the cracks: long cycles, poor communication, and perceived black boxes. Internally, TA leaders wrestle with measurement that still overweights activity (reqs closed, days to fill) and underweights outcomes (quality, performance, retention).


What “Agentic” Actually Means — From Maps to GPS to Chauffeur

In plain terms: if traditional software is a paper map and assistive AI is GPS with real-time traffic, then agentic systems are a trusted chauffeur. You still choose the destination and constraints. The system navigates, reroutes, refuels, and gets you there, keeping you in the loop and asking for confirmation when a decision carries material risk. In practice, agentic orchestration becomes end-to-end recruitment process automation with explicit human approval gates.

Agentic AI in hiring means the system can decide and act on the next best step in a workflow without waiting for a human to push it forward. Examples:

- After parsing a job intake, it drafts the posting, selects channels, and schedules a hiring-manager review.

- When applications arrive, it screens against must-haves, requests missing information, and books first-round slots on both sides’ calendars.

- If interviewers lag on feedback, it nudges them, flags risk to the recruiter, and proposes times to debrief.

- If a candidate drops, it backfills from a warm pipeline and reprioritizes outreach.

The key difference is autonomy within guardrails. The system is not just ranking; it is orchestrating.
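The examples above boil down to one repeated decision: given where an application stands, what happens next? Here is a minimal Python sketch of that decision. The `Application` fields, the action names, the must-have keys, and the 48-hour feedback window are all hypothetical assumptions for illustration, not any product's API. The function only chooses an action; executing it is left to connected tools.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta
from typing import Optional

# Hypothetical per-application state an agent might track (illustrative only).
@dataclass
class Application:
    candidate: str
    answers: dict = field(default_factory=dict)  # screening answers: key -> True/False
    withdrawn: bool = False
    interview_done_at: Optional[datetime] = None
    feedback_received: bool = False

# Assumed inputs: must-haves from the job intake and a team feedback norm.
MUST_HAVES = {"work_authorization", "payments_experience"}
FEEDBACK_WINDOW = timedelta(hours=48)

def next_best_action(app: Application, now: datetime) -> str:
    """Choose one next step; the order of the checks encodes priority."""
    if app.withdrawn:
        return "backfill_from_warm_pipeline"
    missing = MUST_HAVES - set(app.answers)
    if missing:
        return "request_missing_info:" + ",".join(sorted(missing))
    if not all(app.answers[k] for k in MUST_HAVES):
        return "route_to_recruiter_review"  # a human decides on a failed must-have
    if app.interview_done_at is None:
        return "propose_first_round_slots"
    if not app.feedback_received and now - app.interview_done_at > FEEDBACK_WINDOW:
        return "nudge_interviewer_and_flag_recruiter"
    return "wait"
```

Note that a failed must-have routes to a recruiter rather than triggering an automatic rejection: autonomy stops where the stakes rise.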

Inside an Agentic Hiring System — Plain-Language Architecture

You don’t need a PhD to understand how these systems work. Five layers do most of the heavy lifting:

- Understanding layer: Converts messy inputs into structured intent. Think of it as the translator that turns “We need a backend dev who can wrangle payments” into a canonical profile with must-haves, nice-to-haves, and compliance flags.

- Reasoning layer: Chooses the next action. It weighs goals (fill by date X), rules (no screening without consent), and context (calendar availability, candidate responses), then picks what to do next.

- Action layer: Executes in connected tools — posting to boards, sending messages, updating stages, booking rooms, and generating summaries.

- Memory layer: Learns from outcomes. It notices which channels yield high-quality interviews, which questions stall candidates, and which interviewers are chronically late with feedback.

- Guardrail layer: Keeps everything safe and auditable — policies, checklists, human-in-the-loop approvals, and logs that show why the system did what it did.

If an ATS is a conveyor belt, an agentic system is the conductor making sure every instrument plays on time, in key, and with the right volume — while recording the performance for quality review.
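To make the five layers less abstract, here they are as a few small Python functions. This is a toy sketch under stated assumptions: the intake format, action names, ten-applicant threshold, approval set, and in-memory audit log are hypothetical. A real system would likely use a language model or parser for understanding and call ATS and calendar integrations for actions.

```python
from dataclasses import dataclass, field

@dataclass
class Profile:
    """Output of the understanding layer: structured intent."""
    title: str
    must_haves: list
    nice_to_haves: list

def _split(text: str) -> list:
    return [s.strip().lower() for s in text.split(",") if s.strip()]

def understand(intake: dict) -> Profile:
    """Understanding layer: turn a loose intake form into a structured profile."""
    return Profile(intake["title"].strip().title(),
                   _split(intake.get("must", "")),
                   _split(intake.get("nice", "")))

def reason(profile: Profile, state: dict) -> str:
    """Reasoning layer: pick the next action from goals, rules, and context."""
    if not profile.must_haves:
        return "clarify_intake_with_manager"
    if not state.get("posted"):
        return "post_job"
    if state.get("applicants", 0) < 10:  # assumed pipeline threshold
        return "source_more"
    return "schedule_screens"

NEEDS_APPROVAL = {"post_job"}  # guardrail policy (hypothetical)
audit_log = []                 # guardrail layer: what happened and why

def act(action: str, profile: Profile, approved: bool = False) -> bool:
    """Action layer, wrapped by the guardrail check and audit log."""
    if action in NEEDS_APPROVAL and not approved:
        audit_log.append((action, "blocked: awaiting human approval"))
        return False
    audit_log.append((action, f"executed for {profile.title}"))
    return True  # a real system would call ATS or calendar integrations here

@dataclass
class Memory:
    """Memory layer: track which channels yield quality interviews."""
    channel_stats: dict = field(default_factory=dict)  # channel -> (hits, total)

    def record(self, channel: str, quality_interview: bool) -> None:
        hits, total = self.channel_stats.get(channel, (0, 0))
        self.channel_stats[channel] = (hits + int(quality_interview), total + 1)

    def best_channel(self) -> str:
        return max(self.channel_stats,
                   key=lambda c: self.channel_stats[c][0] / self.channel_stats[c][1])
```

The design choice worth copying is that every action passes through the guardrail and leaves a log entry, whether it ran or was blocked.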

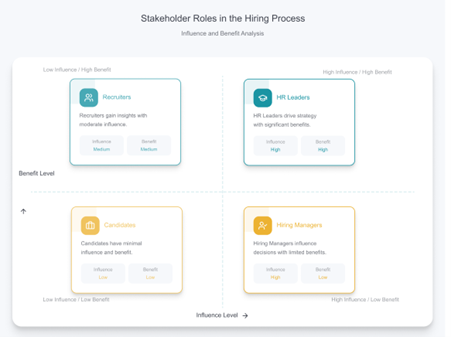

What Changes for Each Stakeholder

For Recruiters

- From task execution to talent strategy. Less scheduling and status chasing. More market mapping, hiring-manager coaching, and experience design.

- Fewer tabs, better context. The system surfaces what matters: risks, bottlenecks, and the next best action for high-impact reqs.

- New skills. Prompting, interpreting model behavior, and using data to drive decisions become part of the craft.

For Hiring Managers

- Cleaner intake. Agents interview managers, clarify must-haves, and produce a “role contract” everyone signs.

- Less friction. Calendar chaos fades. Decision meetings happen on time with concise, standardized candidate briefs.

- Greater accountability. The system highlights where manager delays stall outcomes.

For Candidates

- No more black holes. Every application gets a receipt. Every stage change triggers clear, plain-English communication.

- Faster decisions. Reqs can close in days rather than months when orchestration removes waiting and rework.

- Fairer treatment. Consistent questions, consistent rubrics, and auditable decisions reduce inconsistency and bias.

For HR Leadership

- Outcome dashboards, not vanity metrics. Track quality-of-hire, time-to-productivity, diversity movement through stages, and cost per successful outcome.

- Governance by design. Logs, approvals, and explainability are built-in, not glued on at audit time.

Benchmarks, Signals, and “Agent-Washing”

Every new wave brings marketing fog. Two truths can coexist:

- True agentic systems can shrink cycle time dramatically (sometimes to single-digit days for common roles) and offload a meaningful share of coordination hours.

- Agent-washing is real. If a tool can’t act across systems, can’t remember context, and can’t explain its decisions, it’s just another rules engine with a fancy label.

Practical tests to separate signal from noise

- Can it propose and execute end-to-end tasks (intake → posting → screening → scheduling) with explicit human approval gates?

- Does it have memory (learns which channels, questions, and sequences work best)?

- Are there guardrails (role-based permissions, bias checks, audit trails, rollback)?

- Can it explain why it did something in language a recruiter and auditor both understand?

Regulation & Risk — Building Trustable Automation

Automation without accountability is a non-starter in enterprise TA, especially given ongoing concerns about AI bias in recruitment. Accountability has to be designed at three levels:

- Policy: What decisions may an agent make on its own? Where must a human approve? Which attributes are off-limits in screening?

- Process: How do we evidence fairness? How do we handle data subject requests? How do we pause, review, and correct the system when something looks off?

- Technology: Do we have logs, feature traceability, bias checks, and explanation tooling? Are consent and retention rules enforced by design?
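Policy answers like these are easiest to audit when they live in data rather than in people's heads. A minimal sketch, assuming a hypothetical in-house policy structure (not a standard schema): each proposed agent action is classified as autonomous, approval-required, or forbidden by default, and off-limits attributes are stripped before any screening logic sees a record.

```python
# Hypothetical policy expressed as plain Python data (not a standard schema).
POLICY = {
    "autonomous": {"send_status_update", "schedule_interview", "request_documents"},
    "human_approval": {"reject_candidate", "advance_to_offer", "change_rubric"},
    "off_limits_attributes": {"age", "gender", "ethnicity", "marital_status", "photo"},
}

def permitted(action: str) -> str:
    """Return 'auto', 'needs_approval', or 'forbidden' for a proposed agent action."""
    if action in POLICY["autonomous"]:
        return "auto"
    if action in POLICY["human_approval"]:
        return "needs_approval"
    return "forbidden"  # default-deny: anything unlisted is not allowed

def screening_view(candidate: dict) -> dict:
    """Drop off-limits attributes before any screening logic sees the record."""
    return {k: v for k, v in candidate.items()
            if k not in POLICY["off_limits_attributes"]}
```

Default-deny keeps new, unreviewed actions from slipping through. Dropping fields is necessary but not sufficient: proxies such as graduation year can still reveal age, which is why bias checks remain part of the process.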

A practical risk register for agentic hiring includes:

- Bias and disparate impact in screening or ranking

- Data privacy violations in sourcing and communications

- Explainability gaps that undermine stakeholder trust

- Process drift, where agents optimize speed at the expense of quality

- Security and access across interconnected tools

Mitigation is straightforward, provided it is disciplined:

- Use representative training and evaluation data; retest regularly.

- Enforce human-in-the-loop at decision points that materially affect outcomes.

- Maintain model cards and change logs.

- Provide candidate-facing explanations in plain language.

- Run red-team exercises to find failure modes before they find you.
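Retesting for disparate impact can start with a simple, widely used screen: the “four-fifths rule” from the US Uniform Guidelines on Employee Selection Procedures, under which a group's selection rate below 80% of the highest group's rate is generally regarded as evidence of adverse impact. The sketch below applies it to one screening stage. The group labels and counts are invented, and the rule is a first-pass heuristic; small samples call for statistical testing and legal review.

```python
def selection_rates(outcomes: dict) -> dict:
    """outcomes maps group -> (selected, considered); returns group -> rate."""
    return {g: sel / n for g, (sel, n) in outcomes.items() if n > 0}

def impact_ratios(outcomes: dict) -> dict:
    """Each group's selection rate divided by the highest group's rate."""
    rates = selection_rates(outcomes)
    if not rates:
        return {}
    top = max(rates.values())
    if top == 0:
        return {g: 1.0 for g in rates}  # nobody selected: nothing to compare
    return {g: r / top for g, r in rates.items()}

def flag_adverse_impact(outcomes: dict, threshold: float = 0.8) -> list:
    """Groups whose impact ratio falls below the four-fifths threshold."""
    return sorted(g for g, ratio in impact_ratios(outcomes).items() if ratio < threshold)

# Invented screening results for one requisition: group -> (advanced, screened).
screen = {"group_a": (48, 120), "group_b": (24, 90), "group_c": (30, 80)}
flagged = flag_adverse_impact(screen)  # ['group_b']: 0.27 vs 0.40 is a ratio of about 0.67
```

A flag is a prompt to investigate the stage (questions, rubric, data), not a verdict on its own.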

Sector Snapshots — How Context Shapes Adoption

Healthcare

Chronic shortages in nursing and allied health roles created urgency. Agentic systems pre-screen 24/7, manage license checks, and schedule multi-party shifts across facilities. Guardrails focus on privacy and credential validation. The result is shorter time-to-start and less recruiter burnout.

Financial Services

Banks and payment companies are enthusiastic yet cautious. Compliance and fairness audits drive strict human approvals on screens and final decisions. Early wins are in orchestration: automating documentation collection, background checks, and scheduling across secure environments.

Manufacturing & Supply Chain

High-volume plants and distribution networks lean on agents for hourly hiring. Conversational flows reduce applicant drop-off, while scheduling agents optimize shift coverage. Unions and fairness concerns require transparent scoring and accessible candidate explanations.

Case Studies, Told Straight

1) The PeopleSoft Pivot (Late 1980s)

After implementing one of the first client-server HRMS platforms, a major oil company digitized decades of paper records. Within 18 months, HR administrative headcount was reduced by double-digit percentages and business leaders gained self-service visibility into workforce data for the first time.

2) Cisco’s Referral Flywheel (1999)

Cisco launched one of the first enterprise-wide online referral portals in 1999. At its peak, employee referrals accounted for 25–30% of all external hires and delivered significantly lower cost-per-hire than agencies or job boards — saving the company millions of dollars annually in recruiting fees.

3) Unilever’s Early AI Bet (2017)

Unilever replaced résumé screens with game-based assessments and AI-scored video responses for early-career roles. Time-to-hire collapsed, recruiter hours were freed up, and diversity improved at the final stage. The win came from redesigning the funnel, not just swapping in a model.

4) Amazon’s AI Lesson (Mid-2010s)

An internal ML tool trained on historical résumés down-ranked graduates from women’s colleges, and the program was scrapped. The lesson is now canon: history encodes bias. If you teach an algorithm your past, it will give you your past. Only deliberate design and ongoing audits produce a better future.

5) Global Bank, Agentic Orchestration (2024)

A top-10 global bank piloted an agentic hiring system across 200+ concurrent mid-to-senior requisitions. The agent handled intake, interview scheduling, feedback nudges, and pipeline updates. Result: recruiter coordination time fell by more than 30% and pipeline predictability improved markedly. The biggest cultural shift wasn’t the time saved; it was knowing exactly where every requisition stood, every day.

A Practical Playbook — From Idea to Impact

Phase 0: Groundwork (4–6 weeks)

- Map the journey for 3–5 high-volume roles.

- Identify top bottlenecks (wait times, rework, stage leakage).

- Define “must-have vs. nice-to-have” capabilities for an agent.

- Align on guardrails and approval points with Legal and your HRBPs.

Phase 1: Pilot (6–12 weeks)

- Start with coordination-heavy tasks: intake, scheduling, reminders, and candidate updates.

- Keep human approvals for screens and offers.

- Measure: time-to-schedule, recruiter hours saved, candidate response times, and satisfaction.

Phase 2: Expand (1–2 quarters)

- Add sourcing automations with consent and opt-out.

- Introduce structured screenings with explainable rubrics.

- Begin portfolio-level optimization (which channels, which sequences, which messages).

Phase 3: Scale & Institutionalize

- Standardize agent playbooks across business units.

- Add self-serve hiring-manager workflows; the agent coaches and enforces standards.

- Integrate governance into quarterly business reviews: bias checks, exception logs, and corrective actions.

Success metrics that actually matter

- Time-to-first-interview and time-to-decision (role-level targets).

- Candidate throughput by stage with drop-off reasons.

- Quality-of-hire proxy: new-hire ramp, first-year retention, manager satisfaction.

- Diversity movement across stages (conversion, not just totals).

- Recruiter load (reqs per recruiter, hours per req, coordination minutes saved).

- Auditability (percentage of decisions with explanations logged).
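Several of these metrics fall out of an ordinary event log. A minimal sketch, assuming a hypothetical log format of (candidate, event, date, explanation) rows and treating first-interview invites, rejections, and offers as “decisions”; both the schema and that definition are assumptions to adapt to your ATS.

```python
from datetime import date
from statistics import median

# Hypothetical log rows: (candidate, event, day, logged explanation or None).
events = [
    ("c1", "applied", date(2024, 3, 1), None),
    ("c1", "first_interview", date(2024, 3, 6), "met all must-haves"),
    ("c2", "applied", date(2024, 3, 2), None),
    ("c2", "first_interview", date(2024, 3, 11), "met all must-haves"),
    ("c3", "applied", date(2024, 3, 4), None),
    ("c3", "rejected", date(2024, 3, 5), None),  # a decision with no explanation logged
]
DECISIONS = {"first_interview", "rejected", "offer"}  # assumed definition

def median_days_to_first_interview(log) -> float:
    """Median gap between applying and a first interview, in days."""
    applied = {c: d for c, e, d, _ in log if e == "applied"}
    gaps = [(d - applied[c]).days for c, e, d, _ in log
            if e == "first_interview" and c in applied]
    return median(gaps)

def auditability_pct(log) -> float:
    """Share of decisions that carry a logged explanation."""
    decisions = [row for row in log if row[1] in DECISIONS]
    if not decisions:
        return 100.0
    explained = sum(1 for row in decisions if row[3])
    return 100 * explained / len(decisions)
```

The unexplained rejection in this toy log is exactly the kind of gap the auditability metric is meant to surface.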

Build vs. Buy — Choosing the Path That Fits

Buy when:

- You need results this quarter.

- Your data is reasonably clean, and your ATS connects well.

- You’d rather adopt a vendor’s guardrails than invent your own.

Build when:

- Hiring is strategic and unique enough to justify custom logic.

- You have platform engineering, data governance, and MLOps maturity.

- You want deep integration across internal systems (security, finance, and internal mobility).

Hybrid is common: buy core orchestration and extend with internal policies, prompts, and integrations that fit your culture and compliance regime.

Non-negotiables either way

- Clear RACI for agent actions vs. human approvals

- Model and prompt versioning, with rollback

- Sandbox and dark-launch capabilities to test safely

- Plain-language explanations for candidates and auditors
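Versioning with rollback can be as simple as an append-only history of agent configurations. A minimal sketch; the class, the config keys, and the model names are hypothetical placeholders, not a specific vendor's or library's API.

```python
from dataclasses import dataclass, field

@dataclass
class VersionedConfig:
    """Hypothetical append-only registry for agent prompts and model settings."""
    history: list = field(default_factory=list)  # entries: (version, config, note)

    def publish(self, config: dict, note: str) -> int:
        version = len(self.history) + 1
        self.history.append((version, dict(config), note))  # store a copy
        return version

    @property
    def active(self) -> dict:
        return self.history[-1][1]

    def rollback(self, to_version: int, note: str) -> int:
        """Re-publish an earlier version as a new version; never delete history."""
        _, config, _ = self.history[to_version - 1]
        return self.publish(config, f"rollback to v{to_version}: {note}")

reg = VersionedConfig()
reg.publish({"model": "model-a", "screen_prompt": "Check must-haves only."}, "initial")
reg.publish({"model": "model-b", "screen_prompt": "Check must-haves and tone."}, "new model")
reg.rollback(1, "v2 drifted on hourly roles")
```

Rolling back re-publishes the old configuration instead of erasing the new one, so auditors can still see what ran, when, and why it changed.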

Modern vendors package orchestration, guardrails, and reporting so you can adopt AI recruitment software with lower change-management cost.

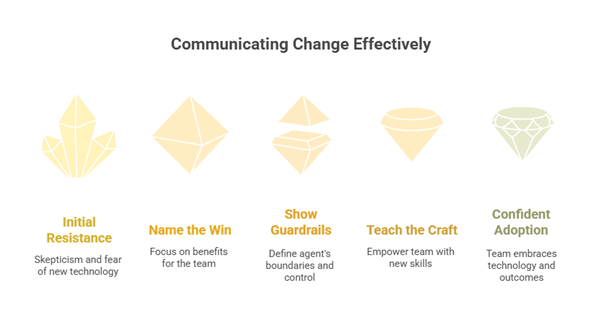

How to Communicate Change — Bring People With You

Technology fails without trust. Three communication moves make adoption stick:

- Name the win. “We’re giving you back 10 hours a week” lands better than “We’re implementing an AI agent.”

- Show the guardrails. Publish where the agent can act, where it must ask, and how recruiters can override. Confidence grows when people see the brakes.

- Teach the craft. Short workshops on prompting, rubric design, and interpreting agent outputs turn skepticism into ideas.

Give your team a simple mantra: “We own outcomes; the agent owns the chores.”

Candidate Experience — The Non-Negotiable Advantage

Agentic systems can end the two worst parts of today’s funnel: silence and ambiguity. A humane, modern experience looks like this:

- Immediate acknowledgement on applying, with a clear next step.

- Predictable timelines by stage. If something slips, an honest update.

- Feedback by design for finalists — short, respectful, and specific.

- Accessibility across devices and languages.

- Dignity in rejection. People remember how you say “not this time.”

This costs less than you think when tasks are automated. It also pays in brand equity and future applicant quality.
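Predictable timelines and honest slip notices can be generated from stage targets. A minimal sketch, assuming hypothetical per-stage target days and message wording (including the two-business-day follow-up promise); set your own targets and have a human review the templates.

```python
from datetime import date, timedelta

# Hypothetical per-stage targets in days (assumption; set your own).
STAGE_TARGET_DAYS = {"applied": 3, "screening": 5, "interviewing": 10, "decision": 5}

def status_update(name: str, stage: str, entered: date, today: date) -> str:
    """Plain-English update that names a slip instead of going silent."""
    target = STAGE_TARGET_DAYS[stage]
    due = entered + timedelta(days=target)
    if today <= due:
        return f"Hi {name}, you're in {stage}. Expect your next step by {due.isoformat()}."
    return (f"Hi {name}, {stage} is taking longer than our {target}-day target. "
            "You haven't been forgotten; we'll update you again within 2 business days.")
```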

The KPI Shift — From Activity to Outcomes

Old dashboards counted movement. New dashboards must measure value:

- From “days to fill” → “days to ramp.” Not just speed to seat, but speed to contribution.

- From “reqs closed” → “successful outcomes.” Retention, performance signals, and manager ratings 90 days in.

- From “applications per posting” → “qualified interviews per week.” Volume is vanity; throughput of quality is sanity.

- From “top of funnel diversity” → “stage-by-stage equity.” Where do people drop? Why? What did we change?

Agentic systems make this feasible because they log the why behind each step. That traceability unlocks better decisions at the portfolio level.
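Stage-by-stage equity means comparing conversion at each transition rather than headcount at the top of the funnel. A minimal sketch with invented counts for two groups: it computes stage-to-stage conversion and reports the transition where the groups diverge most, which is where to ask “why?” first. Stage names and numbers are illustrative assumptions.

```python
# Stage order and invented counts of candidates reaching each stage, by group.
STAGES = ["applied", "screened", "interviewed", "offered"]
funnel = {
    "group_a": [200, 120, 60, 15],
    "group_b": [180, 110, 30, 6],
}

def conversion(counts: list) -> dict:
    """Stage-to-stage conversion, e.g. {'applied->screened': 0.6, ...}."""
    return {f"{STAGES[i]}->{STAGES[i + 1]}": counts[i + 1] / counts[i]
            for i in range(len(STAGES) - 1) if counts[i] > 0}

def widest_gap(funnel: dict) -> str:
    """Transition where conversion rates differ most across groups."""
    rates = [conversion(counts) for counts in funnel.values()]
    shared = [t for t in rates[0] if all(t in r for r in rates)]

    def spread(t: str) -> float:
        values = [r[t] for r in rates]
        return max(values) - min(values)

    return max(shared, key=spread)
```

Here both groups pass screening at similar rates, so a top-of-funnel or screening-only view would miss the real drop between screening and interview.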

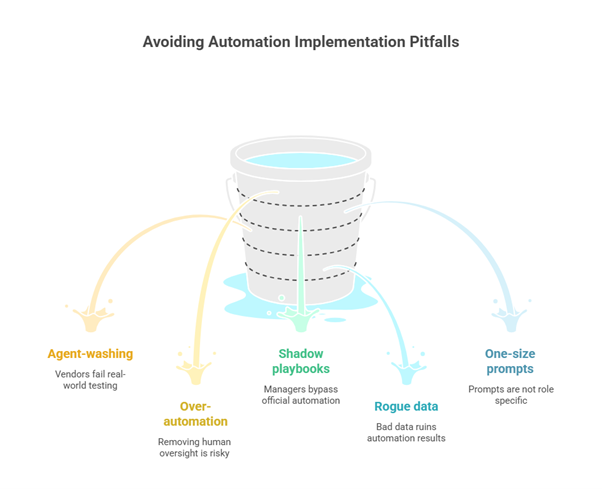

Common Pitfalls and How to Avoid Them

- Agent-washing. Ask vendors to demo end-to-end orchestration in your stack. No slides, no canned vendor demo environments: your tools, real data, supervised actions.

- Over-automation. Keep humans in approvals where stakes are high. Recruiters should never be surprised observers of their own process.

- Shadow playbooks. If managers bypass the system, figure out why. Fix friction or enforce policy.

- Rogue data. Audit what the agent reads and writes. Bad data upstream creates messy outcomes downstream.

- One-size prompts. Tune by role family. Hourly hiring and principal engineering demand different flows and tones.

A Glimpse of 2030 — Three Scenarios

- The Orchestrated Enterprise. Agents handle routine actions across HR, finance, IT, and operations. TA becomes the steward of talent strategy, not the owner of logistics. HRBPs sit closer to the business, translating workforce needs into agent-driven programs. Compliance lives inside the workflow, not in a separate binder.

- The Regulated Plateau. High-risk industries limit agent autonomy. Gains come from orchestration more than autonomous scoring. That’s fine. The win is predictable, auditable processes that treat candidates fairly and free humans from drudgery.

- The Human Premium. As automation spreads, what stands out is human interaction done well: clarity, empathy, and trust. Organizations that combine fast, fair agentic processes with genuine human touch build durable employer brands and healthier teams.

Across all three, the most valuable TA skill is the same: designing systems where people and machines make each other better.


FAQs

Q1: Will agentic systems replace recruiters?

No. They replace chores. Great recruiters get more time for the human work that actually improves outcomes — market sensing, stakeholder alignment, narrative, and judgment.

Q2: How do we control bias?

Measure it. Set policy on permitted features. Keep humans in approvals. Retrain and re-evaluate regularly. Publish summaries of what you track and fix.

Q3: Where do we start?

Pick roles with repetitive coordination and clear must-haves. Pilot on one business unit. Prove the win, then scale.

Q4: What if managers won’t use it?

Design for them. Shorten intake, automate scheduling, and send one-page candidate briefs. Pair convenience with accountability.

Q5: How do we justify the investment?

Track time-to-decision, hours saved, show rates, and first-year retention, then put a dollar value on each improvement. The business case writes itself.
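Putting a dollar value on each gain is simple arithmetic once you pick baselines. A sketch with two of the levers (recruiter hours saved and first-year retention); every input is a placeholder assumption, not a benchmark, and show rates or time-to-decision can be added the same way.

```python
# Every input is a placeholder assumption; replace with your own baselines.
recruiters = 20
hours_saved_per_recruiter_per_week = 6
loaded_cost_per_hour = 55.0
working_weeks_per_year = 46

hires_per_year = 400
first_year_retention_gain = 0.03      # e.g. 85% -> 88% of hires stay a year
replacement_cost_per_hire = 12_000.0  # cost to re-fill a role that churns

annual_tool_cost = 150_000.0

time_value = (recruiters * hours_saved_per_recruiter_per_week
              * loaded_cost_per_hour * working_weeks_per_year)
retention_value = hires_per_year * first_year_retention_gain * replacement_cost_per_hire
net_value = time_value + retention_value - annual_tool_cost
roi = net_value / annual_tool_cost

print(f"Time value: ${time_value:,.0f}")
print(f"Retention value: ${retention_value:,.0f}")
print(f"Net annual value: ${net_value:,.0f} (ROI {roi:.0%})")
```

The retention line counts churn avoided: with these placeholders, 12 fewer first-year departures at the assumed replacement cost each.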

The Evolution in One Page — A Handy Checklist

What changed across eras:

- Manual → Digital: from gut-feel and paper to searchable data

- Digital → SaaS: from local tools to standardized, scalable workflows

- SaaS → Assistive AI: from tracking activity to scoring signals

- Assistive → Agentic: from recommendations to responsible action

What stayed true:

- Hiring quality depends on clear definitions, consistent evaluation, and respect for candidates.

- Software amplifies both good design and bad habits.

- Governance is not a blocker; it’s how you go fast safely.

What to do next:

- Map the journey for three roles.

- Choose two friction points for the agent to own.

- Set guardrails and approval points.

- Pilot, measure, explain, repeat.

- Scale what works; sunset what doesn’t.

Conclusion: The Sharpest Knife, Used Wisely

Anthony Bourdain liked to say the knife doesn’t make the chef. It’s the hand on the handle (the judgment, the taste, the respect for ingredients) that turns steel into a meal. Hiring is the same. We’ve tried clipboards, mainframes, job boards, and machine learning. Each tightened a bolt. Each brought new risks. All of it pointed to the same truth: tools matter, but the way we use them matters more.

Agentic systems are the sharpest knife HR has ever held. They can cut through clutter, end the waiting, and treat candidates with dignity at speed. They can also do harm if left to chase numbers in a vacuum. The difference is leadership.

If you’re a talent acquisition leader, this is your moment. Define the guardrails. Choose the destinations. Let the agent drive the chores while you move your organization — calmly, deliberately — toward a future where hiring is faster, fairer, and unmistakably human.

Takeaway: Start small, design for trust, measure outcomes, and scale what works. The evolution has already happened. The question now is whether you’ll use it to build a better system — or let the old one keep deciding your future.
