Why AI in the Hiring Process Needs a Blueprint Now

Recruiting has always been a balancing act between speed and judgment. Companies proudly claim that people are their most important asset, yet their hiring processes often resemble assembly lines. Resumes are scanned in bulk, interviews are scheduled and rescheduled, candidates are left without feedback, and recruiters burn out managing repetitive administrative work.

Technology was supposed to fix this. Applicant Tracking Systems digitized the filing cabinet. Workflow platforms digitized the checklist. These tools made processes faster and cleaner—but not necessarily smarter. They improved efficiency, but they didn’t improve effectiveness.

AI changes the stakes. We are no longer just digitizing tasks. We are delegating decisions. Systems don’t just help recruiters track who applied; they influence who gets an interview, who is shortlisted, and in many cases, who gets hired. That transition, from filing to deciding, makes the conversation about AI in hiring fundamentally different. Understanding AI in the hiring process means recognizing how decision-making itself changes, not just which tools are in use.

Adoption is already here. SHRM Labs estimates that 35–45% of companies are using AI somewhere in their recruitment process. A 2025 Steering Point survey found that 99% of hiring managers rely on AI tools today, and 95% intend to expand their investment. The question is no longer if AI belongs in hiring stacks—it is how to use AI in hiring effectively without backfiring.

Many implementations fail. Amazon scrapped its AI recruiter after it consistently penalized women. Candidates report frustration when they discover late in the process that a machine was making decisions. Regulators in New York, Brussels, and Washington are tightening the rules: New York City mandates bias audits, the EU AI Act classifies hiring AI as high-risk, and the EEOC warns that employers are liable for disparate impact. Recruiters often override AI suggestions, creating shadow processes that undermine trust.

This is why you need a blueprint: a structured approach that blends technology with governance, recruiter enablement, and candidate experience. Not hype, not a list of tools—but a practical roadmap for making AI in hiring both effective and sustainable.

From Punch Cards to Generative Agents: A Short History

Understanding how artificial intelligence in hiring evolved helps explain why governance and design matter so much today.

1970s–1990s: The Filing Era

The first Applicant Tracking Systems (ATS) were essentially digital filing cabinets. They logged names, tracked applications, and offered the earliest glimpse of digitization. By the late 1990s, keyword scanning became mainstream. Candidates were filtered by whether their resumes contained specific terms from job descriptions. This sped up recruiter workflows but created the infamous “black hole” where qualified applicants vanished without human review.

2000s–2010s: The Workflow Era

By the early 2000s, ATS adoption became standard in large firms. Systems evolved to manage interview pipelines, auto-emails, and scheduling. They organized the chaos but didn’t solve the central problem: identifying the best candidates.

The 2010s brought the first AI wave, the real beginning of AI in the recruitment process. Chatbots handled FAQs. Predictive analytics promised better shortlists. Automated video interview assessments emerged. Unilever, for example, rolled out HireVue’s AI video interviews from 2017 to 2019, saving 70,000 recruiter hours and £1 million (approximately $1.3 million) in recruiting costs annually during the initial global deployment. At the same time, risks became evident. Amazon abandoned its AI recruiter when it systematically downgraded women’s resumes, a textbook case of bias in training data.

2020s: The Agentic Era

Today, we are in the age of agentic AI—semi-autonomous systems that don’t just assist recruiters but act on their behalf. Generative AI writes job postings. AI scheduling agents book interviews. OECD reports highlight AI applications across the full funnel: sourcing, screening, engagement, interviewing, and predictive workforce planning. The Times described them as “downloadable recruiters.”

The shift is profound. Earlier systems tracked and organized. Now AI decides. That makes fairness, transparency, and accountability as critical as speed.

Why Implement AI in Your Hiring Stack?

AI’s value goes beyond saving time. Done well, using AI in recruitment reshapes outcomes across efficiency, quality, candidate experience, and strategy.

Efficiency and Speed

Paradox case studies show that conversational AI can reduce recruiter workload by up to 60% in high-volume hiring environments, significantly lowering operational costs and accelerating frontline hiring. Speed comes with a condition, though: a March 2025 New York Times investigation found that candidates feel significantly more alienated and less trusting when AI screening tools are used but not disclosed until late in the process.

Quality of Hire and Retention

Better hires are as important as faster hires. A 2024 peer-reviewed longitudinal study of 12 enterprise deployments found that employees hired through AI-augmented processes showed 22% higher retention over the first two years compared to traditional hiring methods. Quality of match improves when AI surfaces candidates based on skills and signals beyond keyword matches.

Candidate Experience

Poor experiences damage brands. Chatbots offer instant answers. Generative AI tailors communication. A 2025 Frontiers in Psychology meta-analysis found that disclosing AI use and explaining its role increases perceived procedural fairness by 20–30%, even when candidates are rejected. Candidates may be skeptical of algorithms, but they value honesty and responsiveness.

Strategic Workforce Planning

AI hiring isn’t just transactional—it feeds strategic intelligence. McKinsey highlights AI’s ability to forecast attrition and identify skills gaps. KPMG calls for “total workforce planning” that treats human and AI agents as complementary resources.

These outcomes highlight the core benefits of AI in recruitment, proving it’s as much a strategic advantage as an operational one.

The AI Hiring Stack Today: Where Tools Fit

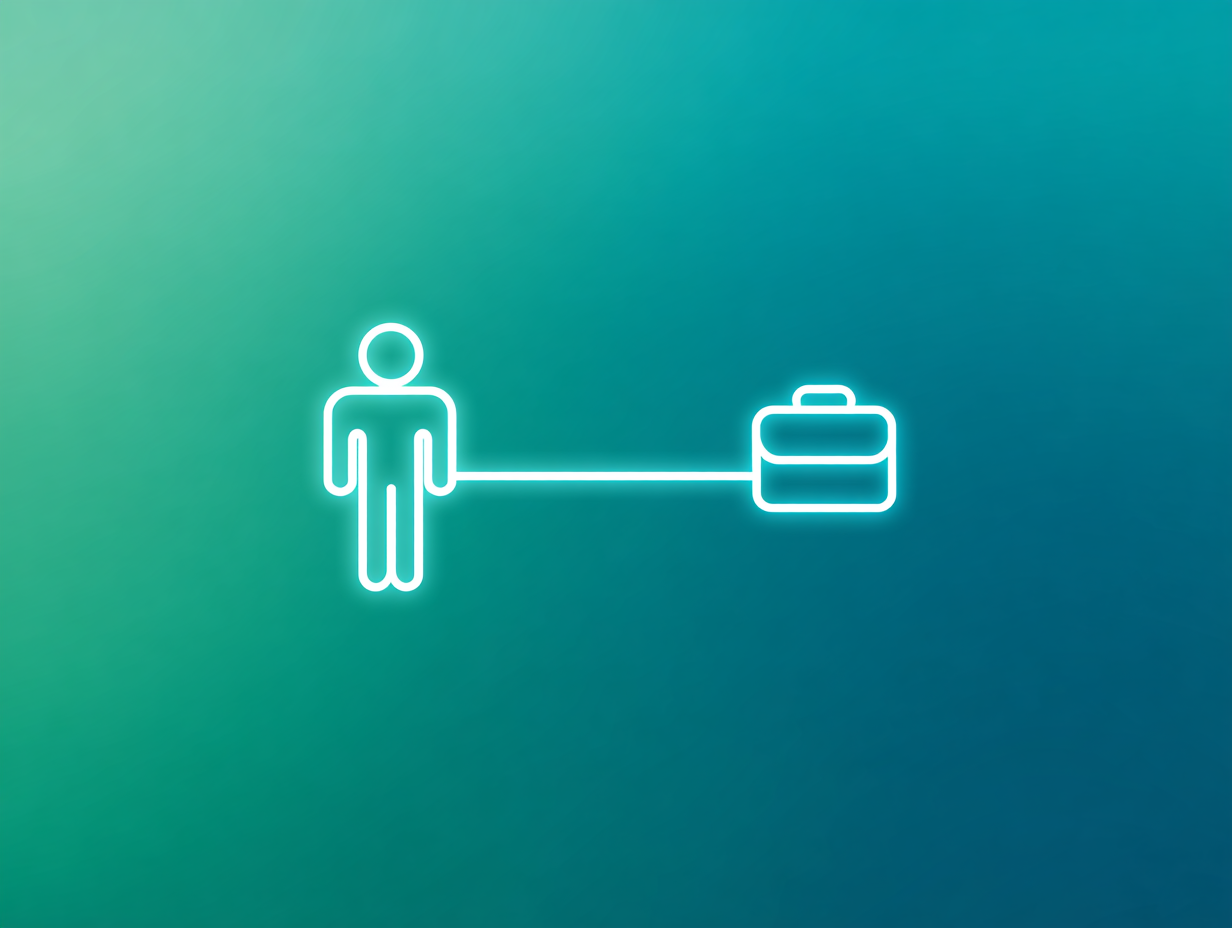

The modern hiring funnel is infused with AI at every stage:

Sourcing & Discovery: Rediscovery engines resurface past applicants. AI scrapes passive candidates from LinkedIn, GitHub, and niche forums.

Screening & Ranking: ATS parsers and LLMs score resumes. University of Washington research warns these systems can encode race and gender bias.

Engagement & Communication: Chatbots provide instant responses. GAN-based pilots even deliver real-time resume feedback.

Assessment & Interviewing: Video analysis tools score communication and soft skills. But Wired reported vendors abandoning facial analysis after fairness concerns.

Scheduling & Workflow Automation: AI schedulers coordinate interviews without human intervention.

Job Descriptions & Outreach: Generative AI drafts postings, flags biased language, and tailors outreach to candidates.

Predictive Analytics & Workforce Planning: AI forecasts attrition and succession risks, feeding executive workforce planning.

Challenges & Pitfalls: Where AI Goes Wrong

Every benefit has a shadow side. AI bias in hiring carries serious risks if not implemented carefully.

Bias Inheritance: Amazon’s AI penalized women. Melbourne researchers found 22% transcription error rates for accented speech. Generative AI audits show male candidates disproportionately favored—clear signals of AI discrimination in hiring when datasets and models aren’t controlled.

Over-Filtering: HBS/Accenture’s Hidden Workers study revealed millions excluded by rigid algorithms penalizing resume gaps or caregiving breaks.

Data Quality: Messy ATS data creates messy AI outputs. Algorithms magnify noise.

Integration Problems: Many legacy ATS lack APIs, isolating AI tools.

Recruiter Resistance: When recruiters don’t trust AI, they override it—nullifying adoption.

Candidate Discomfort: TIME (2025) reported candidates feel alienated when AI involvement is undisclosed.

Regulatory Risk: NYC mandates pre-use bias audits. The EU AI Act classifies HR AI as “high-risk.” The EEOC warns employers are liable for disparate impact.

Addressing AI bias in hiring requires transparent data, regular audits, and accountable oversight.
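
One check that such an audit might include is an impact ratio: each group's selection rate divided by the highest group's selection rate. The sketch below is a minimal illustration, assuming screening outcomes are available as (group, selected) pairs. The function names and sample data are hypothetical, and the 0.8 default is the four-fifths rule of thumb from US EEOC guidance, used here as a screening signal rather than a legal test.

```python
from collections import Counter


def impact_ratios(records):
    """Return {group: selection_rate / highest_selection_rate}.

    `records` is an iterable of (group, was_selected) pairs.
    """
    applied = Counter()
    selected = Counter()
    for group, was_selected in records:
        applied[group] += 1
        if was_selected:
            selected[group] += 1

    rates = {group: selected[group] / applied[group] for group in applied}
    if not rates:
        return {}
    best = max(rates.values())
    if best == 0:
        # Nobody was selected, so no group is favored over another.
        return {group: 0.0 for group in rates}
    return {group: rate / best for group, rate in rates.items()}


def flag_groups(ratios, threshold=0.8):
    """List groups whose impact ratio falls below the threshold (assumed 0.8)."""
    return sorted(group for group, ratio in ratios.items() if ratio < threshold)


if __name__ == "__main__":
    # Hypothetical data: 10 applicants per group, 5 and 3 advanced.
    sample = (
        [("A", True)] * 5 + [("A", False)] * 5
        + [("B", True)] * 3 + [("B", False)] * 7
    )
    ratios = impact_ratios(sample)
    print(ratios)
    print(flag_groups(ratios))
```

A flagged group is a prompt for human investigation, not proof of discrimination; small sample sizes in particular can swing the ratio sharply.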

The Step-by-Step Blueprint for Implementation

Here is the roadmap to do AI right—turning aspiration into AI-driven recruitment that scales.

Step 1: Define Goals & Audit the Process

Identify bottlenecks—resume overload, candidate drop-offs, scheduling delays. Survey recruiters and map candidate journeys. Audit fairness: who gets excluded too early?

Step 2: Start Small with a Pilot

Choose one high-pain, low-risk area like scheduling. Run a 90-day pilot. Define success: faster cycle times, higher satisfaction, reduced bias.
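
Defining success is easier when the target is written down as a number before the pilot starts. The sketch below is one way to express a cycle-time target, assuming time-to-schedule is recorded in days for a baseline period and for the pilot. The function names, the sample figures, and the 20% reduction target are all hypothetical.

```python
from statistics import median


def cycle_time_change(baseline_days, pilot_days):
    """Relative change in median cycle time (negative means faster)."""
    if not baseline_days or not pilot_days:
        raise ValueError("both periods need at least one observation")
    base = median(baseline_days)
    if base == 0:
        raise ValueError("baseline median is zero; relative change is undefined")
    return (median(pilot_days) - base) / base


def pilot_succeeded(baseline_days, pilot_days, target_reduction=0.20):
    """True if the median cycle time dropped by at least `target_reduction`."""
    return cycle_time_change(baseline_days, pilot_days) <= -target_reduction


if __name__ == "__main__":
    baseline = [10, 12, 14]  # hypothetical days-to-schedule before the pilot
    pilot = [8, 9, 10]       # hypothetical days-to-schedule during the pilot
    print(cycle_time_change(baseline, pilot))
    print(pilot_succeeded(baseline, pilot))
```

The median is used instead of the mean so that one stalled requisition does not dominate the result; satisfaction and bias measures would need their own targets alongside it.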

Step 3: Select the Right Tools & Ensure Integration

Demand ATS compatibility and open APIs. Insist on explainability and vendor bias audits—critical for trustworthy AI-automated recruiting.

Step 4: Secure Stakeholder Buy-In & Align with Compliance

Draft an AI in Hiring Policy. Align with EEOC guidance, NYC Local Law 144, and the EU AI Act. Involve Legal, DEI, and Compliance early.

Step 5: Train Recruiters as AI Supervisors

Recruiters evolve from resume readers to supervisors of AI output. Train them in bias detection, dashboard interpretation, and ethical oversight.

Step 6: Integrate, Test & Shadow Run

Sandbox AI with historical data. Run in parallel with recruiters. Compare and calibrate.
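
The compare-and-calibrate step can be made concrete with an agreement report. The sketch below assumes the AI and the recruiters each produced one decision per candidate, in the same order, during the shadow run. It reports raw agreement, Cohen's kappa (agreement corrected for chance), and the positions where the two disagreed. The function name and the decision labels are hypothetical.

```python
def agreement_report(ai_decisions, recruiter_decisions):
    """Compare two equally long lists of decisions for the same candidates."""
    if not ai_decisions or len(ai_decisions) != len(recruiter_decisions):
        raise ValueError("need two non-empty lists of equal length")

    n = len(ai_decisions)
    pairs = list(zip(ai_decisions, recruiter_decisions))

    # Share of candidates where both sides made the same call.
    observed = sum(ai == human for ai, human in pairs) / n

    # Agreement expected by chance, from each side's label frequencies.
    labels = set(ai_decisions) | set(recruiter_decisions)
    expected = sum(
        (ai_decisions.count(label) / n) * (recruiter_decisions.count(label) / n)
        for label in labels
    )

    # Kappa is undefined when chance agreement is 1 (both sides used a
    # single identical label); treat that case as perfect agreement.
    kappa = 1.0 if expected == 1 else (observed - expected) / (1 - expected)

    disagreements = [i for i, (ai, human) in enumerate(pairs) if ai != human]
    return {"agreement": observed, "kappa": kappa, "disagreements": disagreements}


if __name__ == "__main__":
    ai = ["advance", "advance", "reject", "reject"]
    recruiter = ["advance", "reject", "reject", "reject"]
    print(agreement_report(ai, recruiter))
```

The disagreement indices are usually the most valuable output: reviewing those cases one by one shows whether the model or the recruiter was missing something.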

Step 7: Deploy, Monitor & Iterate

Launch with real-time dashboards. Capture recruiter overrides (“why did you disagree with the AI?”). Feed those insights into retraining. Audit quarterly.
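
Capturing overrides only helps if each one is stored with its reason in a form that can be counted. The sketch below shows one possible record shape and summary; the class, field names, and sample reasons are hypothetical and not tied to any particular ATS.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Review:
    """One recruiter review of an AI recommendation (hypothetical schema)."""
    candidate_id: str
    ai_recommendation: str
    recruiter_decision: str
    override_reason: Optional[str] = None

    @property
    def is_override(self) -> bool:
        return self.ai_recommendation != self.recruiter_decision


def override_summary(reviews):
    """Return (override_rate, Counter of reasons) for a list of reviews."""
    overrides = [review for review in reviews if review.is_override]
    rate = len(overrides) / len(reviews) if reviews else 0.0
    reasons = Counter(review.override_reason or "unspecified" for review in overrides)
    return rate, reasons


if __name__ == "__main__":
    log = [
        Review("c1", "reject", "advance", "relevant experience missed"),
        Review("c2", "advance", "advance"),
        Review("c3", "reject", "advance"),
        Review("c4", "reject", "reject"),
    ]
    rate, reasons = override_summary(log)
    print(rate)
    print(reasons.most_common())
```

A rising override rate or a cluster of identical reasons is the signal to bring into the quarterly audit and the next retraining cycle.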

The Backbone Infrastructure

AI success rests on the plumbing behind the tools.

Data Architecture: Structured and unstructured data, embeddings, and vector databases underpin candidate matching. Retrieval-augmented generation (RAG) pipelines are becoming essential.

Interoperability: Without APIs, adoption stalls. Workday, Oracle, and Greenhouse must integrate smoothly—table stakes for any artificial intelligence recruitment software.

Bias Auditing Pipelines: Dashboards monitor demographic distributions in real time to detect AI bias in recruitment and guide remediation.

Privacy & Security: GDPR compliance, data minimization, and retention rules are critical for candidate trust, and must be native to enterprise-grade artificial intelligence recruitment software.
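
To illustrate the embedding-based matching mentioned under Data Architecture: once a job description and each candidate profile have been turned into vectors, matching reduces to ranking by similarity. The sketch below uses cosine similarity in plain Python, assuming the embeddings already exist. The function names and the toy two-dimensional vectors are hypothetical; a production system would typically delegate this search to a vector database.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        # A zero vector has no direction; treat it as unrelated.
        return 0.0
    return dot / (norm_a * norm_b)


def rank_candidates(job_vector, candidate_vectors, top_k=3):
    """Return the top_k (candidate_id, score) pairs, best match first."""
    scored = [
        (candidate_id, cosine_similarity(job_vector, vector))
        for candidate_id, vector in candidate_vectors.items()
    ]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_k]


if __name__ == "__main__":
    job = [1.0, 0.0]  # toy embedding of a job description
    candidates = {
        "c1": [1.0, 0.0],
        "c2": [0.0, 1.0],
        "c3": [1.0, 1.0],
    }
    for candidate_id, score in rank_candidates(job, candidates):
        print(candidate_id, round(score, 3))
```

Similarity scores inherit whatever bias the embedding model carries, so ranked output of this kind still belongs inside the bias auditing pipeline described above.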

Human-AI Synergy: Redefining the Recruiter

AI will not replace recruiters—but recruiters who master AI will replace those who don’t.

The role is shifting:

- From resume sifting to supervising AI outputs.

- From scheduling logistics to engaging candidates.

- From process managers to bias watchdogs.

Skills needed now:

- Data literacy

- Bias awareness

- AI navigation

Recruiters who embrace these thrive. Those who don’t risk being sidelined.

Global & Cross-Cultural Dimensions

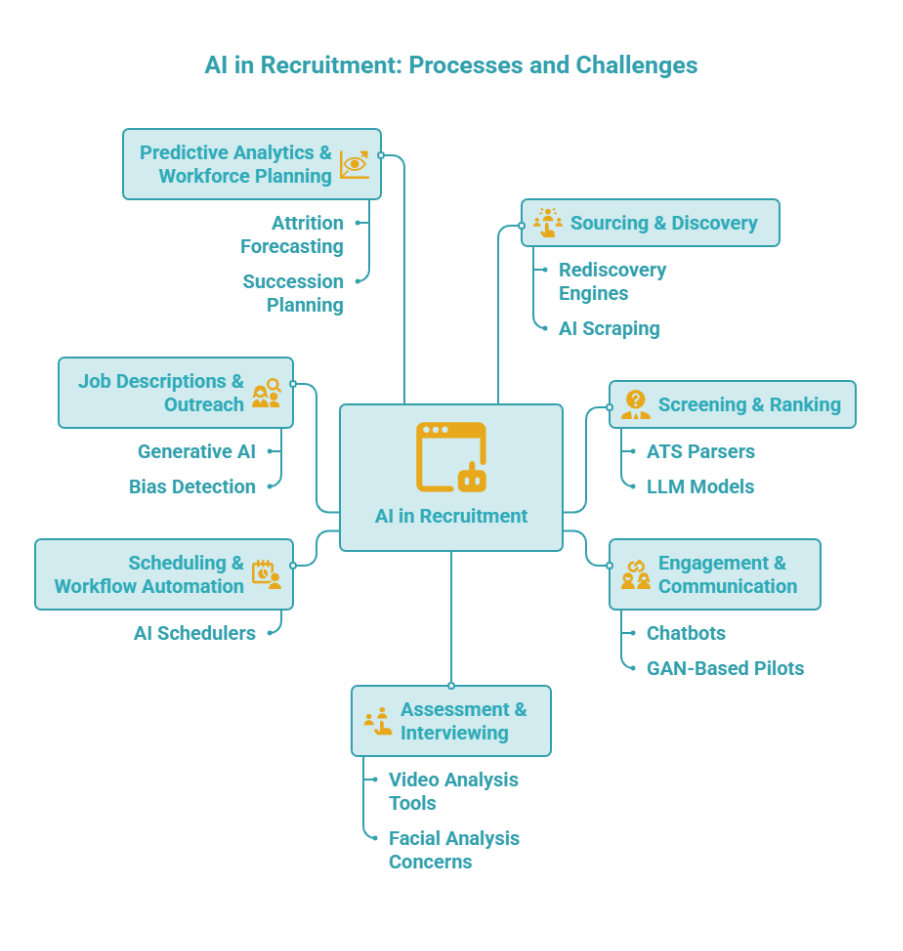

AI adoption looks different worldwide.

EU: Regulation-first. AI is “high-risk” under the EU AI Act. Compliance slows adoption.

Asia: Adoption-first. India and Singapore deploy quickly with regulation lagging.

US: Innovation-first. Tools spread fast, and compliance frameworks follow behind.

ROI Beyond Cost

AI’s true ROI lies beyond faster hiring.

- Retention: +15% in year one, +10% in year two.

- Diversity: Balanced shortlists improve fairness if audited.

- Workforce Intelligence: AI forecasts attrition and strengthens succession pipelines.

The Future: From Tools to Agents

Generative AI: Personalized job previews and onboarding journeys.

Agentic AI: Semi-autonomous recruiters sourcing, scheduling, and engaging.

Skills-first hiring: Degrees replaced by skill signals.

Global Standards: Certification frameworks to enforce responsible AI.

These AI hiring trends point toward truly AI-powered recruitment, where systems operate with more autonomy but remain governed by transparent human oversight.

FAQs

Q1: What is the role of AI in the hiring process?

AI automates sourcing, screening, and interview coordination, helping recruiters make data-driven decisions while improving fairness and speed. That combination is central to the modern AI-assisted hiring process.

Q2: How to use AI in hiring effectively?

Start small with a pilot, ensure clean data, demand explainability, and keep humans in the loop—this is how to use AI in hiring responsibly.

Q3: Can AI reduce bias in recruitment?

Yes, if trained on representative data and audited continuously. Strong governance reduces AI bias in recruitment while preserving fairness.

Q4: What are the benefits of using AI in recruitment?

Faster time-to-hire, better matches, improved diversity, and richer workforce insights are core benefits of AI in recruitment.

Q5: Do AI-powered interview platforms improve candidate experience?

Used transparently, they speed scheduling, offer instant updates, and personalize interactions: hallmarks of AI-powered recruitment that enhance candidate experience.

Conclusion: Building a Hiring Stack That Lasts

AI in hiring isn’t about buying shiny tools—it’s about reshaping strategy.

The blueprint is clear: Audit → Pilot → Select → Govern → Train → Integrate → Monitor → Iterate.

Do it right, and AI becomes a partner—delivering faster cycles, stronger hires, and more inclusive processes. Do it wrong, and you inherit bias, alienate candidates, and invite regulators.

The future isn’t machines replacing people. It’s machines and people working together. Recruiters who master that balance will define tomorrow’s talent market.
