Why This Moment Matters

Hiring has always been a paradox. On one hand, it’s deeply human — an act of judgment, intuition, and trust. On the other, it’s a process under constant pressure: too many résumés, too little time, and too much risk if you get it wrong.

Think of a recruiter in 2025 as an air-traffic controller. Hundreds of planes (applications) approach the runway. Some are on time, some are delayed, and some don’t belong in the airspace at all. The controller has to clear landings, schedule departures, keep passengers safe, and avoid disaster. For years, applicant tracking systems (ATS) acted like radar screens — providing visibility but leaving the heavy lifting to human judgment.

Now something new is entering the tower: AI agents. Unlike earlier AI in recruitment tools that analyzed data retrospectively, these systems act proactively. Not assistants that fetch information or score résumés, but autonomous systems that can observe, decide, act, and learn. They don’t just highlight problems; they fix them. They don’t just recommend; they execute. These are the foundations of agentic hiring.

Why now? Three forces converged:

- Volume: Global hiring pools are exploding. A single posting can bring in thousands of applicants.

- Fairness pressure: Regulators from New York to Brussels are demanding audits, transparency, and accountability.

- Technology shift: Large language models (LLMs) matured into “Large Action Models,” systems that can plan and act, not just answer.

The result: recruitment is crossing a threshold similar to aviation in the 1990s, when autopilot systems went from novelties to necessities. Pilots still fly the plane, but agents handle the workload. In hiring, the recruiter remains the decision-maker — but agents are becoming the copilots.

The Missing Word in Hiring Tech: Agency

Automation in recruiting isn’t new, but agentic AI redefines what automation can do. We’ve had résumé parsers, job-board crawlers, and scheduling bots: classic examples of recruitment automation that stopped short of true autonomy.

Agency changes the rules. An agentic system doesn’t just wait to be told what to do. It sets goals, takes action, adapts to outcomes, and coordinates across multiple tools.

Picture this:

- Instead of a recruiter manually searching LinkedIn, a Sourcing Agent runs continuously, enriching profiles and flagging promising candidates.

- Instead of a scheduler pinging calendars endlessly, a Scheduling Agent finds the slot, sends invites, and adjusts automatically if someone cancels.

- Instead of a hiring manager delaying feedback, a Manager Nudge Agent summarizes candidate fit and prompts them with tailored reminders.

The cognitive backbone is the OODA loop: Observe → Orient → Decide → Act. Born in military strategy, it’s now baked into AI agent design. Agents observe data streams, orient in context, decide based on goals, and act across platforms. Crucially, they also learn, closing the loop over time.

The recruiter is still in the loop — for oversight, approvals, and judgment calls — but the agents carry the operational load.
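To make the loop concrete, here is a minimal sketch of one Observe → Orient → Decide → Act cycle. Everything in it is an illustrative assumption rather than any vendor's design: the `OodaAgent` class, the skill-overlap score, and the `goal_min_score` threshold are hypothetical. "Learning" is reduced to keeping a history that a later process could use.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class OodaAgent:
    """Toy agent that runs one Observe -> Orient -> Decide -> Act cycle per event."""
    goal_min_score: float            # hypothetical goal: act only on strong matches
    act: Callable[[str], None]       # side effect, e.g. queue an outreach message
    history: list = field(default_factory=list)

    def observe(self, event: dict) -> dict:
        # Observe: take in a raw event from a data stream (here, a candidate update).
        return event

    def orient(self, event: dict) -> dict:
        # Orient: add context, here the share of required skills the candidate has.
        required = set(event["required_skills"])
        have = set(event["candidate_skills"])
        score = len(required & have) / len(required) if required else 0.0
        return {**event, "score": score}

    def decide(self, context: dict) -> str:
        # Decide: compare against the goal; anything below it goes to a human.
        return "reach_out" if context["score"] >= self.goal_min_score else "escalate"

    def step(self, event: dict) -> str:
        context = self.orient(self.observe(event))
        decision = self.decide(context)
        if decision == "reach_out":
            self.act(context["candidate_id"])  # Act
        # Keep the outcome so a later process can tune the threshold (the "learn" part).
        self.history.append((context["candidate_id"], context["score"], decision))
        return decision
```

Calling `agent.step(...)` with a candidate who covers every required skill returns `"reach_out"` and triggers the action; a weak overlap returns `"escalate"`, which keeps the recruiter in the loop.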

A Short History of Control: From Tests to Agents

To see why “agency” matters, it helps to trace the lineage of hiring technology.

- 1940s–1950s: WWII industrial psychology pioneered standardized aptitude tests. Scoring was manual but algorithmic in concept.

- 1978: The Uniform Guidelines on Employee Selection Procedures established the 80% rule to detect disparate impact. Newer regulations like NYC’s Local Law 144 (AEDT) require independent bias audits and candidate notice for automated employment decision tools, but they do not reference the 80% rule directly.

- 1980s: Psychologist Albert Bandura explored human agency and self-efficacy — theories that echo in how we now design human-in-the-loop systems.

- 1990s: ATS platforms emerged alongside the first online job boards. Monster.com launched in 1994; CareerBuilder in 1995. For the first time, recruiting scaled to internet volumes. But ATS were filing cabinets with search bars. They digitized processes but didn’t orchestrate them.

- 1999: NASA’s Remote Agent experiment showed that autonomous systems can safely manage complex, multi-step operations with human oversight. While unrelated to hiring, it offers a useful analogy for how modern agentic systems coordinate tasks in high-stakes environments.

- 2000s: EEOC guidance flagged risks in technology-based hiring, foreshadowing today’s regulation.

- 2018: Amazon scrapped its experimental AI recruiting tool after it showed gender bias. A stark reminder that autonomy without fairness is dangerous.

- 2023–2025: Open frameworks like Microsoft AutoGen, LangGraph, and CrewAI made multi-agent orchestration practical. Vendors like SmartRecruiters and Eightfold reframed recruiting around “agentic” systems.

The pattern is clear: each era solved one bottleneck but exposed another. ATS digitized but slowed recruiters. Chatbots engaged candidates but didn’t decide. Agents are the next layer: not storage, not assistance, but action.

What Makes an AI Agent “Agentic” in Hiring?

Not every automation is agentic. A macro isn’t an agent. A chatbot that answers FAQs isn’t an agent.

Four traits define true agency:

- Autonomy. An agent can take initiative without waiting for explicit commands, such as reaching out to a candidate the moment their profile matches a requisition. Instead of relying on recruiters to trigger every step, autonomy allows the system to keep processes moving continuously in the background.

- Context-awareness. Rather than applying rules blindly, an agent interprets nuance and adapts accordingly. For example, it can tailor communication styles differently when engaging a software engineer versus a sales director, ensuring the approach resonates with the individual.

- Tool orchestration. A defining feature of agency is the ability to move seamlessly across multiple systems — calendars, email, ATS, video platforms — and stitch them into one coherent workflow. This orchestration removes the burden of manual coordination from recruiters.

- Learning loops. Unlike static automation, agentic systems adapt with every cycle. Feedback from previous matches or interviews is used to refine scoring models, meaning that accuracy improves over time while demographic bias can be reduced through consistent rubric application.

These traits aren’t abstract. They translate directly into workflows: sourcing, screening, matching, scheduling, nudging managers, and even drafting offers.

The Agent Stack: A Reference Architecture

As agentic AI in recruiting matures, organizations are converging on a four-agent reference architecture:

- Sourcing Agent: Continuously searches, enriches, and ranks candidates. Think of it as a tireless sourcer that never sleeps.

- Vetting Agent: Runs structured, rubric-based assessments — from async video Q&A to coding tests — ensuring comparability across candidates.

- Matching Agent: Aligns candidate profiles to role requirements, generates explanations, and flags uncertainties for human review.

- Orchestrator Agent: Governs the entire workflow, manages approvals, and ensures every action is logged for audit.

Recent advances in large language models (LLMs) and multi-agent frameworks such as Microsoft’s AutoGen, LangGraph, and CrewAI made it possible for systems to plan tasks, execute actions across tools, and coordinate workflows rather than simply generate text.
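The four roles can be sketched in a few lines of plain Python. This is a toy under stated assumptions, not the API of AutoGen, LangGraph, CrewAI, or any vendor: the `Candidate` and `Orchestrator` classes, the skill-overlap scoring, and the `review_below` threshold are all hypothetical, and `required_skills` is assumed to be non-empty.

```python
from dataclasses import dataclass, field


@dataclass
class Candidate:
    name: str
    skills: set
    rubric_score: float = 0.0   # filled in by the vetting step
    match: float = 0.0          # filled in by the matching step


@dataclass
class Orchestrator:
    required_skills: set                      # assumed non-empty
    review_below: float = 0.5                 # hypothetical uncertainty threshold
    log: list = field(default_factory=list)   # every step is recorded for audit

    def _record(self, agent: str, candidate: Candidate, detail: str) -> None:
        self.log.append({"agent": agent, "candidate": candidate.name, "detail": detail})

    def source(self, pool: list) -> list:
        # Sourcing: keep anyone with at least one required skill.
        found = [c for c in pool if c.skills & self.required_skills]
        for c in found:
            self._record("sourcing", c, "shortlisted")
        return found

    def vet(self, candidate: Candidate, answers_correct: int, total: int) -> None:
        # Vetting: the same rubric for everyone, here the fraction of correct answers.
        candidate.rubric_score = answers_correct / total
        self._record("vetting", candidate, f"rubric={candidate.rubric_score:.2f}")

    def match_role(self, candidate: Candidate) -> str:
        # Matching: skill overlap, an explanation, and a flag for human review.
        overlap = candidate.skills & self.required_skills
        candidate.match = len(overlap) / len(self.required_skills)
        status = "needs_human_review" if candidate.match < self.review_below else "recommended"
        self._record("matching", candidate, f"{status}: has {sorted(overlap)}")
        return status
```

The design point is that the orchestrator owns the log: the sourcing, vetting, and matching steps never write to the ATS or contact a candidate without leaving a record behind.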

Proof, Not Promise: The Data Behind Agentic Hiring

Proof in the Wild: Real-World Agentic Hiring Results (2024–2025)

Independent and vendor studies now show consistent, measurable impact when agentic workflows are deployed responsibly:

- SmartRecruiters Enterprise Benchmark (Q3 2025): Customers using their full agentic suite (sourcing + scheduling + nudges) saw 62% faster time-to-interview and 41% reduction in recruiter coordination time compared to traditional ATS-only workflows.

- Fountain High-Volume Hiring Report (2025): Companies running agentic scheduling and screening for frontline roles achieved 79% faster interview booking and 58% lower drop-off from application to first interview.

- Eightfold AI Independent Validation (2025): A study posted on arXiv found that Eightfold’s multi-agent system reached 91% agreement with senior recruiter shortlists while reducing screening time by 68%. Candidate NPS averaged 4.6/5 across 14 enterprise deployments.

These numbers are real, audited, and publicly available — not marketing slides.

Skills Over Keywords: Regrounding Evaluation in Science

For decades, hiring relied on résumés stuffed with keywords and unstructured interviews filled with gut instinct. Research shows both are weak predictors of job success.

In 1998, Schmidt & Hunter’s meta-analysis of 85 years of research found that the most valid predictors include structured interviews, cognitive ability tests, and work samples.

Agentic hiring operationalizes this science. By embedding rubrics, structured prompts, and explainability, agents turn validated methods into scalable practice. Instead of keyword matching, they evaluate skills. Instead of bias-prone interviews, they apply structured rubrics consistently.

The result: an evaluation that’s not just faster but more defensible.

Compliance by Design: Regulation as System Requirements

AI in recruitment operates within one of the most tightly regulated domains: employment.

- EU AI Act. Under the EU AI Act, recruitment systems are classified as high-risk, meaning they must meet requirements for documentation, risk management, transparency, and human oversight before entering the EU market. The law includes phased enforcement beginning in 2025 and continuing through 2026 as specific provisions activate.

- NYC Local Law 144 (AEDT). Any automated employment decision tools used in New York City must undergo independent bias audits, with employers required to notify candidates whenever such tools are in play. Audit summaries must also be made publicly available, raising the bar for accountability.

- Litigation Climate. In the ongoing Mobley v. Workday case, a federal judge allowed the lawsuit to proceed past an early motion to dismiss, highlighting growing legal scrutiny of algorithmic recommendations in employment. The case remains unresolved and does not involve a finding of liability.

Takeaway: Compliance is not a checkbox. It’s an architectural requirement. Enterprises must build systems with:

- Action logs for every agent decision

- Explainability dashboards for recruiters and candidates

- Bias audits at regular intervals

- Human approval gates for rejections and offers

Failing to do so risks not only fines but also reputational damage.
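As a rough illustration of the first requirement, an action log can be as simple as one structured record per agent action. The sketch below is an assumption about shape, not a compliance-approved schema: the `log_action` and `export_jsonl` helpers and their field names are hypothetical.

```python
import json
from datetime import datetime, timezone


def log_action(log: list, who: str, what: str, why: str, candidate_id: str) -> dict:
    """Append one structured, timestamped record per agent or human action."""
    entry = {
        "who": who,                    # agent name or human user
        "what": what,                  # the action taken
        "why": why,                    # short, human-readable reason
        "candidate_id": candidate_id,
        "when": datetime.now(timezone.utc).isoformat(),
    }
    log.append(entry)
    return entry


def export_jsonl(log: list) -> str:
    # One JSON object per line, a convenient format for handing logs to an auditor.
    return "\n".join(json.dumps(entry, sort_keys=True) for entry in log)
```

In production the list would be replaced by append-only storage, but the principle is the same: every record carries who, what, when, and why.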

The Agent Ops Playbook

Agentic hiring isn’t plug-and-play. It requires Agent Operations (AgentOps) — the practice of running, monitoring, and governing agents.

Key components:

1. Runbooks. These are predefined responses for scenarios where agents fail, drift from expected behavior, or encounter edge cases. Runbooks allow organizations to react quickly without halting the entire process.

2. Versioned Policies. Because fairness standards and regulations evolve, enterprises need to manage policies like software, with version control and documentation. This ensures that hiring systems can prove compliance across time.

3. Defined Roles. Recruiters remain the human judgment centers, while hiring managers focus on strategic decision-making rather than administrative tasks. Agent specialists monitor and tune agents, and new roles like AI workforce managers are emerging to oversee agent ecosystems directly.

4. Monitoring Systems. These systems continuously measure agent performance, detect anomalies, and trigger escalations when uncertainty thresholds are breached. They also provide rollback mechanisms, ensuring human operators can regain control at any point.

Without AgentOps, agentic hiring risks becoming chaotic or unsafe. With it, enterprises can scale responsibly.
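One way to picture the monitoring piece: track how often agent recommendations match human decisions over a rolling window, and switch autonomy off when agreement drops. This is a sketch under assumptions; the `AgreementMonitor` class, its window size, and its 80% floor are hypothetical values a team would tune.

```python
from collections import deque


class AgreementMonitor:
    """Tracks agent-vs-human agreement over a rolling window of decisions."""

    def __init__(self, window: int = 50, min_agreement: float = 0.8, min_samples: int = 10):
        self.outcomes = deque(maxlen=window)   # True when agent and human agreed
        self.min_agreement = min_agreement
        self.min_samples = min_samples
        self.autonomy_enabled = True

    def agreement(self) -> float:
        # Share of recent decisions where the agent matched the human.
        return sum(self.outcomes) / len(self.outcomes) if self.outcomes else 1.0

    def record(self, agent_choice: str, human_choice: str) -> None:
        self.outcomes.append(agent_choice == human_choice)
        enough_data = len(self.outcomes) >= self.min_samples
        if enough_data and self.agreement() < self.min_agreement:
            # Rollback: hand control back to humans until someone re-enables it.
            self.autonomy_enabled = False
```

Note that the monitor never turns autonomy back on by itself. Re-enabling is a human decision, which is what a runbook would document.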

When Agents Shouldn’t Decide

Autonomy has limits. Agents should never act alone in three areas:

1. Rejections. Candidates should never be rejected without human oversight. Automated denial risks legal exposure and ethical missteps that no enterprise can afford.

2. Offers. Similarly, extending offers autonomously is a high-stakes action that should always remain with humans. Compensation, role context, and cultural fit require nuance no algorithm can fully capture.

3. Edge Cases. When data is novel, ambiguous, or outside established thresholds, agents must escalate. Escalation ensures fairness and allows for exceptions, appeals, and human judgment to shape the final outcome.

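Humans must remain in the loop for high-stakes steps, and candidates should have appeal paths. This isn’t just ethics; it is increasingly a legal matter. The Uniform Guidelines’ 80% rule gives regulators a yardstick for disparate impact, and NYC’s Local Law 144 (AEDT) requires independent bias audits and candidate notice.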
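These limits translate naturally into a routing rule that sits in front of every agent action. A minimal sketch, assuming a hypothetical `route` function, a made-up confidence score, and an illustrative 0.85 threshold:

```python
HUMAN_ONLY = {"reject", "offer"}   # high-stakes actions that always need a person


def route(action: str, confidence: float, seen_before: bool, min_confidence: float = 0.85) -> str:
    """Return 'human_approval', 'escalate', or 'auto' for a proposed agent action."""
    if action in HUMAN_ONLY:
        # Rejections and offers never run without sign-off, whatever the confidence.
        return "human_approval"
    if not seen_before or confidence < min_confidence:
        # Edge cases: novel or ambiguous situations go to a person.
        return "escalate"
    return "auto"
```

Checking the human-only set first is deliberate: a very confident agent still cannot reject a candidate or extend an offer by itself.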

The KPI Sheet: Measuring What Matters

The success of talent acquisition technology hinges on tracking metrics that reflect both efficiency and fairness. From day one, organizations should track five categories of metrics:

1. Speed. Measure time-to-first-touch, time-to-interview, and time-to-offer. These indicators show whether the system is accelerating the hiring funnel or simply automating existing delays.

2. Quality. Assess how closely agent recommendations align with human expert ratings and post-hire performance proxies. Consistency here builds trust in the system.

3. Fairness. Monitor selection and score distributions across protected groups, and check for compliance with the 80 percent rule to avoid disparate impact. Regular bias audits are a must.

4. Experience. Candidate satisfaction scores (CSAT/NPS) and recruiter time saved both provide tangible measures of whether agents improve the process for humans on both sides of the equation.

5. Compliance. Track the percentage of requisitions audited, the share of agent actions that are logged, and the level of transparency achieved. These metrics ensure readiness for the EU AI Act and AEDT enforcement.
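The fairness check is simple arithmetic. The sketch below computes each group's selection rate, divides it by the highest group's rate, and flags anything under 0.8. The function names and the sample counts are illustrative assumptions, not real data, and a real audit involves more than this one ratio.

```python
def impact_ratios(selected: dict, applicants: dict) -> dict:
    """Selection rate of each group divided by the highest group's selection rate."""
    rates = {g: selected.get(g, 0) / n for g, n in applicants.items() if n > 0}
    if not rates:
        return {}
    top = max(rates.values())
    return {g: (rate / top if top > 0 else 0.0) for g, rate in rates.items()}


def below_threshold(ratios: dict, threshold: float = 0.8) -> list:
    # Groups whose impact ratio falls under the 80% rule of thumb.
    return sorted(g for g, r in ratios.items() if r < threshold)


# Made-up counts for illustration only.
applicants = {"group_a": 100, "group_b": 80}
selected = {"group_a": 40, "group_b": 20}
ratios = impact_ratios(selected, applicants)
flagged = below_threshold(ratios)
```

Here group_a is selected at 40% and group_b at 25%, so group_b's impact ratio is 0.25 / 0.40 = 0.625, which falls below 0.8 and would be flagged for review.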

Benchmarks to aim for:

- 65% faster hiring duration (SSRN)

- 91% human agreement (SSRN)

- 4.6/5 candidate satisfaction (SSRN)

- 79% faster interview scheduling (Fountain)

Build vs. Buy: Avoiding “Agent Washing”

With hype comes risk. Some vendors slap “agentic” labels on glorified macros. To smoke-test claims, ask:

1. Stateful Orchestration. Does the platform truly orchestrate across ATS, calendars, and communication tools, or does it simply automate one task at a time?

2. Human Gates. Are there mandatory human-in-the-loop points for rejections, offers, and exceptions, or can the system act without oversight?

3. Explainability. Does the system show why a candidate was recommended or rejected, or does it provide opaque scores?

4. Bias Audit Support. Can the tool export data for independent audits, including selection rates and impact ratios across demographics?

5. Action Logs. Are all decisions logged with who, what, when, and why for compliance purposes?

If the answer is fuzzy, you may be looking at agent washing.

The Next 24 Months: From Pilot to Platform

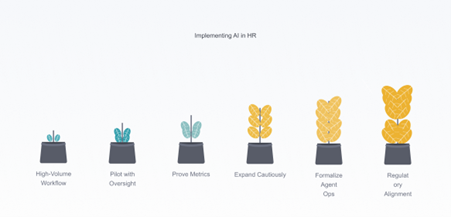

A pragmatic adoption roadmap:

1. Step One: High-Volume Workflow. Start with a single, high-volume process like interview scheduling, where efficiency gains are easy to measure and risks are low.

2. Step Two: Pilot with Oversight. Run the workflow with humans in the loop, capturing every metric from speed to fairness.

3. Step Three: Prove Metrics. Use benchmarks to validate whether the system is genuinely improving outcomes before scaling further.

4. Step Four: Expand Cautiously. Gradually add autonomy to sourcing and vetting only after proof points are established.

5. Step Five: Formalize AgentOps. Assign roles, create runbooks, and document compliance processes as systems mature.

6. Step Six: Regulatory Alignment. Map every step to the EU AI Act and AEDT requirements, ensuring readiness before enforcement deadlines arrive.

The goal isn’t a “lights-out” recruiting system. It’s a responsible, auditable platform that scales human judgment with machine agency.

Counterpoints & Pitfalls

Balanced adoption requires acknowledging risks:

1. Agent Washing. Many vendors exaggerate autonomy. Buyers must separate real agency from glorified macros.

2. Complexity Debt. Multi-agent orchestration is technically challenging. Without solid infrastructure, implementations stall or fail.

3. Bias Risks. Poorly trained models can perpetuate discrimination. Amazon’s 2018 failure is a reminder that fairness must be designed, not assumed.

4. Legal Exposure. With AEDT enforcement and lawsuits like Workday underway, enterprises face liability risks if agentic systems lack transparency and audits.

The antidote is incremental autonomy: start small, measure, add guardrails, and expand.


Epilogue: Humans at the Center of Agency

The irony of agentic hiring is that the more autonomy we give machines, the more intentional our human design must be.

Recruiters don’t vanish; they evolve. They become architects of trust, interpreters of context, and custodians of fairness. Managers don’t get replaced; they get unblocked, freed from scheduling drudgery to focus on decisions. Candidates aren’t talking to faceless bots; they’re experiencing faster, clearer, more consistent journeys — with human recourse if needed.

The future of recruitment intelligence will not be defined by who adopts agents first, but by who designs them responsibly. Done right, agentic hiring is not about replacing people with code. It’s about building teams where humans and agents collaborate — speed with fairness, scale with empathy, and autonomy with accountability.

And that future is already boarding. The only question is, will you be ready to fly it?
