Executive Overview

Recruiting has always been a tug-of-war between scale and judgment. The modern funnel brings torrents of applicants, but the winning decision still hinges on nuance: what a person can do next, not just what they did last. In 2025, AI recruiting platforms sit squarely in that tension. They promise reach and speed at the top of the funnel, pattern-finding in the middle, and fewer regrets at the offer stage. They also raise stakes: audits, consent, data risk, and how you explain a machine’s choice to a human who deserves an understandable answer.

The point of comparison isn’t “Who has the most features?” It’s “Which platform delivers trustworthy outcomes?” The leaders pair autonomy with governance, skills-first intelligence with clear explanations, and measurable ROI with a candidate experience that feels human.

From Punch Cards to Agentic Systems: The Long Road to 2025

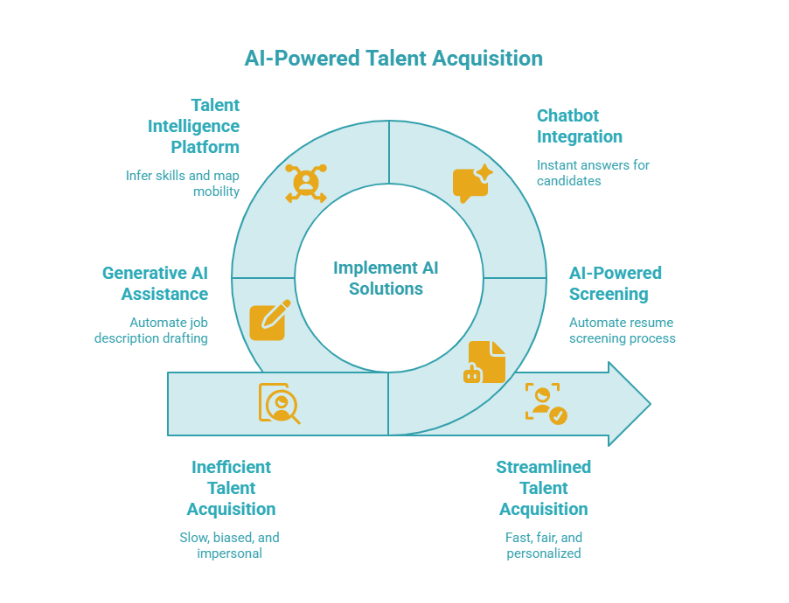

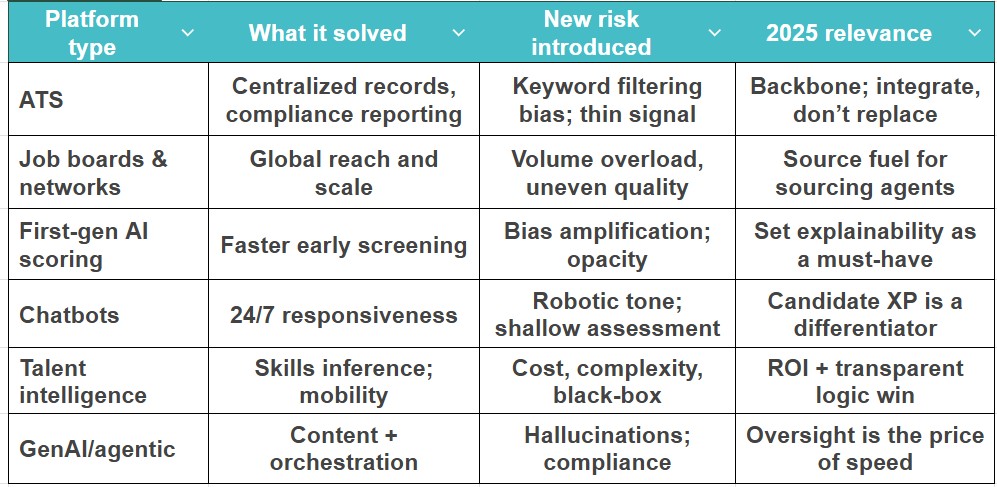

Technology in hiring has always evolved in waves. Each wave solved one bottleneck and created another, a rhythm that defines the journey toward AI-driven recruitment.

Foundations (1900s–1990s): Science meets filing cabinets

Industrial–organizational psychology gave recruiting its first evidence base: cognitive tests, structured interviews, and work samples with measurable validity. In the 1960s, large employers began experimenting with computerized job banks. By the 1990s, Applicant Tracking Systems (ATS) such as Taleo and iCIMS codified the workflow: requisitions, parsing, compliance, and reporting. They centralized data but offered little intelligence. Keywords ruled; nuance suffered.

Job boards and networks (1990s–2000s): Reach without relevance

Monster.com and CareerBuilder democratized access; LinkedIn’s professional graph, launched in 2003, turned postings into relationships. The upside was global reach. The downside was the resume flood. Search filters couldn’t keep pace with volume, and strong signal drowned in noise.

First-gen AI (2010–2015): Efficiency, with a warning label

Machine learning crept into screening. HireVue’s video analytics and Pymetrics’ neuroscience games promised speed and consistency. Amazon’s internal resume scorer tried to learn what “good” looked like from past hiring. The lesson arrived early and hard: models trained on biased histories reproduce that bias at scale. Amazon scrapped its system; several vendors retreated from face analysis. Trust became the limiting reagent.

Chatbots and assistants (2015–2020): Instant answers at scale

As mobile messaging went mainstream, conversational tools like Paradox and Mya cut the lag between interest and interview. McDonald’s “McHire” showed what speed looks like in high-volume work: time-to-hire down roughly 65 percent, candidate satisfaction around 85.9 percent. Still, many interactions felt scripted. Speed without warmth left an aftertaste.

Talent intelligence (2017–2022): Patterns across the graph

Deep-learning platforms such as Eightfold and SeekOut inferred skills, suggested adjacent capabilities, and mapped internal mobility. Leaders uncovered “hidden gems” and quantified workforce pipelines. The trade-offs were complexity, cost, and explainability. If a black-box score says “hire,” who is accountable when it’s wrong?

Generative and agentic AI (2023–2025): From assistance to orchestration

Generative models draft job descriptions, personalize outreach, summarize interviews, and answer hard questions in plain language. Agentic systems stitch steps together: a sourcing agent compiles a slate, a screening agent runs assessments, a scheduling agent coordinates calendars, and a compliance agent tracks what the others do. LinkedIn embedded generative assistance into Recruiter; staffing giants piloted 24-hour, AI-led hiring for hourly roles. Autonomy rose—and so did scrutiny.

The story of AI recruiting platforms is a century-long march from paperwork to orchestration. Each chapter left behind a tool that tracked better than it reasoned. 2025 is when that balance begins to shift.

How Platforms Actually Work in 2025

Beneath the branding, most AI recruiting platforms share a similar architecture. Understanding this pipeline helps HR and TA leaders ask sharper questions before they buy or integrate any system.

1) Data ingestion and normalization. Platforms pull resumes and profiles from your ATS, public networks, internal HRIS, and learning systems. They unify formats, deduplicate identities, and link records to skills taxonomies. Data provenance and consent flags matter here; tomorrow’s audit depends on today’s metadata. Interoperability with HR Open Standards, JSON Resume, and verifiable credential schemas reduces friction across tools and future-proofs audits.

2) Parsing and skills inference. NLP extracts entities (titles, employers, dates, skills) and infers adjacent or emerging capabilities—what someone is likely able to learn based on trajectories similar candidates took. Good systems distinguish signals (outcomes, artifacts, endorsements) from decorations (buzzwords).

3) Predictive analytics. Models estimate match quality, likely ramp time, and even risk of attrition, sometimes incorporating team context. The strongest platforms expose features and confidence—not just a score—so humans can reason with the model rather than against it.

4) Generative assistance. Drafted JDs, tailored outreach, interview guides, and candidate summaries save hours per requisition. Guardrails are essential: templates, tone controls, and fact-checking against source data to prevent hallucinations.

5) Agentic orchestration. Multi-agent workflows automate sourcing → screening → scheduling → feedback. Each agent logs actions, cites sources, and requests approval when crossing policy thresholds (e.g., moving from screen to reject). Without these controls, autonomy becomes a liability.
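The approval-gate idea in step 5 can be sketched in a few lines. This is a minimal illustration, not any vendor's implementation: the `AgentAction` record, the `REQUIRES_APPROVAL` policy table, and the stage names are all hypothetical. The point is that every action is logged with its cited sources, and gated transitions wait for a named human.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import List, Optional

# Hypothetical policy: stage transitions that need a named human approver.
REQUIRES_APPROVAL = {
    ("screen", "reject"),
    ("interview", "reject"),
    ("interview", "offer"),
}


@dataclass
class AgentAction:
    agent: str                 # e.g. "screening-agent"
    candidate_id: str
    from_stage: str
    to_stage: str
    sources: List[str]         # evidence the agent cites for the move
    approved_by: Optional[str] = None
    status: str = "pending"
    logged_at: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )


def submit(action: AgentAction, audit_log: List[AgentAction]) -> AgentAction:
    """Log every action; apply it only if ungated or already approved."""
    gated = (action.from_stage, action.to_stage) in REQUIRES_APPROVAL
    if gated and action.approved_by is None:
        action.status = "awaiting_approval"
    else:
        action.status = "applied"
    audit_log.append(action)  # logged whether or not it was applied
    return action
```

A screening agent proposing `screen` to `reject` without an approver ends up `awaiting_approval`, while a scheduling move from `screen` to `interview` is applied immediately. Both land in the same audit log.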


Platform Types Compared: Value, Trade-offs, and Today’s Relevance

A meaningful comparison of AI recruitment software requires context. Every generation solved one problem and introduced a new one. The right choice depends on understanding those trade-offs.

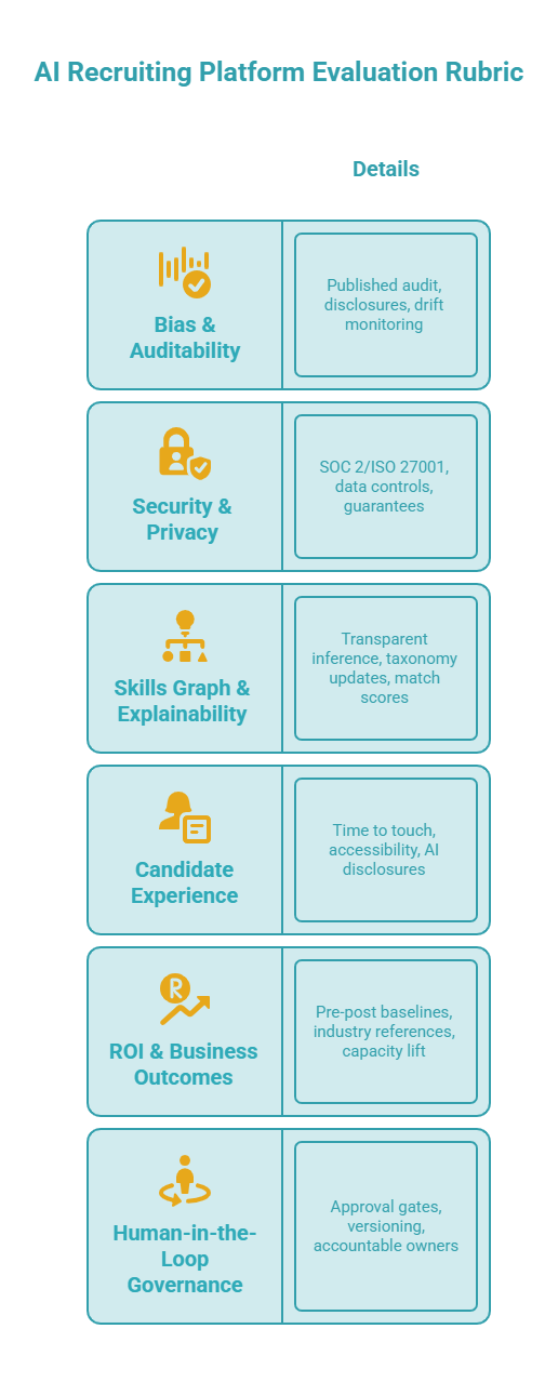

What Matters in 2025: The Six Non-Negotiables

Features impress buyers, but measurable outcomes determine adoption. The next phase of AI for talent acquisition depends on how responsibly platforms handle fairness, data, and governance. These six criteria separate durable systems from short-lived hype.

1) Bias, fairness, and explainability

Regulation turned ethics into operations. New York City’s Local Law 144 on automated employment decision tools requires annual bias audits and candidate notice. The EU AI Act classifies hiring AI as “high-risk,” making documentation and transparency obligations explicit. U.S. agencies have tied algorithmic decisions to anti-discrimination statutes. In practical terms: insist on subgroup impact metrics, published methodology, and explanations aligned to decisions humans make. An AI bias audit should show which factors influenced the score and how small input changes alter results.

What to ask vendors

- Do you publish a 12-month bias audit with subgroup results? Who performed it, and how often is it repeated?

- Can a candidate request an alternative process or an explanation, and how is that routed to humans?

- Which fairness metrics do you monitor (e.g., selection rate ratios, calibration)? How do you act when drift appears?
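To make "selection rate ratios" concrete, here is a small sketch of how such a ratio is commonly computed: each subgroup's selection rate divided by the highest subgroup's rate. The function names are illustrative, and the 0.8 threshold is the familiar four-fifths rule of thumb, used here as a screening heuristic rather than a legal test. A vendor's audit methodology may differ.

```python
from collections import Counter


def impact_ratios(outcomes):
    """outcomes: iterable of (group, selected) pairs. Returns {group: ratio}.

    Each ratio is the group's selection rate divided by the highest
    group's selection rate, so the best-off group scores 1.0.
    """
    totals, selected = Counter(), Counter()
    for group, was_selected in outcomes:
        totals[group] += 1
        if was_selected:
            selected[group] += 1
    rates = {g: selected[g] / totals[g] for g in totals}
    best = max(rates.values())
    if best == 0:
        raise ValueError("no selections recorded; ratios are undefined")
    return {g: rate / best for g, rate in rates.items()}


def flag_groups(ratios, threshold=0.8):
    """Groups whose ratio falls below the threshold (assumed: four-fifths)."""
    return sorted(g for g, r in ratios.items() if r < threshold)
```

With made-up numbers, if group A is selected at 40 percent and group B at 24 percent, B's ratio is roughly 0.6 and it would be flagged for review. The useful vendor question is what happens next: who is alerted, and what changes.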

2) Security and privacy

High-volume hiring systems concentrate PII, assessment artifacts, and chat transcripts—prime targets for attackers. A widely reported breach in high-volume retail hiring showed how quickly a candidate experience win can flip into reputational loss. Security posture is now a buying criterion, not a procurement footnote.

What to ask

- Which certifications are current (SOC 2, ISO 27001)? When were the last external pen-tests?

- What’s your retention policy for resumes, videos, and conversational data? Can we enforce our own?

- How do you segregate tenant data? Is any model training performed on our data by default?
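The retention question is easier to press when you can describe the behavior you expect. The sketch below is one way a customer-enforced policy might look; the artifact types, the day counts in `RETENTION_DAYS`, and the record shape are assumptions for illustration, not any platform's schema.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical retention windows in days, per artifact type.
RETENTION_DAYS = {"resume": 365, "video": 90, "chat_transcript": 180}


def due_for_deletion(records, now=None, legal_hold_ids=frozenset()):
    """Return ids of records past their retention window and not on legal hold.

    Each record is a dict with "id", "type", and a timezone-aware "created_at".
    """
    now = now or datetime.now(timezone.utc)
    due = []
    for rec in records:
        if rec["id"] in legal_hold_ids:
            continue  # legal holds override the retention schedule
        limit = timedelta(days=RETENTION_DAYS[rec["type"]])
        if now - rec["created_at"] > limit:
            due.append(rec["id"])
    return due
```

Ask whether the vendor can run an equivalent job on your schedule, with your windows, and export proof that the deletions happened.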

3) Skills-first intelligence and graph quality

The industry is moving past degrees and pedigree. The best AI recruiting platforms evaluate skills and adjacent capabilities verified through portfolios or certifications. Ask for transparency on taxonomies, update cycles, and how the system justifies each inference.

What to ask

- Which skills taxonomy do you use, and how do you extend it for our domain?

- Can we see why you inferred a skill? Can we override it and keep an audit trail?

- How do you represent potential—e.g., time-to-competence estimates, learning signals, or adjacent-skill ladders?

4) Candidate experience with accountability

Speed matters; so does dignity. From accessible apply flows to plain-language disclosures about AI use, experience and trust now compound. Platforms that combine instant scheduling with transparent status updates win. Those that surprise candidates with AI interviews without consent lose twice: in sentiment and in risk.

What to ask

- What’s the median time from application to first human touch? How is that measured?

- Where and how do you disclose automated decision support? Can candidates opt out without penalty?

- Do you track candidate satisfaction and drop-off by step? Can we A/B test flows?

5) ROI that survives scrutiny

Surveys report large productivity gains (BCG’s 2025 work cites benefits for more than 90 percent of adopters and productivity gains above 30 percent in some programs), but executive sponsors need verified numbers in their own context. That means baselining time-to-hire, cost-per-hire, recruiter load, and quality-of-hire before pilots, then publishing results afterward. Case studies that pair speed with diversity or retention improvements are stronger than cycle-time alone.

What to ask

- Show us three reference customers with similar requisitions and volumes. What improved beyond speed?

- Which metrics will we baseline before kickoff, and who signs off on them?

- How will you quantify recruiter capacity unlocked and reallocated (e.g., from screening to stakeholder coaching)?

6) Human-in-the-loop governance

Agentic systems make sequences of decisions. Without clear approval gates, logs, and rollback, small errors propagate. Treat the platform like critical infrastructure: change-managed, audited, and ultimately accountable to named humans.

What to ask

- Where in the workflow can humans override or annotate? Is that enforced by policy?

- Are prompts, parameters, and model versions logged and diffable? Can we export them for audit?

- How do you handle model updates mid-requisition? Do you freeze versions to keep outcomes explainable?
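"Logged and diffable" has a simple meaning in practice: two run configurations can be serialized and compared line by line, and a version change can be blocked while a requisition is open. The sketch below uses Python's standard `difflib` and `json` modules. The config keys (`model_version`, `prompt_id`, `temperature`) and the freeze rule are hypothetical examples of what a platform might record.

```python
import difflib
import json


def config_diff(old: dict, new: dict) -> list:
    """Line-level diff of two run configurations, serialized deterministically."""
    old_lines = json.dumps(old, indent=2, sort_keys=True).splitlines()
    new_lines = json.dumps(new, indent=2, sort_keys=True).splitlines()
    return list(
        difflib.unified_diff(old_lines, new_lines, "pinned", "proposed", lineterm="")
    )


def can_apply(pinned: dict, proposed: dict, requisition_open: bool) -> bool:
    """Assumed freeze rule: no model-version change while a requisition is open."""
    changed = pinned.get("model_version") != proposed.get("model_version")
    return not (requisition_open and changed)


pinned = {"model_version": "2025-03-01", "prompt_id": "screen-v4", "temperature": 0.2}
proposed = {**pinned, "model_version": "2025-06-15"}
for line in config_diff(pinned, proposed):
    print(line)
```

If a vendor cannot produce something like this diff for any two points in a requisition's life, explaining a contested decision later becomes guesswork.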

Case Studies: Four Moments That Redefined the Category

Amazon’s resume scorer (2014–2017). A stark lesson in training data. Learning from historical hiring patterns, the model learned historical bias. It penalized terms associated with women and was scrapped. Impact: fairness moved from blog-post virtue to board-level risk.

Unilever’s graduate hiring (2016–2019). A scaled proof point. Gamified assessments plus structured video screens compressed cycles by roughly 90 percent, saved about £1 million annually, and improved diversity by ~16 percent. Impact: when designed and measured well, AI can lift both speed and equity.

McDonald’s “McHire” experience (2019–2023, breach reported in 2025). A tale of two headlines. First, time-to-hire plummeted by around 65 percent and candidate satisfaction climbed to ~85.9 percent. Later, a security incident underscored that automation at scale magnifies both benefits and liabilities. Impact: security reviews entered the buying committee’s top five questions.

Randstad’s 24-hour hiring app (2025). An autonomy milestone. Applicants could move from interest to shift in a day. Impact: it proved what agentic orchestration can do, and it raised the bar for oversight and for evaluating false positives at speed.

The pattern across these stories is simple: the upside is real, and so is the downside. Platforms that earned trust paired innovation with measurement, consent, and governance.

Compliance, Risk, and Trust: Turning Policy into Product Requirements

Trust in AI candidate screening depends on making policy a product feature. Governments have started codifying what ethical AI looks like, and enterprise buyers are embedding those rules into their RFPs.

- Bias audits and notice. Where required, employers must audit automated employment decision tools yearly and disclose their use to candidates. Product requirements: audit exports, subgroup metrics, disclosure templates, and alternate pathways for candidates who opt out.

- Consent for sensitive signals. Laws restricting AI-scored video interviews make explicit consent and data deletion controls non-negotiable. Product requirements: consent capture, granular retention settings, deletion SLAs, and legal-hold workflows.

- High-risk classification. In jurisdictions classifying recruiting AI as “high-risk,” providers and users must document intended use, data governance, and risk controls. Product requirements: model cards, evaluation reports, change logs, and alignment with frameworks like NIST’s AI Risk Management Framework or ISO/IEC 42001 for AI management systems.

- Title VII and disability guidance. U.S. agencies have linked algorithmic decisions to anti-discrimination statutes, including accommodations for people with disabilities. Product requirements: accessible experiences, alternative assessments, human escalation routes, and records of accommodation handling.

Trust is won in the boring details: the candidate receives a clear notice; the recruiter sees why the match looks strong; the compliance lead can export a year of decisions with the evidence that informed them.

A Practical 2025 Scorecard (Use This in Your RFP)

Comparing top AI recruiting tools is valuable only if those comparisons translate into measurable business outcomes. This scorecard and playbook help organizations move beyond pilot theater toward responsible scaling.

- Bias & auditability (20%)

  - Published, recent bias audit with subgroup results and methodology

  - Candidate disclosures, opt-outs, and human review path

  - Ongoing drift monitoring; retraining policy spelled out

- Security & privacy (20%)

  - Current SOC 2/ISO 27001; independent pen-tests; breach history disclosed

  - Data minimization and tenant isolation by default; clear retention controls

  - Optional “no training on my data,” with contractual guarantees

- Skills graph & explainability (20%)

  - Transparent skill inference with source citations and override workflow

  - Regular taxonomy updates; domain tuning without vendor lock-in

  - Match scores with feature contributions and confidence bands

- Candidate experience (15%)

  - Median time to first human touch; accessibility compliance; localized UX

  - Transparent AI disclosures; simple opt-out paths; satisfaction measurement

  - Drop-off analytics by step; A/B testing support

- ROI & business outcomes (15%)

  - Pre-post baselines for speed, cost, quality, and diversity

  - References in your industry and requisition shape; measurable recruiter capacity lift

  - Clear post-pilot report structure and ownership

- Human-in-the-loop governance (10%)

  - Policy-enforced approval gates; versioning and rollback of models and prompts

  - Exportable logs for audits; change-management tied to risk levels

  - Named accountable owners for each automated step
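The weights above turn into a single comparable number with a few lines of arithmetic. The sketch below assumes each category is rated on a 0 to 5 scale by your evaluation team. The rating scale, the category keys, and the sample ratings are illustrative choices; only the weights come from the scorecard.

```python
# Weights from the scorecard above; they sum to 1.0.
WEIGHTS = {
    "bias_auditability": 0.20,
    "security_privacy": 0.20,
    "skills_explainability": 0.20,
    "candidate_experience": 0.15,
    "roi_outcomes": 0.15,
    "human_in_the_loop": 0.10,
}


def weighted_score(ratings: dict, scale_max: float = 5.0) -> float:
    """Combine per-category ratings (0..scale_max) into a 0..100 score."""
    missing = WEIGHTS.keys() - ratings.keys()
    if missing:
        raise ValueError(f"missing ratings: {sorted(missing)}")
    total = sum(WEIGHTS[c] * ratings[c] / scale_max for c in WEIGHTS)
    return round(100 * total, 1)


# Made-up ratings for a hypothetical vendor.
vendor_a = {
    "bias_auditability": 4,
    "security_privacy": 3,
    "skills_explainability": 5,
    "candidate_experience": 4,
    "roi_outcomes": 3,
    "human_in_the_loop": 2,
}
print(weighted_score(vendor_a))  # 73.0
```

A single number hides trade-offs, so keep the per-category ratings next to it. A vendor scoring 73 with a 2 on governance is a different risk than one scoring 73 evenly.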

Implementation Playbook: From Pilot to Platform

Comparisons are only useful if you turn them into outcomes. This playbook reduces pilot theater and accelerates learning.

Frame the problem. Pick requisitions where volume or variability strains your team (e.g., customer support cohorts, campus hiring, or niche technical roles). Define success beyond speed: quality, diversity, and recruiter time reallocation.

Baseline ruthlessly. Measure time-to-hire, cost-per-hire, satisfaction, and early performance before the pilot. Agree on how quality will be judged (e.g., ramp time, 90-day retention, manager satisfaction).
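A baseline only helps if the post-pilot comparison is computed the same way every time. The sketch below shows the arithmetic: percent change per metric, pilot versus baseline. The metric names and all numbers are made up for illustration, and which direction counts as "better" still depends on the metric.

```python
def percent_change(baseline: float, pilot: float) -> float:
    """Percent change from baseline to pilot, rounded to one decimal."""
    if baseline == 0:
        raise ValueError("baseline must be non-zero")
    return round(100 * (pilot - baseline) / baseline, 1)


def compare(baseline: dict, pilot: dict) -> dict:
    """Percent change for every metric present in both snapshots."""
    return {m: percent_change(baseline[m], pilot[m]) for m in baseline if m in pilot}


# Illustrative numbers only.
baseline = {"time_to_hire_days": 40, "cost_per_hire": 5000, "retention_90d": 0.80}
pilot = {"time_to_hire_days": 28, "cost_per_hire": 4500, "retention_90d": 0.84}
print(compare(baseline, pilot))
# {'time_to_hire_days': -30.0, 'cost_per_hire': -10.0, 'retention_90d': 5.0}
```

Agree on these definitions, and on who signs off on the baseline snapshot, before the pilot starts rather than after the results arrive.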

Design the guardrails. Map where agents act autonomously and where they request approval. Freeze model versions for the duration of the pilot. Turn on logging and bias monitors from day one.

Center the candidate. Publish a plain-language disclosure of AI use. Offer a human-led alternative without penalty. Survey candidates after each major step. A fast “no” delivered respectfully preserves brand equity.

Common Pitfalls (And How to Avoid Them)

Platform ≠ process. Buying a tool without redesigning steps just automates yesterday. Start with the outcome you want, then prune the steps that don’t serve it.

One-size fairness. A fairness metric that helps one subgroup can hurt another. Expect iteration. Publish your policy choices and stick to them until the data tells you to adjust.

The Future, Without the Hype: 2025–2030

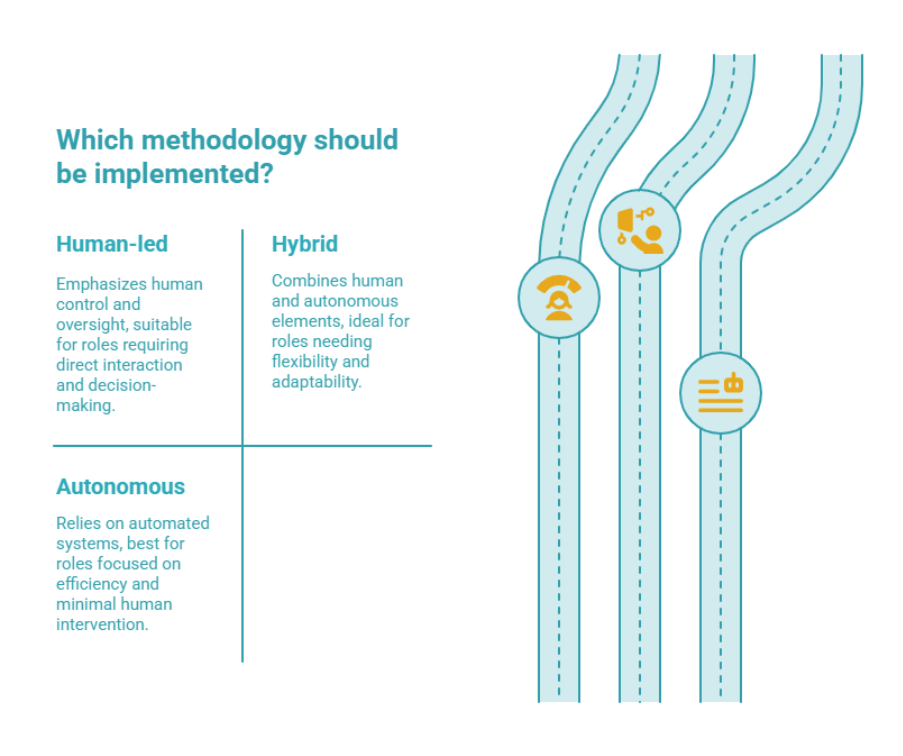

Three trajectories are plausible, and many stacks will blend them.

Human-led, AI-assisted. Copilots take the swivel-chair work, recruiters own judgment and relationship work. This model scales consultative recruiting and limits downside risk, but leaves some efficiency on the table.

Hybrid governance with narrow autonomy. Agents own bounded steps (e.g., outreach and scheduling) under policy. Oversight boards review audit logs and fairness reports quarterly. Most enterprises will land here first: measurable wins, controlled scope.

Autonomous hiring for high-volume roles. End-to-end automation expands in hourly and seasonal work where stakes and variance are lower, and where candidate expectations center on speed. The frontier challenge is catching false positives at scale without re-introducing friction that erases the efficiency gains.

Across all trajectories, three enabling shifts loom large: verifiable credentials (“skills passports” attached to artifacts), tighter interoperability (HR Open standards, portable profiles), and integrated workforce planning (recruiting signals feed headcount, churn forecasts, and internal mobility). The most resilient platforms will treat these as first-class primitives, not bolt-ons.


FAQs

Q1: How are AI recruiting platforms different from ATS systems?

AI recruiting platforms go beyond record-keeping. They use intelligence to analyze, match, and engage candidates, while ATS systems primarily track applications and compliance data.

Q2: How do AI recruiting platforms work in 2025?

Modern platforms combine machine learning, generative AI, and agentic orchestration to automate sourcing, screening, and communication—within human-defined governance.

Q3: What matters most when comparing AI recruiting tools?

Focus on transparency, bias audits, explainability, and candidate experience rather than feature lists. The best tools prove fairness and ROI with data.

Q4: How can companies ensure fairness in AI hiring?

Implement ongoing bias audits, use diverse training data, and allow human review of automated decisions. Fairness must be continuously measured.

Q5: What’s the best way to implement AI recruiting software?

Start small with a pilot. Establish guardrails, measure baselines, involve compliance teams early, and iterate before scaling enterprise-wide.

Conclusion: What “Good” Looks Like Now

The past two decades taught a hard truth: more technology does not mean better hiring. ATS systems organized paperwork but missed potential. Early AI sped up screening but amplified bias. Chatbots improved responsiveness but lacked empathy.

The new generation of AI recruiting platforms combines autonomy with accountability. They offer transparency in decision-making, verify skill evidence, and empower recruiters to focus on judgment rather than logistics. The best systems integrate fairness, security, and explainability as core design features, not afterthoughts.

In 2025, a good AI recruiting platform is not a promise. It’s a pattern you can observe:

- Decisions accompanied by reasons a human can understand and contest.

- Speed that doesn’t collapse into sloppiness or security debt.

- Skills evidence that travels with the candidate and survives audit.

- Candidate experiences that respect time and agency.

- ROI that shows up where it counts: better slates, fewer regrets, stronger teams.

- And above all, humans who stay accountable for where the machine is confident and where it is not.

Ultimately, AI recruiting platforms should help teams do the human work better—coaching managers, assessing cultural fit, and telling the story behind a career shift. Technology is the infrastructure. Judgment remains the differentiator.

Takeaway for buyers:

Weight your scorecard to bias audits, security, skills graph quality, and governance. Make vendors prove candidate experience and ROI in your context. Treat agents like critical infrastructure. Then scale what works.
