Why Tech Hiring Needs a Reset

Tech hiring sits at a breaking point. Demand for AI, cybersecurity, data, and cloud roles keeps growing, yet qualified people go unnoticed, lost in overflowing pipelines and keyword screens. Recruiters move fast to hit SLA targets; hiring managers complain that shortlists lack depth; candidates receive automated rejections that explain nothing. Everyone is busy, but the system delivers noise. This is why rethinking tech hiring with a skills-first lens has moved from idea to necessity.

The root cause is structural. Degrees still gatekeep even when they no longer predict how someone will perform in modern, team-based, tool-rich environments. Résumés are frozen snapshots of the past, not living signals of current capability. Keyword filters reward buzzwords and punish adjacent experience. Unstructured interviews elevate charm over craft. The result is slow time-to-slate, mismatched hires, and stagnant diversity.

Artificial intelligence offers a rebuild rather than a patch. By modeling skills as connected networks, AI can read context, detect adjacencies, and surface potential—spotting the platform engineer who can cross the cloud boundary, the analyst who can grow into machine learning ops, the QA lead who can run reliability programs. Used well, AI moves organizations from pedigree to proficiency, from guesswork to evidence. Used carelessly, it scales historical bias. This article lays out the practical path to do it right—grounded, measurable, and humane.


From Gut Instinct to Graphs: A Short History of Hiring

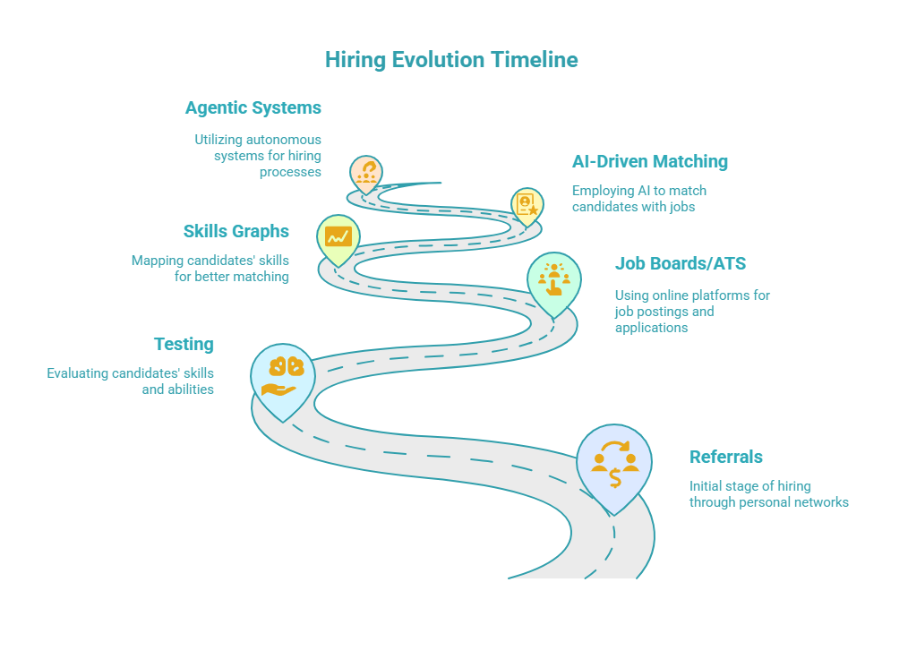

In the early decades of industrial growth, hiring was hyper-local. People found roles through referrals, neighborhood ties, and union halls. If a foreman trusted your reference, you got a tryout. If you lacked the network, you were invisible. It was efficient for insiders and exclusionary for everyone else.

Mid-century, large employers turned to standardized tests to add rigor. Aptitude batteries and personality inventories promised objectivity at scale. They reduced some randomness but introduced their own bias, privileging narrow expressions of intelligence and penalizing cultural difference. Testing became a gate that looked scientific yet often missed real-world skill. This period seeded the public skills taxonomy efforts that came later.

The internet blew the gates off. Job boards delivered volume at a scale recruiters had never seen. Applicant tracking systems emerged to impose order, and the keyword era began. Context evaporated. Candidates learned to pack résumés with the right nouns; great talent without the exact phrasing fell through the cracks. Speed increased; signal fell.

During the 2010s, the market started shifting to skills as data. Public taxonomies like O*NET and ESCO cataloged thousands of competencies. Platforms mapped professional journeys and built skill graphs showing which capabilities travel together. The idea took root: work isn’t just titles and tenures—it’s a network of skills that evolve.

Modern AI sits on top of those maps. It doesn’t simply count words; it models meaning. It recognizes that Kubernetes and ECS cluster around cloud orchestration, that Kafka sits near event streaming, that incident management lives next to observability. It learns that a back-end engineer who scaled APIs probably understands caching, queuing, and failure modes—even if the résumé never uses the exact same labels.

Why a Skills-First Model Now Beats Credential-First

Credentials once acted as shorthand for readiness. A degree signaled persistence and baseline rigor; a brand-name employer signaled screening. But credentials age, while skills compound. In fast-moving domains, the half-life of a tool can be shorter than a degree program. Meanwhile, talent grows along non-linear paths: bootcamps, open-source contributions, internal rotations, and hands-on projects that rarely fit tidy checkboxes.

A skills-first model treats capability as the primary truth. It asks: What can this person do today? What adjacent skills can they pick up quickly? Which outcomes have they delivered in contexts that look like ours? This reframing broadens the pool, surfaces hidden workers, and improves prediction. It also reduces pedigree bias, which quietly favors the already advantaged.

For executives, the trade-offs are pragmatic. Skills-first hiring shortens time-to-productivity because the match is closer to the work. It increases retention because people see clear mobility paths. It improves resilience because teams are assembled around fundamentals—like observability, testing depth, data modeling, and secure design—rather than around a brittle list of vendor nouns.

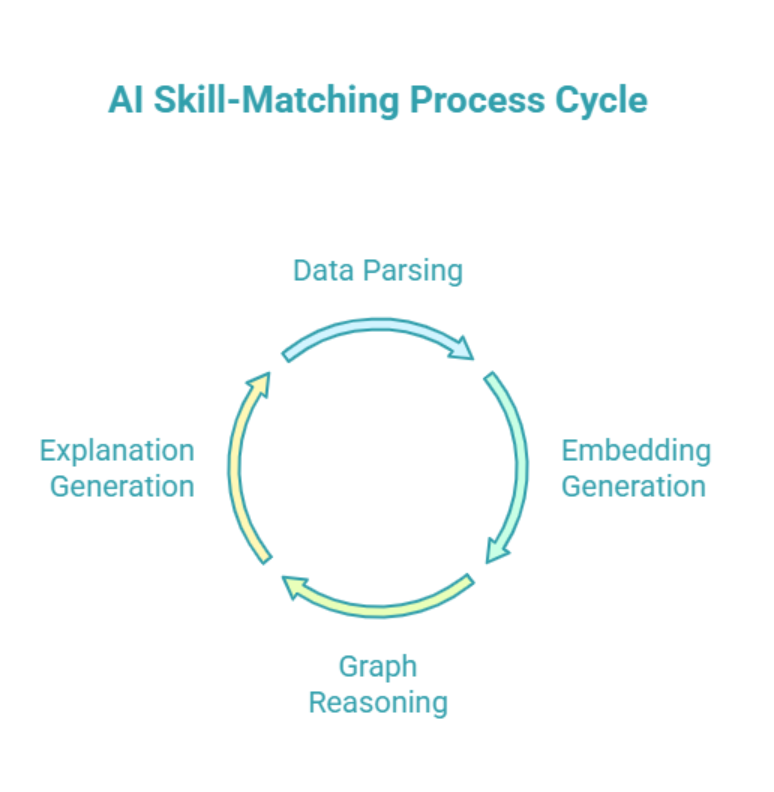

How AI Actually Identifies and Matches Skills

1) Natural language understanding. Modern models read résumés, job descriptions, and portfolios as language, not as bags of keywords. They parse verbs, objects, and context: “built CI/CD pipelines” indicates DevOps; “deployed canary releases with feature flags” points to progressive delivery; “reduced churn by cohort-based LTV analysis” signals product analytics.

2) Semantic embeddings. Skills, tools, and tasks are encoded as vectors, so related items sit close together. This is where a living skills ontology gives structure. Engineers fluent in Kafka often sit near event-driven microservices; analysts who use dbt cluster near modern data stacks; experience with Terraform lies close to infrastructure-as-code more broadly. That geometry makes adjacency measurable.

3) Hybrid taxonomies and ontologies. Static taxonomies make skills comparable across roles and regions; adaptive ontologies learn new relationships as technologies emerge. Together they become a living “skills brain” that keeps pace with the market while staying auditable for HR and compliance teams.

4) Graph reasoning. People, roles, and skills form a graph. Graph neural networks traverse that web to infer who can transition where, which combinations predict success, and how to rank candidates for potential rather than word overlap. The same machinery powers internal mobility, succession planning, and reskilling at scale.

5) Human-centered interfaces. Explanations matter. Instead of a black-box score, mature systems show evidence: the experiences that contributed most, the gaps that remain, the ramp plan that would close them, and comparable candidates. Recruiters and managers make the call with better signal and less noise.
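To make the embedding idea in step 2 concrete, here is a minimal sketch of how "closeness" between skills can be measured with cosine similarity. The three-dimensional vectors and the `SKILL_VECTORS` table are invented for illustration; real embeddings come from a trained model and have far more dimensions.

```python
import math

# Toy vectors, made up for this example. Real skill embeddings are learned.
SKILL_VECTORS = {
    "kubernetes": [0.9, 0.1, 0.0],
    "ecs":        [0.8, 0.2, 0.1],
    "kafka":      [0.1, 0.9, 0.2],
    "dbt":        [0.0, 0.3, 0.9],
}


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def nearest_skills(skill, k=2):
    """Return the k skills most similar to `skill`, best match first."""
    target = SKILL_VECTORS[skill]
    scored = [
        (other, cosine_similarity(target, vec))
        for other, vec in SKILL_VECTORS.items()
        if other != skill
    ]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)[:k]


neighbors = nearest_skills("kubernetes")
```

With these toy numbers, `ecs` ranks as the closest neighbor of `kubernetes`, which mirrors the cloud-orchestration cluster described earlier. The ranking is only as good as the vectors behind it, so the table itself is the part a real system would need to learn and audit.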

Moving Beyond Exact Fit: Hiring for Adjacency and Potential

The brittle checklist (five tools, three frameworks, two clouds) locks out capable people and slows delivery. Teams end up waiting for unicorns while projects slip. Adjacency-aware matching fixes this. If a candidate has four of the five tools and strong fundamentals, the model highlights them, shows the missing piece, and proposes a learning path. Managers get options rather than dead ends, which is where thoughtful AI recruitment tools add real value.

This is especially valuable in platform and data work where names change but principles persist. A site reliability engineer who lives and breathes incident command, postmortems, and SLOs will adapt to a new observability vendor faster than someone who merely listed the right brand. A data engineer who understands modeling trade-offs and lineage can move between orchestration frameworks with little friction. AI turns those intuitions into quantitative, repeatable decisions.
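The "four of five tools" scenario can be sketched in a few lines. This is an illustrative outline, not a production matcher: the `ADJACENT` map, the half-credit weight for adjacent skills, and the example skill lists are all assumptions chosen to keep the example small.

```python
# Hypothetical adjacency map: required skill -> skills close enough to ramp from.
ADJACENT = {
    "kubernetes": {"ecs", "nomad"},
    "terraform": {"pulumi", "cloudformation"},
    "kafka": {"rabbitmq", "kinesis"},
}


def match_report(required, candidate_skills):
    """Split required skills into exact matches, adjacent matches, and gaps."""
    candidate = set(candidate_skills)
    exact, adjacent, missing = [], {}, []
    for skill in required:
        if skill in candidate:
            exact.append(skill)
            continue
        bridges = sorted(ADJACENT.get(skill, set()) & candidate)
        if bridges:
            adjacent[skill] = bridges  # candidate can ramp from these
        else:
            missing.append(skill)
    # Exact matches count fully; adjacent ones count half (an arbitrary weight).
    score = (len(exact) + 0.5 * len(adjacent)) / len(required)
    return {"exact": exact, "adjacent": adjacent, "missing": missing, "score": score}


report = match_report(
    required=["python", "postgresql", "kafka", "terraform", "kubernetes"],
    candidate_skills=["python", "postgresql", "kafka", "terraform", "ecs"],
)
```

Here the candidate lacks Kubernetes but knows ECS, so the report lists Kubernetes under `adjacent` rather than `missing`. That distinction is what lets a manager see a ramp plan instead of a rejection.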

Reading the Human Signals: Soft Skills, Writing, and Collaboration

Tech succeeds on habits as much as on hard skill. The engineer who writes clear design docs shortens review cycles. The analyst who frames trade-offs invites better product decisions. The security lead who de-escalates incidents protects sleep and revenue. These behaviors show up in artifacts, which is why a light, relevant soft skills assessment built on work samples, writing prompts, and structured rubrics can capture them.

AI supports this by helping teams evaluate structured work samples. Short, authentic tasks—write a small service with tests, critique a flawed dashboard, draft an incident retro—produce evidence that models and humans can both read. Lightweight writing prompts reveal reasoning and clarity. When scored against rubrics, these signals are more predictive than brainteasers or vibe checks.

Caution is required with video analytics and facial inference; the risk of spurious correlation is high. Favor text, code, and task outputs over appearance and accent. Keep humans in the loop where nuance matters—especially for leadership roles and culture-setting positions.

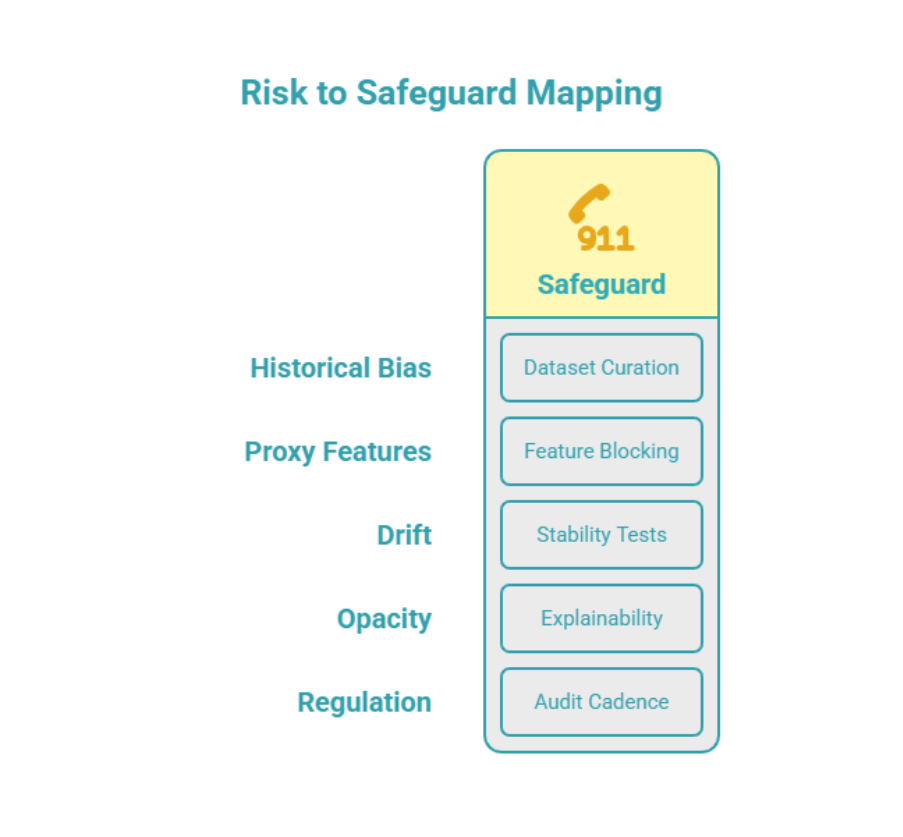

Risks, Bias, and the Governance You Must Own

AI is not a neutral referee. Train on biased outcomes, and it will learn to reproduce them. Hide the reasoning, and you will lose trust. Fail to monitor, and drift will erode quality. Treat AI bias in hiring as an operational risk.

Put guardrails in five places:

- Data curation. Minimize proxies for protected attributes; balance examples across cohorts; refresh frequently so recency does not masquerade as merit.

- Feature control. Block brittle or ethically dubious signals; test sensitivity to fields like school names and gaps; prefer evidence from tasks over self-report.

- Explainability. Ship ranked factors, not just a score. Show why a match exists and what would change the decision.

- Human checkpoints. Require human approval for adverse actions and high-impact hires; store rationales for audit.

- Bias audit cadence. Instrument adverse-impact ratios and run counterfactual tests; treat fairness like uptime with alerts, owners, and runbooks.
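An adverse-impact ratio is simple to compute: divide each group's selection rate by the highest group's selection rate. The sketch below assumes invented counts and uses 0.8 as the red line, following the commonly cited four-fifths rule of thumb; the right threshold for your organization is a policy and legal decision, not something the code decides.

```python
def adverse_impact_ratios(outcomes):
    """Map each group to its selection rate divided by the highest group's rate.

    `outcomes` maps a group label to (selected, applicants).
    """
    rates = {
        group: selected / applicants
        for group, (selected, applicants) in outcomes.items()
        if applicants > 0
    }
    top_rate = max(rates.values())
    if top_rate == 0:
        raise ValueError("no group had any selections; ratios are undefined")
    return {group: rate / top_rate for group, rate in rates.items()}


def groups_below(ratios, red_line=0.8):
    """Return groups whose ratio falls under the red line (0.8 is an assumed value)."""
    return sorted(group for group, ratio in ratios.items() if ratio < red_line)


# Invented screening-stage counts, for illustration only.
screening = {"group_a": (30, 100), "group_b": (18, 100)}
ratios = adverse_impact_ratios(screening)
flagged = groups_below(ratios)
```

In this made-up data, group_b is selected at 18% against group_a's 30%, a ratio of 0.6, so it is flagged. Running the same calculation at each stage shows where in the funnel the gap opens.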

Business Case: Speed, Quality, Fairness, and Candidate Trust

Leaders buy outcomes. For AI in talent acquisition, skills intelligence delivers value along four lines.

- Speed. Time-to-slate drops when low-signal screens disappear and adjacent matches rise. Coordinators stop chasing interviews that should never have been scheduled.

- Quality. Matches improve as models weight depth, recency, and context. Ramp time shortens because onboarding plans target specific gaps.

- Fairness. With audits and feature control, adverse impact narrows compared to unstructured hiring. Documentation improves because explanations and rationales are baked in.

- Candidate trust. Clear timelines, explanations for outcomes, and pointers to learning content turn a “no” into a “not yet,” preserving brand and future pipelines.

On the ground, candidate experience is a product. Publish a candidate charter that explains where AI is used, how decisions are reviewed, and how to appeal. Send status updates that say more than “in review.” Offer micro-feedback after structured tasks. Measure candidate NPS, not just conversion. Trust compounds when people feel seen and informed.

Internal Mobility and Reskilling: Your Fastest, Cheapest Talent Pool

The shortest path to a scarce skill often runs through the people you already employ. A transparent internal talent marketplace uses the same skills graph to surface adjacencies, offer micro-learning, and route talent to gigs and rotations.

Launch an internal marketplace that lists gigs, mentorships, and short rotations alongside formal roles. Pair it with micro-learning tied to your stack. Give managers incentives to graduate talent, not hoard it. When employees can see transparent paths and acquire targeted skills, retention improves and hiring costs fall. The same AI that powers external matching becomes a mobility engine.

Adoption Playbook: What to Buy, What to Build, How to Measure

A practical AI adoption strategy has three moves: buy the commodity plumbing, build the parts that encode your strategy, and instrument like an SRE team.

Build the parts that encode your strategy: how you weight potential versus presence, which adjacencies you trust, which fundamentals you prize, and how post-hire outcomes feed back into models. Wrap those rules behind stable APIs so they survive platform swaps.

Instrument like an SRE team. Create model registries with owners, objectives, and drift monitors. Log decision explanations and human overrides. Run quarterly fairness game-days where you stress-test with synthetic profiles and red-team the pipeline.
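One way to stress-test with synthetic profiles is a counterfactual check: change a single field that should not matter and see whether the score moves. In the sketch below, `Profile`, `toy_score`, and the school names are hypothetical. `toy_score` stands in for a real model and deliberately leaks school prestige so the test has something to catch.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Profile:
    skills: frozenset
    school: str
    gap_months: int


def toy_score(profile):
    """Stand-in for a real model; deliberately rewards one school name."""
    score = 10 * len(profile.skills)
    if profile.school == "Prestige U":
        score += 5
    return score


def counterfactual_deltas(score_fn, profile, field_name, alternatives):
    """Score change when only `field_name` is swapped for each alternative."""
    base = score_fn(profile)
    return {
        alt: score_fn(replace(profile, **{field_name: alt})) - base
        for alt in alternatives
    }


synthetic = Profile(frozenset({"python", "sql"}), "State College", 0)
deltas = counterfactual_deltas(
    toy_score, synthetic, "school", ["Prestige U", "Bootcamp"]
)
sensitive = any(delta != 0 for delta in deltas.values())
```

Any nonzero delta means the field influences the outcome. For a field like school name, that is a finding to log, assign an owner, and review during the game-day.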

Measure the metrics that matter: time-to-slate, time-to-productivity, ninety-day quality by role family, adverse-impact ratios, candidate NPS, internal fill rate, and model stability. Publish a one-page scorecard to executives every month.

What Changes Next: Agentic AI and Living Skills Ecosystems

The next wave is agentic AI—systems that handle multi-step work autonomously under policy and human supervision. In hiring, that means agents that draft a role from business goals, source candidates, propose assessments, schedule panels, summarize evidence, and prepare a decision memo. Humans approve, adjust, and own the outcome.

As these agents connect to learning and planning, AI workforce planning becomes continuous. The boundary between hiring and development fades. When a team’s skills map shows a risk, it proposes a reskilling sprint. When demand spikes, it taps the internal marketplace before opening an external requisition. The organization becomes a living skills ecosystem.

Role Walkthrough: Hiring a Senior Back-End Engineer, Skills-First

Define the capability graph. Start with fundamentals: concurrency, API design, database modeling, caching and queuing, testing depth, observability, security hygiene, and deployment practices. Layer in stack specifics only where they are decisive. Mark acceptable adjacencies—Django ⇄ Flask, Spring ⇄ Micronaut, PostgreSQL ⇄ MySQL—and define ramp windows.
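A capability graph does not need heavy tooling to start. The sketch below is one possible shape, assuming a hypothetical `CapabilityGraph` class; the adjacency pairs come from the step above, while the ramp windows in weeks are placeholder values you would set yourself.

```python
from dataclasses import dataclass, field


@dataclass
class CapabilityGraph:
    fundamentals: set
    # Maps an unordered skill pair to an assumed ramp window in weeks.
    ramp_weeks: dict = field(default_factory=dict)

    def add_adjacency(self, a, b, weeks):
        # frozenset makes the pair symmetric: Django <-> Flask is one entry.
        self.ramp_weeks[frozenset((a, b))] = weeks

    def ramp_for(self, required, candidate_skills):
        """Return 0 for an exact match, the shortest ramp window for an
        accepted adjacency, or None when neither applies."""
        if required in candidate_skills:
            return 0
        windows = [
            self.ramp_weeks[frozenset((required, have))]
            for have in candidate_skills
            if frozenset((required, have)) in self.ramp_weeks
        ]
        return min(windows) if windows else None


graph = CapabilityGraph(
    fundamentals={"concurrency", "API design", "database modeling", "observability"}
)
# Pairs come from the walkthrough; the week counts are placeholders.
graph.add_adjacency("Django", "Flask", weeks=4)
graph.add_adjacency("Spring", "Micronaut", weeks=4)
graph.add_adjacency("PostgreSQL", "MySQL", weeks=2)
```

Calling `graph.ramp_for("Django", {"Flask", "MySQL"})` returns the four-week window, while a candidate with no accepted adjacency gets `None`. Keeping ramp windows explicit makes the later comparison of planned versus actual ramp time possible.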

Construct evidence. Replace trivia interviews with authentic tasks: a small service with tests, a code review of a deliberately flawed PR, and a design discussion focused on trade-offs. Score with rubrics that distinguish shallow name-dropping from grounded reasoning. Ask for a brief design doc that captures risks, rollback, and metrics.

Run the match. Feed résumé, portfolio, and task outputs into the system. Let AI propose candidates with strong adjacency and clear ramp plans. Require a human checkpoint before scheduling the final loop. Present explanations: which experiences contributed, which gaps remain, and what onboarding would close them.

Decide and learn. After hire, track ninety-day and one-eighty-day outcomes—defect escape rate, on-call reliability, code review signal, and delivery predictability. Feed those metrics back to refine weights on potential versus presence. Over time, your model becomes a mirror of what success actually looks like in your environment.

Regulation, Risk, and Communications: Operating in the Real World

Automated decision tools in hiring now sit under expanding oversight, including NYC Local Law 144 and the EU AI Act. Treat the AI bias audit as routine. Document data lineage, model objectives, and known limitations. Keep a registry of active models with owners and rollback plans. Require annual bias audits with internal quarterly checks. Track adverse-impact ratios by stage—screening, assessment, decision—and publish summaries to HR.

Communicate with candidates like adults. Provide plain notices that explain where AI is used, which signals are evaluated, and how human review works. Offer an appeal path when candidates believe material context was missed. For accessibility, provide non-video assessment alternatives. Minimize retention windows and bind data to purpose.

Internally, train managers and interviewers on how to read explanations, challenge the model when evidence conflicts with reality, and document rationales. Establish an escalation path when fairness or validity concerns arise. The combination—documentation, transparency, and training—reduces risk while increasing trust.

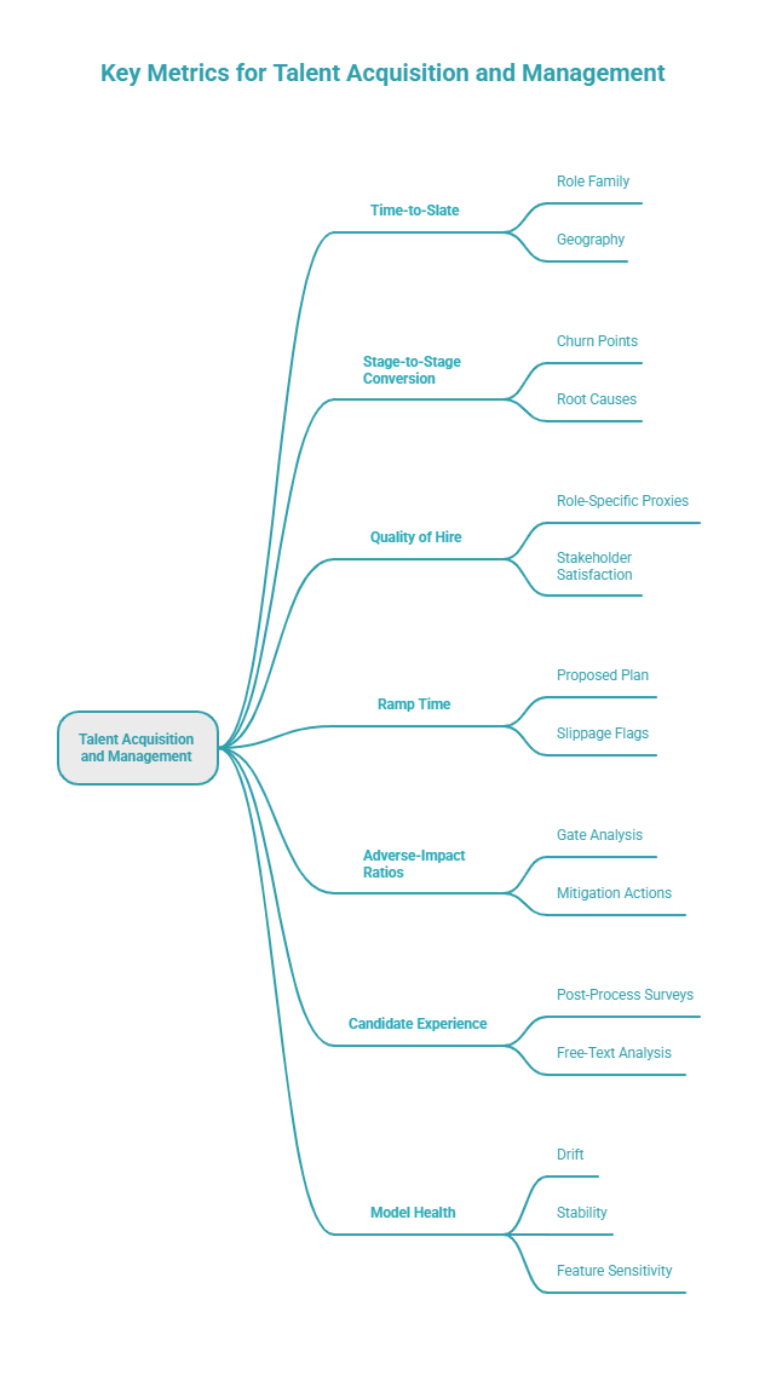

Metrics and Dashboards: Turning Hiring into a Managed System

When hiring becomes a managed system, performance improves because feedback is continuous. This is the work of AI in HR analytics. A useful dashboard tracks:

- Time-to-slate by role family and geography, with trends and variance.

- Stage-to-stage conversion and where candidates churn; annotate root causes.

- Quality of hire at ninety and one-eighty days using role-specific proxies—defects, incident ownership, review helpfulness, stakeholder satisfaction.

- Ramp time against the proposed plan for adjacent-fit hires; flag slippage early.

- Adverse-impact ratios at every gate and the status of mitigation actions.

- Candidate experience via post-process surveys and free-text analysis.

- Model health—drift, stability, and feature sensitivity—with clear owners and SLAs.

If adverse impact passes a red line, pause the offending stage and run a game-day to isolate causes. If ramp time slips in a role family, examine the onboarding plan and the adjacency rules that admitted those candidates. If candidate NPS drops, read the free-text, fix the communication cadence, and update expectations. Treat this like SRE for talent: alerts, runbooks, and continuous improvement.
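The alert-and-runbook pattern above can start as a small rule table. This is a sketch under stated assumptions: the metric names, thresholds, and action strings are placeholders, and a real setup would pull the snapshot from your dashboard rather than a hand-written dictionary.

```python
# Each rule: (metric name, breach test, runbook action). Thresholds are placeholders.
RULES = [
    ("adverse_impact_ratio", lambda v: v < 0.8,
     "pause the stage and run a fairness game-day"),
    ("ramp_slip_weeks", lambda v: v > 2,
     "review the onboarding plan and adjacency rules"),
    ("candidate_nps_change", lambda v: v < -10,
     "read the free-text and fix the communication cadence"),
]


def triggered_actions(snapshot):
    """Return (metric, action) pairs for every rule the snapshot breaches."""
    actions = []
    for metric, breached, action in RULES:
        value = snapshot.get(metric)
        if value is not None and breached(value):
            actions.append((metric, action))
    return actions


# Invented weekly snapshot for one role family.
snapshot = {
    "adverse_impact_ratio": 0.72,
    "ramp_slip_weeks": 1,
    "candidate_nps_change": -15,
}
alerts = triggered_actions(snapshot)
```

With this snapshot, the adverse-impact and candidate NPS rules fire while ramp time stays quiet. Keeping rules in one table makes it easy to assign an owner to each and to review thresholds alongside the monthly scorecard.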

Manager Playbook: Week One to Week Twelve

Great hires deserve great starts. In week one, align on outcomes, owners, and risks; ship a small change to exercise the toolchain. Tie learning plans to the skills graph so AI learning and development supports the first quarter on the job. In weeks two to four, pair on a meaningful feature, rotate code reviews, and run a blameless retro. Weeks five to eight focus on autonomy: the hire leads a design review, proposes metrics, and takes a light on-call shift shadowing an experienced engineer. Weeks nine to twelve consolidate mastery: complete a scoped project end-to-end, present lessons learned, and mentor the next new joiner.

FAQs

Q1: What is skills-based hiring and how does AI enable it?

Skills-based hiring focuses on demonstrable capabilities rather than pedigree. AI maps skills to outcomes, shows adjacencies, and explains why a candidate is a fit today and how quickly they can ramp.

Q2: How do AI recruitment tools identify and match candidate skills?

They use language models, embeddings, and a maintained skills ontology to extract signals from résumés, portfolios, and tasks, then rank candidates by evidence and potential.

Q3: Can AI hiring platforms help reduce bias in recruitment?

Yes, when governed. Regular AI bias audits, feature controls that block brittle proxies, and human review reduce disparate impact compared with unstructured processes.

Q4: How does AI improve internal mobility and reskilling initiatives?

A skills graph powers an internal talent marketplace that surfaces adjacencies, recommends micro-learning, and routes people to gigs and rotations that build targeted capabilities.

Q5: What metrics should organizations track to measure AI-driven hiring success?

Track time-to-slate, time-to-productivity, ninety-day quality, adverse-impact ratios, candidate NPS, internal fill rate, and model stability. Use AI in HR analytics dashboards to monitor drift and fairness.

Conclusion: Match What Matters

Hiring has always been a proxy war over signal. We used résumés and degrees because they were available, not because they were accurate. Now we can model the work itself—skills, adjacencies, learning rates—and show our reasoning as we make decisions. That is the point.

If you anchor on skills, design humane assessments, and keep humans responsible for judgment, AI becomes a force multiplier for fairness and performance. You will interview fewer people for better reasons. You will select for fundamentals that survive tool churn. You will promote mobility rather than hoard talent. You will open doors for capable people who were invisible behind credentials and keywords.

The path is practical and within reach: start with a skills language, light up parsing and embeddings, wire in a graph, ship explanations, audit for bias, and close the loop with outcomes. Do that, and you trade résumé roulette for intelligent matching—and you build a team that can deliver under real-world conditions.
