Why this matters now

Walk into any enterprise talent meeting and you can feel the tension. Budgets are tight, headcount plans are fluid, and every team is under pressure to do more with less. Meanwhile, AI keeps marching into HR tech—parsing résumés, chatting with candidates, scheduling interviews, and nudging hiring managers to move. The promise is intoxicating: faster hiring, better matches, lower cost per hire.

The promise vs. the reality

Here’s the honest truth: not everything in recruiting can be automated, and not everything that can be automated should be. The recruitment funnel is part pipeline and part relationship. It runs on data and judgment, speed and empathy. Treat it like a factory line and you’ll ship bodies, not hires. Treat it like a black box and you’ll ship bias, not progress.

The purpose of this article

This guide draws a clear line between what AI does well today, what it does poorly, and what it cannot, and should not, replace. Think of it as a field manual: practical, candid, and built from the ground up.

How we got here: a quick history of automation in hiring

The keyword decade: early ATS platforms

Late-1990s ATS platforms were blunt instruments. They stored applications, matched keywords, and enforced process. If your résumé used different words for the same skills, the system missed you. Useful for scale, clumsy for understanding.

Then came rudimentary skills databases, slightly more context-aware matching, basic ranking, and early email automation. Recruiters finally had a single source of truth, even if that truth was rigid and literal.

The cloud and NLP era

In the 2010s, two shifts rewired the stack. First, the cloud made modern ATS accessible and integrated with the rest of the enterprise. Second, natural language processing let systems extract skills, seniority, tenure, and role context with far better accuracy. Parsing stopped being a game of exact match and started becoming pattern recognition.

The LLM and agentic turn

The current wave adds large language models and “agentic” patterns. Instead of one monolith, you orchestrate specialized AI agents: one extracts facts, one compares to role requirements, one drafts a summary, one flags risk, one recommends next steps. Retrieval-augmented generation (RAG) brings external knowledge (industry certifications, salary norms, compliance notes) into the evaluation. The work is modular, each step can be inspected on its own, and the whole flow runs far faster than manual review.

What AI can automate today (and where it shines)

Sourcing: surfacing more of the right people

AI excels at pattern-finding across messy, fragmented data. Modern sourcing tools scan internal CRMs, public profiles, portfolios, code repositories, and alumni lists, then infer likely fit. They cluster skills, infer seniority from career arcs, and spot adjacency—think “payments security engineer” from a path spanning fintech, crypto, and PCI projects.

Practical moves

- Define 3–5 “success patterns” per role using past hires, performance signals, and stack specifics.

- Weight skills and outcomes over titles. Let the model expand synonyms and adjacent skills.

- Cap daily prospect volume and require human review for final outreach lists.
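The three moves above can be sketched in a few lines. This is a minimal illustration, not a sourcing engine: the `SYNONYMS` map, the `SuccessPattern` class, the weights, and the prospect records are all hypothetical, and a real system would likely get synonym and adjacency expansion from a model or a curated skills taxonomy.

```python
from dataclasses import dataclass

# Hypothetical synonym map. In practice a model or a curated skills
# taxonomy would supply these expansions.
SYNONYMS = {"k8s": "kubernetes", "postgres": "postgresql", "js": "javascript"}


def normalize(skill: str) -> str:
    """Lowercase a skill name and map known synonyms to one canonical form."""
    key = skill.strip().lower()
    return SYNONYMS.get(key, key)


@dataclass
class SuccessPattern:
    name: str
    skill_weights: dict[str, float]  # canonical skill -> relative weight


def pattern_score(profile_skills: list[str], pattern: SuccessPattern) -> float:
    """Share of the pattern's total weight covered by the profile's skills.

    Job titles are deliberately not an input here.
    """
    have = {normalize(s) for s in profile_skills}
    total = sum(pattern.skill_weights.values())
    if total == 0:
        return 0.0
    matched = sum(w for skill, w in pattern.skill_weights.items() if skill in have)
    return matched / total


def review_queue(prospects: list[dict], pattern: SuccessPattern, daily_cap: int) -> list[dict]:
    """Rank prospects and cap the list. The result goes to a human reviewer, not to outreach."""
    ranked = sorted(prospects, key=lambda p: pattern_score(p["skills"], pattern), reverse=True)
    return ranked[:daily_cap]


pattern = SuccessPattern("payments-security", {"kubernetes": 2.0, "postgresql": 1.0, "pci": 1.0})
prospects = [
    {"id": "p1", "skills": ["K8s", "Postgres"]},
    {"id": "p2", "skills": ["PCI"]},
    {"id": "p3", "skills": ["Excel"]},
]
queue = review_queue(prospects, pattern, daily_cap=2)
print([p["id"] for p in queue])
```

The cap is applied after ranking, so lowering `daily_cap` trims the weakest matches first and leaves the reviewer with a list short enough to actually read.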

Screening: scale with structure, not shortcuts

LLM-based screeners can read hundreds of résumés in minutes, extract relevant signals, and produce structured summaries. Multi-agent flows keep the system honest: extraction is separate from evaluation; evaluation is separate from scoring; scoring is separate from recommendation. Each step is auditable.

Well-tuned systems can outperform keyword filters and reduce the false negatives that plague nontraditional candidates. Poorly tuned systems will recreate old bias at machine speed.

Practical moves

- Start with a narrow role family. Calibrate on 200–500 labeled examples to align on what “good” looks like.

- Separate minimum qualifiers (must-have) from differentiators (nice-to-have). Teach the system to penalize neither career breaks nor nonstandard titles.

- Log every decision artifact: extracted skills, evidence sentences, and criteria mapping. Make it easy to challenge and override.
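One way to picture the separation of steps is a small pipeline in which extraction, evaluation, scoring, and recommendation are distinct functions and every intermediate result lands in a single logged record. The sketch below is an assumption-laden illustration: the `Criterion` and `DecisionArtifact` classes, the 0.5 threshold, and the recommendation labels are invented for this example, and plain keyword matching stands in for whatever model call a real extractor would make.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class Criterion:
    name: str
    keywords: list[str]
    must_have: bool  # True = minimum qualifier, False = differentiator


@dataclass
class DecisionArtifact:
    candidate_id: str
    evidence: dict[str, list[str]] = field(default_factory=dict)  # criterion -> evidence sentences
    meets_minimum: bool = False
    differentiator_score: float = 0.0
    recommendation: str = ""


def extract(resume_text: str, criteria: list[Criterion]) -> dict[str, list[str]]:
    """Step 1: collect evidence sentences per criterion (keyword match stands in for a model)."""
    sentences = [s.strip() for s in resume_text.split(".") if s.strip()]
    return {
        c.name: [s for s in sentences if any(k.lower() in s.lower() for k in c.keywords)]
        for c in criteria
    }


def evaluate(evidence: dict[str, list[str]], criteria: list[Criterion]) -> bool:
    """Step 2: every must-have needs at least one evidence sentence."""
    return all(evidence[c.name] for c in criteria if c.must_have)


def score(evidence: dict[str, list[str]], criteria: list[Criterion]) -> float:
    """Step 3: fraction of differentiators with evidence. Gaps and titles are not inputs."""
    nice = [c for c in criteria if not c.must_have]
    if not nice:
        return 0.0
    return sum(1 for c in nice if evidence[c.name]) / len(nice)


def recommend(meets_minimum: bool, differentiator_score: float) -> str:
    """Step 4: every outcome routes to a person; nothing is auto-rejected."""
    if not meets_minimum:
        return "human_check_minimum_not_evidenced"
    return "advance_to_human_review" if differentiator_score >= 0.5 else "hold_for_human_review"


def screen(candidate_id: str, resume_text: str, criteria: list[Criterion]) -> DecisionArtifact:
    evidence = extract(resume_text, criteria)
    meets = evaluate(evidence, criteria)
    diff = score(evidence, criteria)
    return DecisionArtifact(candidate_id, evidence, meets, diff, recommend(meets, diff))


criteria = [
    Criterion("python", ["python"], must_have=True),
    Criterion("sql", ["sql", "postgres"], must_have=False),
    Criterion("leadership", ["led", "managed"], must_have=False),
]
artifact = screen("c-001", "Built data pipelines in Python. Tuned Postgres queries for reporting.", criteria)
print(json.dumps(asdict(artifact), indent=2))  # the logged, challengeable record
```

Because each step returns plain data, a reviewer can see which sentence satisfied which criterion and can dispute one step without rerunning the rest. Substring matching is crude (it would match "led" inside "handled"), which is a reminder that the extraction step is where most of the calibration effort goes.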

Scheduling and coordination: let robots herd calendars

Bots are great at high-friction logistics. They can propose times, juggle time zones, collect availability, send reminders, and handle rescheduling. They can also route candidates into the right interview loops based on role type and seniority.

Guardrail: keep a human alias or signature in the loop for accountability and tone. Automate the coordination, not the relationship.
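The time-zone juggling is the most mechanical part of coordination, so it makes a good small example. The sketch below assumes each party supplies one availability window as a pair of timezone-aware datetimes; the `common_slots` function and the sample windows are hypothetical, and fixed UTC offsets stand in for real zones to keep the example dependency-free.

```python
from datetime import datetime, timedelta, timezone


def common_slots(window_a, window_b, slot=timedelta(minutes=60)):
    """Return UTC start times of slots that fit inside both availability windows.

    Each window is a (start, end) pair of timezone-aware datetimes.
    """
    start = max(window_a[0], window_b[0]).astimezone(timezone.utc)
    end = min(window_a[1], window_b[1]).astimezone(timezone.utc)
    slots = []
    while start + slot <= end:
        slots.append(start)
        start += slot
    return slots


# Illustrative fixed offsets: UTC-5 for the candidate, UTC+0 for the interviewer.
candidate_tz = timezone(timedelta(hours=-5))
interviewer_tz = timezone(timedelta(hours=0))

candidate = (
    datetime(2024, 3, 5, 9, 0, tzinfo=candidate_tz),
    datetime(2024, 3, 5, 17, 0, tzinfo=candidate_tz),
)
interviewer = (
    datetime(2024, 3, 5, 9, 0, tzinfo=interviewer_tz),
    datetime(2024, 3, 5, 17, 0, tzinfo=interviewer_tz),
)

slots = common_slots(candidate, interviewer)
for s in slots:
    # Show each proposal in both parties' local time.
    print(
        s.astimezone(candidate_tz).strftime("%H:%M"), "candidate /",
        s.astimezone(interviewer_tz).strftime("%H:%M"), "interviewer",
    )
```

A production scheduler would more likely use named IANA zones (for example through Python's `zoneinfo` module) so daylight-saving changes are handled correctly, and the message that carries the proposed times would still go out under a human alias.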

Assessments: skills first, profile second

Wherever you can test actual skills, do it. Code exercises, take-home briefs, structured case prompts, and job simulations reduce reliance on pedigree. AI can generate balanced question sets, randomize variants, and auto-score objective parts while leaving subjective sections to humans with rubrics.
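As a rough sketch of "randomize variants, auto-score the objective parts, leave the rest to humans", consider the following. The question bank, variant ids, and answer key are invented for illustration, and the seeding scheme is one possible design, not a prescribed one.

```python
import random


def assign_variants(question_bank: dict[str, list[str]], candidate_id: str, assessment_id: str) -> dict[str, str]:
    """Pick one variant per question, seeded so the same candidate always gets the same set."""
    rng = random.Random(f"{assessment_id}:{candidate_id}")
    return {qid: rng.choice(variants) for qid, variants in question_bank.items()}


def score_submission(answers: dict[str, str], answer_key: dict[str, str]) -> dict:
    """Auto-score only items that have a keyed answer; everything else goes to a human rubric."""
    objective = [vid for vid in answers if vid in answer_key]
    correct = sum(1 for vid in objective if answers[vid] == answer_key[vid])
    needs_human = sorted(vid for vid in answers if vid not in answer_key)
    return {
        "objective_correct": correct,
        "objective_total": len(objective),
        "needs_human_rubric": needs_human,
    }


# Hypothetical bank: two variants per objective question, keyed by variant id.
question_bank = {"q1": ["q1-a", "q1-b"], "q2": ["q2-a", "q2-b"]}
answer_key = {"q1-a": "O(n)", "q1-b": "O(log n)", "q2-a": "200", "q2-b": "404"}

assigned = assign_variants(question_bank, candidate_id="c-001", assessment_id="backend-2024")
answers = {"q1-b": "O(log n)", "q2-a": "500", "design-brief": "Free-text answer..."}
result = score_submission(answers, answer_key)
print(assigned)
print(result)
```

Seeding on the candidate and assessment ids keeps assignments reproducible for audits while still spreading variants across the pool. The free-text item is never scored here; it only appears in the list handed to human reviewers.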

For roles with voice or video components, be cautious. Automated analysis of speech or facial cues is still noisy and controversial. Use it, if at all, only as an optional signal with explicit consent.

Candidate communications: clarity at scale

AI can draft consistent, empathetic updates: confirmation notes, status nudges, expectation-setting messages, and honest rejections. Candidates would rather know where they stand than hear nothing. Templates plus light personalization beat silence every time.

Give the system a tone guide and a do-not-say list. Always offer a human contact for escalations.

Compliance, documentation, and analytics

From EEOC log prep to requisition hygiene to interview feedback normalization, AI can keep the paper trail clean. It can summarize panel feedback, spot missing forms, flag SLA breaches, and compile dashboards that show bottlenecks and quality of hire trends. Think of it as a meticulous operations partner that never sleeps.

What AI can’t (or shouldn’t) automate

Context, intent, and judgment

Humans tell stories with gaps. A résumé is a compressed narrative: two lines for a two-year pivot; three bullets for a hard-won turnaround. AI can extract facts but struggles with intent. It will not know that a lateral move was a caregiving choice, that a two-month gap was a layoff after a merger, or that a title hides a scope far beyond its pay grade.

When stakes are high, judgment must be human. Use AI to assemble evidence; use people to weigh it.

Trust and the candidate relationship

Great recruiters do more than move stages. They explain trade-offs, read the room, and build safety so candidates tell the truth about offers, constraints, and ambitions. Over-automate and you flatten the interaction into a ticketing system. You will get faster “process,” and worse decisions.

A useful mental model: automate the mechanics, humanize the moments.

Fairness, bias, and the long tail of edge cases

Bias creeps in through data, proxies, and the features we choose to value. Historical hiring data often reflects past inequities. Résumé parsers may penalize nontraditional schooling or military experience. Voice analysis misfires on accents and speech differences. Even well-meaning systems can overcorrect or undercorrect by group.

The remedy is not magical. It is governance: pre-deployment tests, ongoing audits, explainability, and clear override paths. Anything less is theater.

Culture, leadership signals, and team dynamics

No model can infer whether a decisive, scrappy engineering leader will mesh with a highly regulated fintech culture. It cannot spot the quiet glue person who lifts a team through conflict. Those signals arrive in stories, references, and live interactions. They are messy and human on purpose.

Regulation, consent, and explainability

If a candidate asks, “Why was I rejected?”, you need an answer that maps to job-relevant criteria. That means traceable features, lawful basis for processing, and explicit disclosures for automated decisions. Some regions require opt-outs or human review on request. Build for the strictest regime you operate in; it’s good hygiene everywhere.

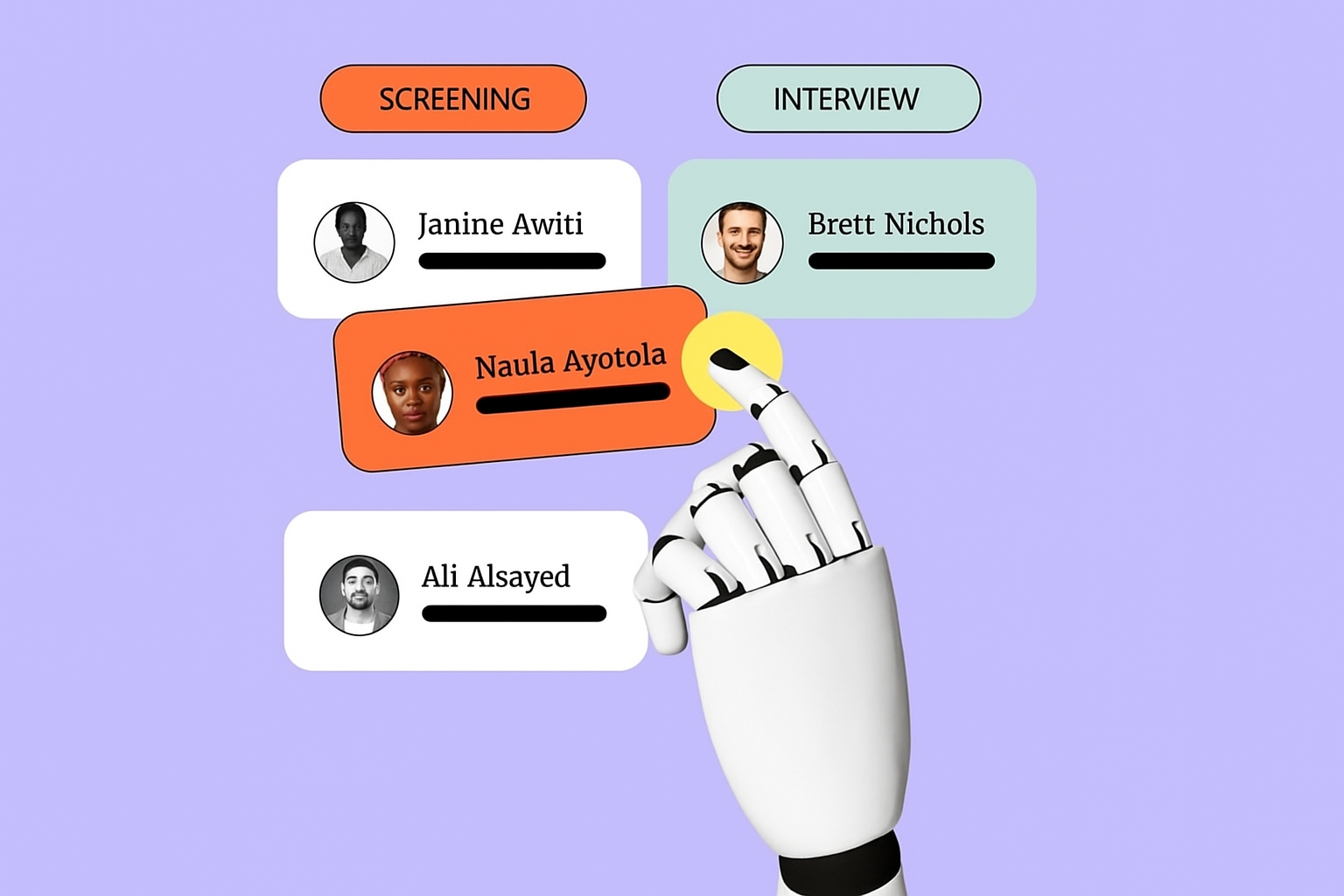

The funnel, stage by stage: what to automate, what to keep human

1) Attraction

Automate confidently

- Market intelligence on talent supply, competitor hiring velocity, and salary bands.

- Dynamic job description variants that emphasize skills and outcomes.

- A/B testing of posting titles and summaries for clarity and inclusivity.

Automate with care

- Personalization of employer brand content based on candidate segments. Keep messaging honest; avoid creepy targeting.

Keep human-led

- Role framing with the hiring team. Alignment on scope, outcomes, and success metrics.

2) Sourcing

Automate confidently

- Multi-channel search across internal/external sources with skill inference.

- De-duplication, enrichment, and diversity-aware slate composition.

Automate with care

- Automated outreach drafts. Require reviewer approval, and throttle volume.

Keep human-led

- Final prospect lists and outreach strategy. Quality over quantity.

3) Screening

Automate confidently

- Extraction of skills, tenure, industries, and key evidence from résumés.

- Rule-based disqualifiers that are job-relevant and lawful.

Automate with care

- Model-generated fit scores. Use as a starting point, never a verdict.

Keep human-led

- Edge cases, nontraditional paths, and “why now?” context checks.

4) Assessments and interviews

Automate confidently

- Logistics, reminders, structured question banks, and objective scoring for coding/tests.

Automate with care

- AI-assisted note taking and summarization. Always allow interviewers to edit and own the record.

Keep human-led

- Behavioral interviews, team panel debriefs, culture add discussions, and final hiring decisions.

5) Offers

Automate confidently

- Offer letter generation, comp benchmarks, and approvals routing.

Automate with care

- Scenario modeling for equity/cash mixes. Present ranges; humans decide trade-offs.

Keep human-led

- Negotiation, closing, and risk-reduction conversations.

6) Onboarding

Automate confidently

- Paperwork, equipment provisioning, access setup, and day-one schedules.

Automate with care

- Personalized learning paths. Calibrate with manager input.

Keep human-led

- Welcome rituals, first-week expectations, and community building.

Design principles and guardrails that actually work

Start with outcomes, not features

Automating a broken process just moves the mess faster. Begin with the end in mind: better hires, faster time-to-fill, improved candidate experience, and measurable diversity outcomes. Only then choose tools and automations that move those needles.

Human-in-the-loop by design

Treat AI as an advisor. Require humans to confirm, correct, or override at key checkpoints. Capture those overrides as feedback to retrain the system. Over time, the loop gets sharper; trust increases because people see their judgment reflected back.
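A minimal sketch of that checkpoint follows, assuming each review is stored alongside the AI's suggestion. The `Review` record, its field names, and the decision labels are hypothetical; the point is only that an override is data you keep, not an exception you discard.

```python
from dataclasses import dataclass


@dataclass
class Review:
    candidate_id: str
    ai_recommendation: str
    human_decision: str
    reviewer: str
    reason: str = ""

    @property
    def is_override(self) -> bool:
        """True when the human decision differs from what the AI suggested."""
        return self.ai_recommendation != self.human_decision


def override_rate(reviews: list[Review]) -> float:
    """Share of reviews where the human disagreed with the AI."""
    if not reviews:
        return 0.0
    return sum(r.is_override for r in reviews) / len(reviews)


def feedback_examples(reviews: list[Review]) -> list[dict]:
    """Overrides with a stated reason, shaped as examples for the next calibration round."""
    return [
        {"candidate_id": r.candidate_id, "label": r.human_decision, "reason": r.reason}
        for r in reviews
        if r.is_override and r.reason
    ]


reviews = [
    Review("c-001", "advance", "advance", "dana"),
    Review("c-002", "hold", "advance", "dana", "Career break explained; skills are current."),
    Review("c-003", "advance", "hold", "lee", "Evidence sentence was from a course, not a job."),
    Review("c-004", "hold", "hold", "lee"),
]
print(override_rate(reviews))
print(feedback_examples(reviews))
```

Tracking the override rate over time gives a simple signal: a rising rate suggests the system is drifting away from reviewer judgment, while a rate near zero may mean reviewers have stopped questioning it.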

Observable systems beat black boxes

Insist on evidence trails. For every recommendation, store what was extracted, how it was weighed, and which criteria triggered which score. Put that evidence next to the decision so reviewers can agree, disagree, or ask better questions. Observability is how you earn the right to automate more.

Fairness isn’t a one-time checkbox

Bias creeps. Build a cadence: pre-deployment bias and privacy reviews; monthly fairness drift checks; quarterly model refreshes; annual third-party audits. Publish a short transparency note for candidates explaining how automation is used and how to request human review.

Data minimization and consent

Collect the minimum data needed for the decision, keep it only as long as needed, and be explicit about consent—especially for audio, video, and external data enrichment. Privacy-safe defaults make your program resilient across jurisdictions.

Metrics that matter

Track beyond speed. Pair time-to-hire with quality-of-hire, pass-through rates by demographic, offer acceptance, first-year retention, and candidate NPS. If automation improves speed but erodes trust or equity, it’s not a win.
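Pass-through rates by group are straightforward to compute once stage outcomes are logged. The sketch below also compares each group's rate with the highest group's rate and flags ratios under 0.8. That threshold is borrowed from the commonly cited "four-fifths" screening heuristic rather than from this article, and a flag is a prompt to investigate, not a legal finding. The group labels and counts are made up.

```python
from collections import Counter


def pass_through_rates(records):
    """records: iterable of (group, advanced) pairs for one funnel stage."""
    entered, advanced = Counter(), Counter()
    for group, did_advance in records:
        entered[group] += 1
        if did_advance:
            advanced[group] += 1
    return {group: advanced[group] / entered[group] for group in entered}


def impact_ratios(rates):
    """Each group's rate divided by the highest group's rate."""
    top = max(rates.values())
    if top == 0:
        return {group: 0.0 for group in rates}
    return {group: rate / top for group, rate in rates.items()}


def flag_for_review(ratios, threshold=0.8):
    """Groups whose ratio falls below the threshold (0.8 is an assumed default)."""
    return sorted(group for group, ratio in ratios.items() if ratio < threshold)


# Made-up stage data: 10 candidates per group.
records = (
    [("group_a", True)] * 5 + [("group_a", False)] * 5
    + [("group_b", True)] * 3 + [("group_b", False)] * 7
)
rates = pass_through_rates(records)
ratios = impact_ratios(rates)
flagged = flag_for_review(ratios)
print(rates, ratios, flagged)
```

With samples this small the ratios are noisy, so a real dashboard would pair them with counts and confidence intervals before anyone draws conclusions.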

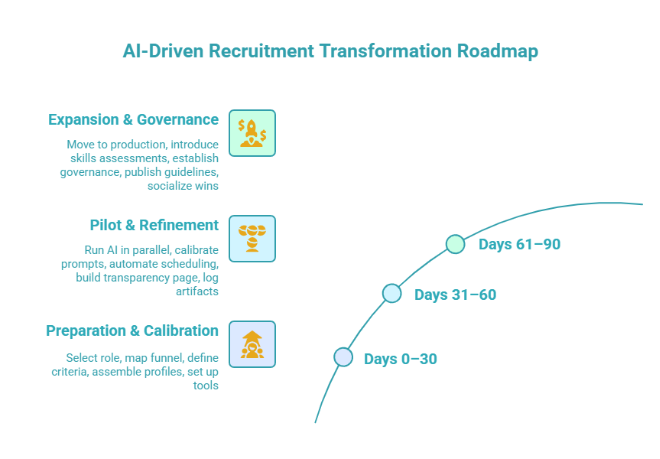

A pragmatic 90-day implementation plan

Days 0–30: prepare and calibrate

- Pick one high-volume role family with clear success signals.

- Map the current funnel: where time is lost, where candidates drop, where bias might creep.

- Define evaluation criteria with hiring managers. Separate must-haves from nice-to-haves.

- Assemble 300 labeled profiles and annotate why each one received its label.

- Stand up sandbox tools for sourcing, screening, and scheduling.

Days 31–60: pilot with humans in control

- Run AI sourcing and screening in parallel with current process. Compare slates and outcomes.

- Hold weekly calibration sessions. Tune prompts and rules.

- Add scheduling automation. Keep a human alias on every message.

- Create a candidate transparency page.

- Start logging decision artifacts and building dashboards.

Days 61–90: expand and harden

- Move calibrated flows into production.

- Introduce skills-first assessments where feasible.

- Establish governance rhythms: monthly drift checks, quarterly re-training, annual audit.

- Publish internal guidelines: what to automate, what to keep human, who is accountable.

- Socialize wins and learnings. Invite feedback.

Selecting tools without getting burned

Look past the demo

Demos are designed to dazzle. Ask to run your own data through the system. Verify extraction accuracy, evidence trails, and override UX. If you cannot see why a candidate scored a certain way, pass.

Demand configuration, not customization

You want configurable components—criteria weightings, prompt templates, evidence displays—not a bespoke build you cannot maintain. The more special-case code, the less adaptable your program becomes.

Evaluate the vendor’s governance posture

Ask for privacy documentation, model update cadence, audit logs, and fairness testing methods. Ask how they handle consent and deletion requests. You are buying a process, not just a product.

Plan for coexistence

Your ATS is not going away. Favor tools that play nicely with it via APIs and webhooks. Orchestrate with middleware so you can swap components without ripping the spine out of your stack.
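The "swap components without ripping out the spine" idea amounts to coding the middleware against an interface rather than a vendor. Here is a minimal sketch under that assumption. The `Screener` protocol, both screener classes, and the in-memory `ats_records` dict are hypothetical stand-ins; a real integration would call the ATS vendor's documented API or receive its webhooks.

```python
from typing import Callable, Protocol


class Screener(Protocol):
    """Anything with a screen() method can be plugged in."""

    def screen(self, resume_text: str) -> dict: ...


class KeywordScreener:
    def __init__(self, keywords: list[str]):
        self.keywords = [k.lower() for k in keywords]

    def screen(self, resume_text: str) -> dict:
        text = resume_text.lower()
        return {"engine": "keyword", "matched": [k for k in self.keywords if k in text]}


class StubModelScreener:
    """Placeholder for a vendor or model-backed screener; no model is attached here."""

    def screen(self, resume_text: str) -> dict:
        return {"engine": "stub-model", "matched": []}


class Middleware:
    """Sits between the screener and the ATS, so either side can be replaced."""

    def __init__(self, screener: Screener, write_to_ats: Callable[[str, dict], None]):
        self.screener = screener
        self.write_to_ats = write_to_ats

    def handle_application(self, candidate_id: str, resume_text: str) -> dict:
        result = self.screener.screen(resume_text)
        self.write_to_ats(candidate_id, result)
        return result


ats_records: dict[str, dict] = {}  # stands in for the ATS system of record


def write_to_ats(candidate_id: str, result: dict) -> None:
    ats_records[candidate_id] = result


Middleware(KeywordScreener(["python"]), write_to_ats).handle_application("c-001", "Python developer")
Middleware(StubModelScreener(), write_to_ats).handle_application("c-002", "Python developer")
print(ats_records)
```

Swapping the screener is a one-argument change, and the ATS write path stays untouched. That is the property to look for when a vendor describes their integration story.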

The near future: toward agentic hiring

We’re moving from tools that assist to systems that coordinate. In agentic hiring, multiple AI workers handle sub-tasks—draft a JD variant, source a slate, summarize evidence, propose interview loops, prepare an offer scenario—and hand off to humans for the moments that carry meaning. It’s not hands-off; it’s hands-wise.

The prize is not full automation. It’s precision: fewer wasted cycles, more inclusive slates, clearer evidence, and better conversations. When the machine handles the mechanics, people can do the profoundly human work of judgment and trust-building.

Closing takeaway

Automate the parts of recruiting that behave like operations: data collection, pattern matching at scale, logistics, documentation, and analytics. Keep human hands on the parts that behave like leadership: judgment, values, negotiation, and care. Build observable, fair systems that people can question and improve. If you do that, you will hire faster—and better—without losing your soul in the process.
