AI Recruiting Tools: Progress, Promise, and the Path Forward

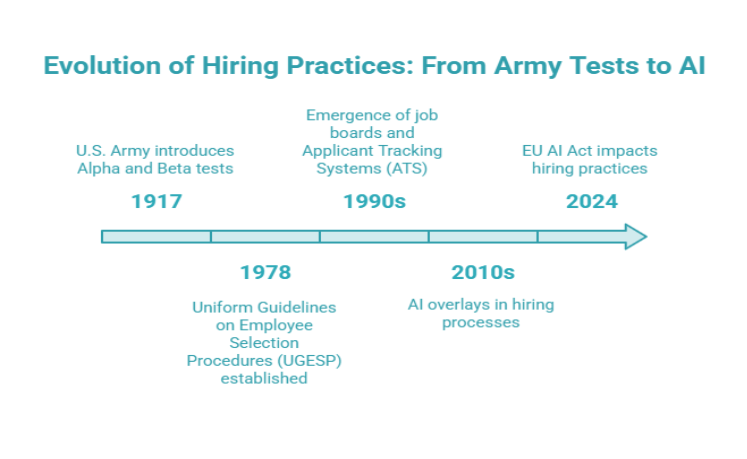

Every decade in hiring comes with its own miracle cure. In the early 1900s, it was psychometric testing — an attempt to quantify human potential. By the 1970s, regulation took the lead, with fairness and accountability written into law. By the 1990s, the internet created a flood of applicants, and the Applicant Tracking System (ATS) emerged to manage the chaos. Then came the 2010s — the decade when AI promised to finally make recruiting intelligent. Vendors touted algorithms that could assess potential, remove bias, and cut hiring time in half. The age of AI recruiting tools was born.

But peel back the glossy dashboards, and most AI recruiting tools still run on the same logic as the applicant tracking systems they were meant to replace. They parse résumés, extract keywords, score candidates against job descriptions, and rank them in lists. They are faster, sleeker, and more automated, but fundamentally they’re still filters.

The problem isn’t the existence of filters. At scale, filtering is inevitable. The problem is that filters have become the ceiling of ambition. Instead of redefining how talent is measured, most systems simply automate old proxies: degrees, job titles, and keywords. The result is predictable — capable candidates are overlooked, bias gets replicated at scale, and compliance risk grows just as governments tighten regulation on AI in the hiring process.

This piece takes the long view: a century of hiring evolution from psychometrics to automation, the growing evidence that filters miss talent, the regulatory landscape redefining what’s acceptable, and the higher standard that business leaders should demand. Because if recruiting is truly about finding people who can do the work, tools built only to sort résumés will never be enough.

Part I: The Long Road to Filters

Early Psychology Meets Hiring

The story begins in 1917, when the U.S. Army introduced the Alpha and Beta intelligence tests to screen more than 1.7 million World War I recruits. These assessments normalized the idea that human ability could be quantified at scale. Civilian employers adopted the template, and by the mid-20th century, personnel psychology had become a discipline of its own—mixing aptitude tests, cognitive measures, and structured evaluations to bring science into hiring.

The lesson that endured wasn’t about nuance; it was about throughput. If you could evaluate thousands quickly, precision became secondary. That efficiency-first mindset carried straight through to today’s AI recruiting tools: systems engineered to handle scale rather than understand capability.

Law Codifies Accountability

By the 1970s, lawsuits and civil-rights movements forced regulators to draw new boundaries. The Uniform Guidelines on Employee Selection Procedures (UGESP, 1978) established the ground rules:

- Employers had to validate any selection procedure that produced adverse impact.

- They were expected to monitor adverse impact, using the four-fifths rule as a rule of thumb.

- They had to document methods and justify outcomes.

For the first time, U.S. law treated selection tools as high-stakes decision systems. If a mechanism influenced employment outcomes, it had to prove relevance and fairness. That principle still anchors today’s oversight of AI recruiting tools, linking compliance and transparency directly to their design.
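The four-fifths rule mentioned above is simple arithmetic: divide each group’s selection rate by the highest group’s rate, and treat a ratio below 0.8 as a signal of possible adverse impact. A minimal sketch, with hypothetical group labels and counts; a real adverse-impact analysis would also weigh sample sizes and statistical significance.

```python
from typing import Dict, Tuple


def four_fifths_check(
    counts: Dict[str, Tuple[int, int]], threshold: float = 0.8
) -> Dict[str, Tuple[float, bool]]:
    """For each group, return (impact ratio, flagged).

    counts maps a group label to (applicants, selected). The impact ratio is the
    group's selection rate divided by the highest group's selection rate; a ratio
    below the threshold (four-fifths by default) is flagged.
    """
    rates = {group: selected / applicants for group, (applicants, selected) in counts.items()}
    highest = max(rates.values())
    if highest == 0:
        raise ValueError("no group had any selections")
    return {group: (rate / highest, rate / highest < threshold) for group, rate in rates.items()}


if __name__ == "__main__":
    # Hypothetical counts: 60 of 200 selected vs. 27 of 150 selected.
    example = {"group_a": (200, 60), "group_b": (150, 27)}
    for group, (ratio, flagged) in four_fifths_check(example).items():
        print(f"{group}: impact ratio {ratio:.2f}, flagged: {flagged}")
```

In this example, group_b’s selection rate (18%) is 60% of group_a’s (30%), well under the four-fifths line.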

Job Boards and the ATS Flood

The 1990s brought the internet—and with it, a tsunami of résumés. Job boards like Monster (1994) and CareerBuilder (1995) opened global access. Recruiters were soon overwhelmed by volume.

The Applicant Tracking System (ATS) became the default solution. It wasn’t built to predict performance; it was built to organize chaos. Its core was efficiency: intake résumés, parse text, match keywords to job descriptions, rank by scores, and route to recruiters. This architecture entrenched a logic of filtering—the belief that reducing noise equaled quality. It set the template for today’s AI recruitment challenges, where speed is often mistaken for science.

AI Overlays in the 2010s

When AI hype accelerated in the 2010s, vendors layered machine learning and natural-language processing on top of ATS workflows. Résumé scorers, semantic search, chatbots, and automated rankers promised sophistication. But beneath the surface, the mechanism stayed the same: parse, match, score, rank.

The result was not a revolution but a refinement. The first generation of AI recruiting tools simply ran the old system faster, perpetuating the very problems they claimed to solve: tools designed to filter, not to understand.

Part II: What Fancy Filters Really Do

Most “AI recruiting” systems today can be reduced to a familiar four-step loop:

- Intake: Résumés are ingested into a system.

- Parse: Fields and keywords are extracted.

- Match: Candidate data is compared to job descriptions.

- Rank: Candidates are scored and shortlisted.

It looks intelligent, but the logic hasn’t evolved much beyond Boolean search. Machine learning may have modernized the interface, but not the thinking. Beneath the automation lies a persistent bias in AI recruitment — one that reflects data patterns rather than human potential.
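The four-step loop above fits in a few lines of code, which is part of the point. The sketch below is a deliberately simplified, hypothetical illustration rather than any vendor’s implementation: the tokenizer, the keyword-overlap score, and the names `parse`, `match`, and `rank` are all assumptions.

```python
import re
from dataclasses import dataclass
from typing import List, Set, Tuple


@dataclass
class Candidate:
    name: str
    resume_text: str  # intake: raw résumé text as received


def parse(resume_text: str) -> Set[str]:
    """Parse: lowercase the text and pull out word-like tokens."""
    return set(re.findall(r"[a-z0-9+#]+", resume_text.lower()))


def match(tokens: Set[str], job_keywords: Set[str]) -> float:
    """Match: share of the (lowercase) job keywords found in the résumé."""
    if not job_keywords:
        return 0.0
    return len(tokens & job_keywords) / len(job_keywords)


def rank(candidates: List[Candidate], job_keywords: Set[str], top_n: int) -> List[Tuple[str, float]]:
    """Rank: score every candidate, sort high to low, keep the shortlist."""
    scored = [(c.name, match(parse(c.resume_text), job_keywords)) for c in candidates]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_n]


if __name__ == "__main__":
    pool = [
        Candidate("Ana", "Built Python and SQL dashboards in Tableau"),
        Candidate("Ben", "Analyzed hospital data with pandas and R"),
    ]
    print(rank(pool, {"python", "sql", "tableau"}, top_n=2))
```

Ben scores zero even though pandas is a Python data library. The keyword list never mentions it, and nothing in the loop asks what he can actually do.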

Harvard Business School and Accenture estimate that 75% of U.S. employers now rely on automated screening, and in Fortune 500 companies, it’s nearly universal. Yet ubiquity doesn’t equal accuracy. These systems move résumés faster, but they don’t necessarily identify better hires.

The problem runs deeper: AI recruiting tools inherit the same flaws as the systems they replaced. They replicate bias, reinforce rigid definitions of “qualified,” and mask exclusion beneath a veneer of efficiency.

When bias is coded into automation, it scales silently. Candidates never see why they were rejected; recruiters never know who was excluded. And that’s the paradox — tools built to remove bias have instead perfected its invisibility.

Part III: The Business Problem Filters Create

The Hidden Worker Crisis

Harvard Business School and Accenture estimate that more than 27 million U.S. workers are “hidden workers”: people who want to work but are screened out or overlooked by hiring processes that lean on automated filters. They include caregivers with résumé gaps, veterans with adjacent skills, and immigrants with unconventional credentials.

The exclusion isn’t deliberate; it’s structural. Filters reward perfect keyword alignment instead of demonstrated ability. Scaled up by automation, that flaw keeps qualified candidates from ever reaching a recruiter’s desk. Automation doesn’t just miss talent; it systematically erases it.

Lost Diversity, Slower Hiring

The costs are tangible.

- Reduced diversity. Filters replicate conformity. Homogeneous teams limit innovation.

- Longer time-to-fill. Narrowed funnels lead to recurring vacancies.

- Candidate distrust. Applicants see the process as a black box and disengage.

What began as efficiency has become an equilibrium of exclusion. The more organizations rely on algorithmic filtering, the more they reinforce AI hiring bias—not as prejudice, but as process.

Until hiring systems shift from exclusion to evaluation, from keywords to capability, companies will continue to pay for tools that optimize speed instead of outcomes. And every unmeasured skill left behind is a competitive advantage someone else will find first.

Part IV: What Predicts Performance (and What Doesn’t)

A Century of Meta-Analysis

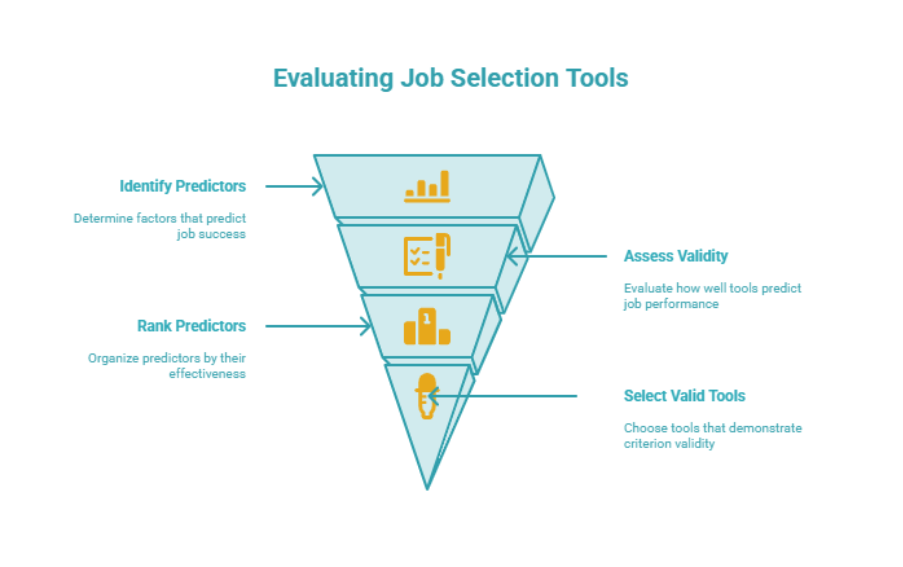

Decades of research make one thing clear: résumé filters are weak predictors of job success.

- Schmidt & Hunter (1998): General mental ability, work samples, structured interviews, and integrity tests are top predictors. Résumé-based proxies such as years of education and years of job experience rank far lower.

- Schmidt, Oh, & Shaffer (2016 update): The validity hierarchy was largely reaffirmed. Structured interviews remain strong; work samples were revised downward but remain useful; credentials remain weak.

- Roth et al. (2005): Work samples retain meaningful predictive validity, though lower than earlier estimates suggested.

The implication is clear. Unless a system can demonstrate criterion validity — a measurable correlation between its scores and actual job performance — it isn’t a selection instrument. It’s a sorting tool.

And that distinction matters. Many AI recruiting tools advertise precision, but without validated predictors, their recommendations are just scaled assumptions. They automate pattern recognition, not performance forecasting.

The evidence has been consistent for decades: the best predictors of success are work samples, structured interviews, and cognitive ability. The weakest are proxies, the same proxies that filters, both manual and automated, continue to prize.
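Criterion validity is usually reported as a correlation between a tool’s scores and a later measure of job performance. A minimal sketch of the Pearson correlation, assuming you have each hire’s original tool score paired with a performance rating (the function name and the example data are illustrative):

```python
import math
from typing import Sequence


def pearson_r(scores: Sequence[float], performance: Sequence[float]) -> float:
    """Pearson correlation between tool scores and later job-performance ratings."""
    if len(scores) != len(performance) or len(scores) < 2:
        raise ValueError("need two equal-length sequences with at least two points")
    n = len(scores)
    mean_s = sum(scores) / n
    mean_p = sum(performance) / n
    cov = sum((s - mean_s) * (p - mean_p) for s, p in zip(scores, performance))
    var_s = sum((s - mean_s) ** 2 for s in scores)
    var_p = sum((p - mean_p) ** 2 for p in performance)
    if var_s == 0 or var_p == 0:
        raise ValueError("correlation is undefined when either sequence is constant")
    return cov / math.sqrt(var_s * var_p)


if __name__ == "__main__":
    # Hypothetical data: screening scores and one-year performance ratings for five hires.
    tool_scores = [55, 62, 70, 78, 85]
    ratings = [2.9, 3.1, 3.0, 3.6, 3.8]
    print(f"criterion validity r = {pearson_r(tool_scores, ratings):.2f}")
```

Two caveats apply. Performance data exist only for people who were hired, which restricts the range of scores and typically depresses the observed correlation, and a small sample can produce a large coefficient by chance. Formal validation studies account for both.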

Part V: When “AI Scoring” Becomes Pseudoscience

The Amazon Case (2018)

Amazon trained an internal résumé scorer on a decade of past hiring decisions. Because most prior hires were male, the system learned to penalize résumés containing the word “women’s,” as in “women’s chess club captain.” The project was abandoned, exposing how easily bias can be baked into AI recruiting tools.

HireVue’s Facial Analysis (2021)

HireVue claimed its video-interview AI could read employability from facial cues. Researchers disputed the science; regulators raised privacy concerns. Under pressure, the company dropped facial analysis entirely.

Text-Based Personality Prediction (2022)

Audits of personality-prediction tools such as Humantic AI and Crystal found that trivial changes to the same résumé could shift the predicted profile. The models were unstable, and an unstable score cannot be a valid one.

Across these cases, the pattern is clear: layering “AI scoring” on top of filters doesn’t create insight—it multiplies error. Without proof of validity, automation turns selection into pseudoscience. Until vendors demonstrate that algorithms measure real capability, AI recruiting tools remain faster ways to make the same old mistakes.
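The stability problem found in the personality-prediction audits can be probed with a simple perturbation test: score a résumé, apply edits that should not matter, and measure how far the score moves. This is a hypothetical sketch, not the audits’ actual protocol; `score` stands in for whatever tool is being tested, and the list of variants is an illustrative assumption.

```python
from typing import Callable, List

Scorer = Callable[[str], float]  # hypothetical: wraps the tool under audit


def trivial_variants(resume_text: str) -> List[str]:
    """Edits that should not change how a candidate is assessed."""
    return [
        resume_text + "\n",                             # trailing newline
        resume_text.replace(" ", "  "),                 # doubled spaces
        resume_text.upper(),                            # all caps
        "\n".join(reversed(resume_text.splitlines())),  # sections in reverse order
    ]


def max_score_shift(score: Scorer, resume_text: str) -> float:
    """Largest absolute score change across the trivial variants."""
    baseline = score(resume_text)
    return max(abs(score(variant) - baseline) for variant in trivial_variants(resume_text))


if __name__ == "__main__":
    resume = "Python developer\nSQL reporting"

    def fragile(text: str) -> float:
        return text.count("Python") / 10  # case-sensitive, so all caps changes the score

    def robust(text: str) -> float:
        return text.lower().count("python") / 10

    print(max_score_shift(fragile, resume), max_score_shift(robust, resume))
```

A tool whose score moves when only the casing or line order changes is measuring formatting, not the candidate.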

Part VI: Candidate and Recruiter Realities

Candidate Alienation

For many applicants, automation has made hiring feel impersonal. Rejections arrive without feedback, and speech recognition in video interviews often mis-transcribes non-native accents and speech affected by disabilities. Studies have reported transcription error rates as high as 22 percent.

These flaws expose blind spots in the ethics of AI in recruitment. Technology that overlooks accessibility doesn’t remove bias; it scales it. Candidates begin to doubt both outcomes and fairness.
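Transcription error rates like the one above are conventionally reported as word error rate (WER): the minimum number of word substitutions, deletions, and insertions needed to turn a machine transcript into a human reference transcript, divided by the number of words in the reference. A minimal sketch of that standard definition, with invented example sentences and case-insensitive comparison as an assumed normalization:

```python
from typing import List


def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / number of reference words."""
    # Case-insensitive comparison is a normalization choice, not part of the definition.
    ref: List[str] = reference.lower().split()
    hyp: List[str] = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript must contain at least one word")
    # Row i of the edit-distance table: cost of turning ref[:i] into hyp[:j] for each j.
    prev = list(range(len(hyp) + 1))
    for i in range(1, len(ref) + 1):
        curr = [i] + [0] * len(hyp)
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            curr[j] = min(
                prev[j] + 1,         # deletion: reference word missing from transcript
                curr[j - 1] + 1,     # insertion: extra word in transcript
                prev[j - 1] + cost,  # substitution (or match when cost is 0)
            )
        prev = curr
    return prev[-1] / len(ref)


if __name__ == "__main__":
    print(word_error_rate("I led the data migration project", "I let the data migration"))
```

Computing WER separately for each accent or speech profile in an audit sample is one way to check whether transcription errors fall unevenly on some candidates.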

Recruiter Skepticism

Recruiters face their own fatigue. Parsing errors distort résumés, strong candidates disappear, and “shortlists” need manual correction. Many AI recruiting tools add rework instead of removing it.

Ethical recruitment depends on trust. Until systems balance fairness with accuracy, the ethics of AI in recruitment will remain unresolved.

Part VII: Regulatory and Legal Reality

UGESP (1978)

Baseline law requiring validation, adverse impact analysis, and documentation. Still applicable today.

EEOC (2023)

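Clarified, in May 2023 technical assistance, that Title VII’s disparate-impact rules apply to algorithmic selection tools and that employers can be held responsible even when a vendor designed the tool. The “vendor did it” defense doesn’t hold.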

NYC Local Law 144 (2023)

Requires annual bias audits, public disclosure of audit results, and candidate notices before use of automated tools.
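For tools that produce scores rather than pass/fail decisions, the city’s implementing rules describe a “scoring rate”: the share of a demographic category scoring above the median of the whole sample. Each category’s scoring rate is divided by the highest category’s rate to give an impact ratio. A minimal sketch of that calculation with made-up categories and scores; confirm the current rule text and your auditor’s method before relying on it.

```python
from statistics import median
from typing import Dict, List


def scoring_rate_impact_ratios(scores_by_category: Dict[str, List[float]]) -> Dict[str, float]:
    """Impact ratio per category for a tool that outputs scores.

    Scoring rate: share of a category's members scoring above the median of the
    whole sample. Impact ratio: a category's scoring rate divided by the highest
    category's scoring rate.
    """
    if any(len(scores) == 0 for scores in scores_by_category.values()):
        raise ValueError("every category needs at least one score")
    cutoff = median(s for scores in scores_by_category.values() for s in scores)
    rates = {
        category: sum(s > cutoff for s in scores) / len(scores)
        for category, scores in scores_by_category.items()
    }
    best = max(rates.values())
    if best == 0:
        raise ValueError("no category has anyone scoring above the sample median")
    return {category: rate / best for category, rate in rates.items()}


if __name__ == "__main__":
    # Hypothetical audit sample: tool scores grouped by demographic category.
    sample = {"category_a": [70, 80, 90, 60], "category_b": [50, 65, 85, 55]}
    for category, ratio in scoring_rate_impact_ratios(sample).items():
        print(f"{category}: impact ratio {ratio:.2f}")
```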

EU AI Act (2024)

Classifies recruitment AI as “high-risk.” Requires:

- Documented risk management.

- Data governance.

- Human oversight.

- Post-market monitoring.

- Transparency obligations.

NIST AI RMF (2023)

Provides a best-practice governance model built on four functions: Govern, Map, Measure, and Manage. Govern is cross-cutting and informs the other three.

For enterprise leaders, this means compliance isn’t optional. It’s survival.

Part VIII: The Bias Taxonomy

Bias in AI hiring is layered, not singular.

- Demographic Bias: In one study, AI résumé screening favored white-associated names 85% of the time. GPT-4 has also been shown to penalize disability-related résumé content.

- Structural Bias: Résumé gaps or unconventional titles penalize candidates disproportionately.

- Algorithmic Echo Bias: LLM-based hiring tools favor résumés generated by similar models, giving them a 23–60% shortlist advantage.

- Intersectional Bias: Race, gender, socioeconomic status, and origin combine, amplifying disadvantage.

- Governance Gap: Fairness isn’t static. Preventing bias demands continuous audits, transparent documentation, and human oversight. Without them, AI recruiting tools risk automating inequality instead of reducing it.

Part IX: What “More” Looks Like

Skills-First Hiring

Define six to eight minimum skills per role. Then flip from filtering out to filtering in: advance anyone who can demonstrate those skills, however they acquired them.
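One way to read “filter-in” in code: advance every applicant who shows evidence for each minimum skill, whatever its source, and never consult credentials. The sketch below is a hypothetical illustration; the `Applicant` structure, the skill names, and the evidence labels are assumptions, and it uses three skills rather than six to eight for brevity.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set


@dataclass
class Applicant:
    """Hypothetical applicant record for a skills-first screen."""
    name: str
    # Skill -> source of evidence (work sample, portfolio, structured interview, ...)
    skill_evidence: Dict[str, str] = field(default_factory=dict)
    has_degree: bool = False  # recorded but deliberately never used as a gate


def filter_in(applicants: List[Applicant], minimum_skills: Set[str]) -> List[str]:
    """Advance everyone who shows evidence for every minimum skill."""
    return [a.name for a in applicants if minimum_skills <= set(a.skill_evidence)]


def missing_skills(applicant: Applicant, minimum_skills: Set[str]) -> Set[str]:
    """Skills still to be demonstrated: usable as feedback instead of a silent rejection."""
    return minimum_skills - set(applicant.skill_evidence)


if __name__ == "__main__":
    skills = {"sql", "data modeling", "stakeholder communication"}
    cara = Applicant("Cara", {"sql": "work sample", "data modeling": "portfolio",
                              "stakeholder communication": "structured interview"})
    dev = Applicant("Dev", {"sql": "certification"}, has_degree=True)
    print(filter_in([cara, dev], skills), missing_skills(dev, skills))
```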

Validated Assessments

Use work samples, structured interviews, and job-relevant tests. Document criterion validity.

Continuous Bias Auditing

Run audits pre-deployment, during rollout, and post-launch. Treat it as continuous, not one-and-done.

Transparency and Oversight

Require model cards, rank explanations, data lineage, and recruiter override logs.

Human-AI Hybrids

AI handles scale; humans handle nuance. Together, they can produce balance.

The next phase of AI in talent acquisition values explainability over opacity. Systems that combine automation with ethical design will define the future of recruiting.


Part X: The Buyer’s Playbook

When evaluating tools, ask:

- Validation: Can the vendor prove criterion validity?

- Compliance: Do they align with UGESP, EEOC guidance, NYC Local Law 144, and the EU AI Act?

- Bias Auditing: How frequent, transparent, and actionable are their audits?

- Transparency: Can they explain why Candidate A ranked above Candidate B?

- Candidate Experience: Does the tool reduce alienation or amplify it?
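The “why did A outrank B?” question has an exact answer when the scoring model is linear: the score gap splits into per-feature contributions that sum to the total. The feature names and weights below are hypothetical. Non-linear models need dedicated attribution methods and do not decompose this cleanly, which is exactly why the question is worth asking a vendor.

```python
from typing import Dict, List, Tuple


def score(features: Dict[str, float], weights: Dict[str, float]) -> float:
    """Linear score: sum of weight * feature value (missing features count as 0)."""
    return sum(w * features.get(name, 0.0) for name, w in weights.items())


def explain_pair(
    a: Dict[str, float], b: Dict[str, float], weights: Dict[str, float]
) -> List[Tuple[str, float]]:
    """Per-feature contributions to score(a) - score(b), largest absolute effect first."""
    diffs = [(name, w * (a.get(name, 0.0) - b.get(name, 0.0))) for name, w in weights.items()]
    return sorted(diffs, key=lambda item: abs(item[1]), reverse=True)


if __name__ == "__main__":
    # Hypothetical weights and normalized feature values for two candidates.
    weights = {"work_sample": 0.5, "structured_interview": 0.3, "years_experience": 0.2}
    cand_a = {"work_sample": 0.9, "structured_interview": 0.6, "years_experience": 0.2}
    cand_b = {"work_sample": 0.5, "structured_interview": 0.7, "years_experience": 0.8}
    for feature, contribution in explain_pair(cand_a, cand_b, weights):
        print(f"{feature}: {contribution:+.2f}")
```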

Procurement now means governance. Choose vendors who can demonstrate performance validity, legal alignment, and ethical design. The best AI recruiting tools don’t just automate hiring—they make it accountable.

Part XI: Implementation Roadmap

A structured approach ensures that AI strengthens human judgment rather than replacing it. Here’s how to embed AI recruiting tools responsibly:

- Baseline & Replace Proxies: Remove degree gates. Replace with skills.

- Add Validated Assessments: Calibrate structured interviews and work samples.

- Instrument Bias Measurement: Monitor selection rates and score parity.

- Integrate Explainability: Give recruiters and candidates meaningful feedback.

- Govern as a Product: Retrain models, archive versions, run audits.
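Selection-rate checks look at outcomes; score parity looks at the scores behind them. One common (but not the only) metric is the standardized mean difference between two groups’ scores, often called Cohen’s d. A minimal sketch with invented scores; which groups to compare and what gap triggers review are policy decisions the code cannot make.

```python
import math
from statistics import mean, variance
from typing import Sequence


def standardized_mean_difference(group_a: Sequence[float], group_b: Sequence[float]) -> float:
    """(mean_a - mean_b) / pooled standard deviation, often called Cohen's d."""
    n_a, n_b = len(group_a), len(group_b)
    if n_a < 2 or n_b < 2:
        raise ValueError("each group needs at least two scores")
    # Pool the two sample variances, weighting each by its degrees of freedom.
    pooled_var = ((n_a - 1) * variance(group_a) + (n_b - 1) * variance(group_b)) / (n_a + n_b - 2)
    if pooled_var == 0:
        raise ValueError("scores have no variance")
    return (mean(group_a) - mean(group_b)) / math.sqrt(pooled_var)


if __name__ == "__main__":
    # Hypothetical tool scores for two groups in one audit window.
    print(standardized_mean_difference([70, 80, 90], [60, 70, 80]))
```

Re-running the same check on every model version and archiving the result with that version is one concrete way to govern the tool as a product.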

Part XII: Myths vs. Realities

- Myth: AI removes bias.

- Reality: AI mirrors its data and design.

- Myth: Faster shortlists = better hires.

- Reality: Speed is throughput, not validity.

- Myth: A degree proves capability.

- Reality: Skills evidence predicts better.

The next evolution of AI recruiting tools isn’t about removing people—it’s about empowering them to make better, fairer, faster decisions.


FAQs

Q1: What are AI recruiting tools and how do they work?

They automate parts of the hiring process—sourcing, screening, and ranking candidates using algorithms trained on historical data. The goal is efficiency, but human validation remains essential.

Q2: How do you audit AI recruiting tools for fairness?

Conduct bias audits before deployment and at least annually thereafter. Follow the requirements of NYC Local Law 144, EEOC guidance, and the EU AI Act for transparency and data governance.

Q3: How can companies ensure recruitment AI is unbiased?

Use diverse datasets, monitor selection rates, and document model updates. Continuous audits and recruiter feedback loops reduce bias.

Q4: How does AI improve candidate selection?

AI identifies skills patterns across résumés, reducing manual screening. When designed, validated, and audited responsibly, it can improve precision while keeping AI hiring bias in check.

Q5: How do you integrate AI recruiting tools with an existing ATS?

Choose platforms with open APIs. Enable two-way sync for feedback and candidate data to preserve explainability and compliance.

Conclusion: Beyond the Filter

AI was supposed to eliminate inefficiency and bias. Instead, most AI recruiting tools became smarter filters—fast, scalable, but not necessarily fair.

Filters don’t predict performance. They exclude hidden workers. They replicate bias. They invite compliance risk. They erode trust.

The alternative is clear. Expect more:

- Skills-first requisitions.

- Validated assessments.

- Continuous audits.

- Transparent, auditable models.

- Human oversight.

Recruiting has always been about people. That won’t change. What must change is our tolerance for tools that optimize speed over science. The future of your workforce deserves more than filters. It deserves foresight.
