Introduction: The Paradox of Progress

By 2025, hiring should have become a science.

We have AI recruiters that parse resumes faster than humans can blink, sentiment-aware video platforms that claim to read facial micro-expressions, and entire ecosystems of analytics promising to predict cultural fit.

And yet, ask any talent leader to explain why a recent hire failed, and the answer usually starts with a pause.

We still guess.

Only now, our guesses are dressed up in dashboards.

That gap between what we could know and what we actually know is the intelligence gap in recruitment. It is the chasm between the data-rich systems we have built and the shallow understanding they often produce. It is why time-to-fill keeps creeping upward, why bias audits keep exposing blind spots, and why recruiters describe the process as faster, not smarter.

This disconnect highlights a deeper limitation in modern recruitment intelligence. Organizations collect enormous volumes of hiring data, but rarely convert it into learning.

This is not a story of bad intent or broken technology. It is a systems problem. A mismatch between information, interpretation, and accountability. And it is why the next evolution of recruitment intelligence will not come from more AI, but from designing systems that finally learn.

1. The Long Arc of Hiring “Intelligence”

A century ago, the dream of evidence-based hiring began with optimism. Psychologists in the early 1900s believed they could quantify human potential. The Binet–Simon scales and World War I’s Army Alpha tests ushered in the era of psychometrics.

By the 1950s, companies like AT&T built assessment centers that simulated real managerial work. In-basket exercises and group tasks offered a glimpse of future performance. These were not perfect, but they were tests with a theory.

The 1980s brought structured interviews. Standardized questions, anchored scoring, and behavioral evidence. The science was clear. Structure beat intuition.

Then came the 1990s, the ATS boom, and the keyword economy.

Digitization replaced notebooks with databases but quietly reintroduced noise. The new logic was simple. Find candidates who say the right words.

Fast-forward to 2025, and this lineage is wrapped in machine learning. Yet despite predictive algorithms, bias audits, and psychometric APIs, most organizations still do not measure whether their systems actually work.

Why? Because the inputs are noisy, the outcomes are vague, and the feedback loops are nonexistent. What is missing is not tooling, but effective AI governance that links hiring systems to accountability.

2. What Predicts Performance and What We Ignore

The science of prediction has always known more than HR practice applies.

Decades of meta-analysis, from Schmidt and Hunter’s 1998 synthesis to Sackett et al.’s 2022 recalibrations, tell a consistent story.

Cognitive ability tests combined with work samples or structured interviews are among the strongest predictors of job success.

Years of experience, education pedigree, and gut feel are among the weakest.

Yet organizations cling to weaker signals because they are familiar, fast, and legally safe.

Recent re-analyses lowered many older validity coefficients by 0.1 to 0.2. Still strong, but humbling. Even the best predictors explain less than half of performance variance. In plain terms, hiring will always involve uncertainty, but the methods chosen can either reduce or amplify it.
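The arithmetic behind "less than half" is worth making explicit. A validity coefficient $r$ is a correlation, and the share of performance variance a predictor accounts for is $r^2$. The two values below are illustrative only, not figures taken from either meta-analysis:

```latex
\[
\text{variance explained} = r^2, \qquad
r = 0.5 \;\Rightarrow\; r^2 = 0.25, \qquad
r = 0.3 \;\Rightarrow\; r^2 = 0.09
\]
```

So a predictor with a validity of 0.5 accounts for about a quarter of performance variance, and a downward revision of 0.2 (from 0.5 to 0.3) shrinks that share to under a tenth.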

This gap between evidence and practice remains one of the core failures of modern hiring analytics.

3. Where the Signal Dies: The Early Funnel

If you want to find the intelligence gap, start at the top of the funnel.

In 2024, UK graduate roles averaged 140 applications per opening, a 59 percent year-on-year increase driven by GenAI-generated résumés. Recruiters drown in sameness. The first casualty of overload is discernment.

Much of this breakdown occurs during AI candidate screening, where systems built for scale filter out nuance.

Applicant tracking systems designed to manage volume exclude qualified candidates who do not match rigid criteria or formatting. Harvard Business School’s Hidden Workers study found that millions of employable people, including caregivers, veterans, and neurodivergent professionals, disappear from consideration because their data does not fit.

Even newer parsing tools misread skill context. A candidate who lists “data storytelling” under projects may be ignored for not explicitly typing “data visualization.”
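A minimal sketch of how this happens, assuming a filter that requires an exact phrase. The synonym map and function names are hypothetical and do not describe any particular ATS; the point is only that the match rule, not the candidate, decides who is filtered out.

```python
# Hypothetical illustration: a rigid exact-phrase filter vs. a synonym-aware one.
# The synonym map is an assumption for this sketch, not any vendor's real logic.

REQUIRED_SKILL = "data visualization"

# Hypothetical mapping of phrases accepted as evidence of the same skill.
SKILL_SYNONYMS = {
    "data visualization": {"data visualization", "data storytelling", "dashboard design"},
}


def rigid_match(resume_text: str, skill: str) -> bool:
    """Pass only if the exact required phrase appears in the résumé."""
    return skill in resume_text.lower()


def synonym_match(resume_text: str, skill: str) -> bool:
    """Pass if any phrase mapped to the required skill appears."""
    text = resume_text.lower()
    return any(phrase in text for phrase in SKILL_SYNONYMS.get(skill, {skill}))


resume = "Projects: led data storytelling for quarterly sales reviews."

print(rigid_match(resume, REQUIRED_SKILL))    # False: candidate filtered out
print(synonym_match(resume, REQUIRED_SKILL))  # True: adjacent skill recognized
```

A hand-maintained synonym list is still brittle; it only moves the boundary of what the system can see.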

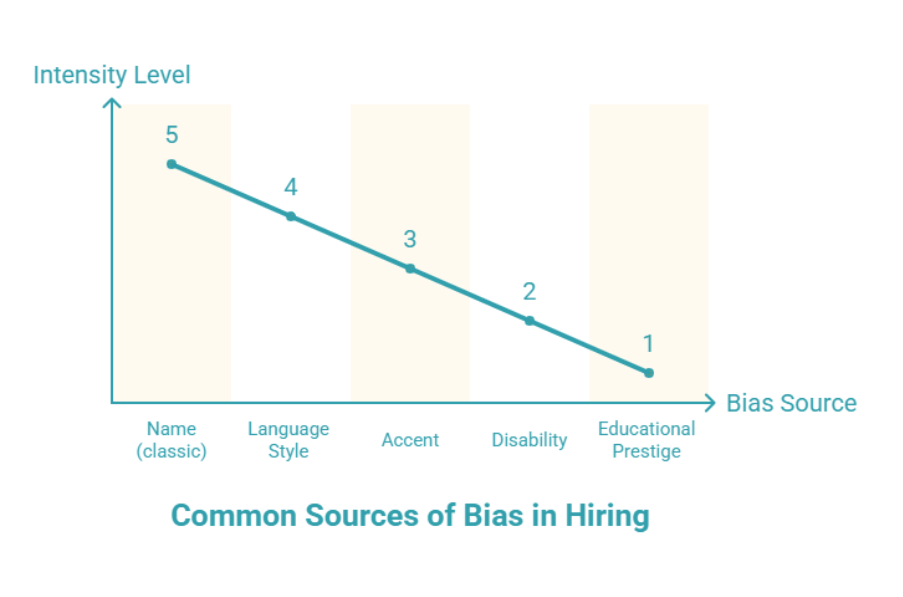

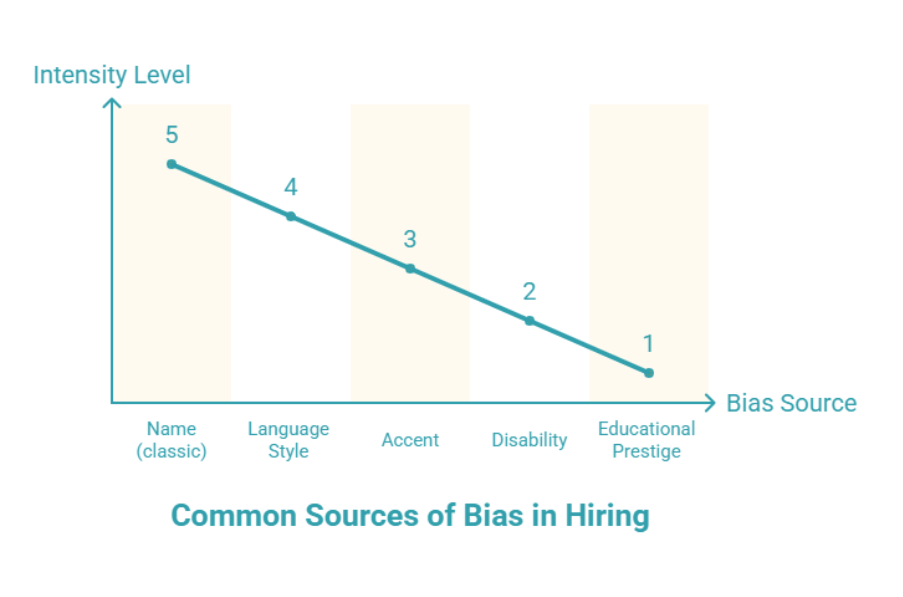

Add to this linguistic bias. A 2025 study titled “Invisible Filters” found that when AI evaluated anonymized interview transcripts, Indian speakers scored lower than UK speakers solely due to sentence structure and lexical diversity.

Intelligence, it seems, still depends on where the model was trained.

4. Bias and Blind Spots in the Machine Age

Bias has outlived every technological upgrade.

The famous 2004 résumé experiment showing Emily and Greg receiving more callbacks than Lakisha and Jamal still echoes in algorithmic form. Today, bias hides in feature weights, not names.

Research from 2025 using counterfactual analysis found score changes of up to 20 percent when race, gender, or accent were swapped in otherwise identical profiles. That is not randomness. It is systemic skew.
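The counterfactual method itself is simple to sketch: score a profile, swap one protected attribute while holding everything else fixed, and score again. The scorer below is a hypothetical toy with a deliberately skewed term so the audit has something to find; a real audit would call the production model in its place.

```python
from typing import Callable, Dict

Profile = Dict[str, object]


def counterfactual_delta(score: Callable[[Profile], float], profile: Profile,
                         attribute: str, alternative: object) -> float:
    """Relative score change when only `attribute` is swapped to `alternative`."""
    original = score(profile)
    flipped = dict(profile)  # copy so the original profile is left untouched
    flipped[attribute] = alternative
    return (score(flipped) - original) / original


# Hypothetical toy scorer with a deliberately skewed accent term,
# standing in for an opaque production model.
def toy_score(p: Profile) -> float:
    base = 10.0 * float(p["years_relevant_skill"]) + 20.0 * float(p["work_sample"])
    return base * (0.85 if p["accent"] == "non-native" else 1.0)


candidate = {"years_relevant_skill": 4, "work_sample": 3.5, "accent": "native"}
delta = counterfactual_delta(toy_score, candidate, "accent", "non-native")
print(f"{delta:.0%}")  # -15%
```

Any nonzero delta on an otherwise identical profile is the systemic skew the study describes; running the swap across many real profiles shows how large and how consistent it is.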

Even fairness metrics can deceive. Disparate impact ratios can look acceptable while subtler forms of bias, such as linguistic or disability bias, remain invisible.
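For reference, the US Uniform Guidelines on Employee Selection Procedures treat a group selection rate below four-fifths (80 percent) of the highest group's rate as general evidence of adverse impact. A minimal sketch of that ratio, using hypothetical group labels and counts:

```python
from typing import Dict, Tuple


def impact_ratios(outcomes: Dict[str, Tuple[int, int]]) -> Dict[str, float]:
    """Each group's selection rate divided by the highest group's rate.

    `outcomes` maps group -> (selected, applicants).
    """
    rates = {g: sel / total for g, (sel, total) in outcomes.items()}
    best = max(rates.values())
    return {g: rate / best for g, rate in rates.items()}


# Hypothetical screening counts, for illustration only.
outcomes = {"group_a": (50, 100), "group_b": (42, 100)}
ratios = impact_ratios(outcomes)
flagged = [g for g, r in ratios.items() if r < 0.8]  # four-fifths rule of thumb
print(ratios, flagged)
```

Here both groups pass, which is exactly the limitation: the ratio only sees the groups you define, so bias along an unlabeled dimension such as accent or disability never enters the calculation.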

Without continuous AI bias audits, hiring bias does not disappear. It compounds.

Regulators have taken notice. The EU AI Act classifies employment AI as high-risk. The US EEOC treats AI as a selection procedure under Title VII. Intelligence without accountability is not intelligence at all.

5. The Human Side of the Gap: Trust and Perception

Even when algorithms work, humans do not always trust them.

A 2025 Gartner survey found only 26 percent of job seekers trust AI to evaluate them fairly. Other studies show AI-enabled interviews reduce application intent in low-tech industries where candidates expect human interaction.

Candidates describe AI hiring as cold, opaque, or one-way.

That perception matters. When people distrust the process, they either game it by flooding the funnel with AI-written applications or avoid it entirely. Both reactions degrade data quality.

The intelligence gap is not just technical. It is emotional. Trust underpins inclusive hiring practices and the accuracy of recruitment intelligence itself.

6. Cognitive Load and Decision Fatigue

Recruiters make hundreds of micro-decisions daily. Shortlist or skip. Advance or reject. Flag or archive. Under sustained load, even trained professionals revert to heuristics.

A 2025 review of decision fatigue research showed accuracy drops significantly when evaluators make repetitive judgments without rest. In hiring, that means more false negatives, fewer unconventional candidates, and greater reliance on default cues.

Automation was meant to relieve this burden, but often shifts it. Recruiters must interpret algorithmic outputs they did not design.

Ignoring these limits weakens overall workforce intelligence by distorting judgment.

7. The Forgotten Variables: Neurodiversity, Disability, and Context

The intelligence gap is not evenly distributed.

Neurodivergent professionals are often filtered out by design. Unstructured video interviews penalize atypical eye contact. Personality models are trained on neurotypical data. Referral systems privilege social fluency over skill.

A 2025 Journal of Autism and Developmental Disorders study showed that when interviewers received neurodiversity training and candidates disclosed diagnoses, ratings became fairer and outcomes improved. Fairness is teachable.

People with disabilities face similar risks. Speech recognition errors, inaccessible interfaces, and lack of disclosure options skew assessments.

Designing for inclusion is not just ethical. It is data hygiene. This is where data-driven recruitment either succeeds or quietly fails.

8. The Mirage of “AI Intelligence”

The hiring industry’s new gospel claims that large language models understand talent.

But general-purpose models are trained on internet text, not hiring outcomes. A 2025 benchmarking study comparing general models with a domain-specific matching model found the specialized system outperformed on both accuracy and fairness.

Context beats compute.

This exposes the limits of current AI decision intelligence: predictive power means little when it is disconnected from hiring outcomes and cannot be interpreted.

9. Quantifying the Cost of Guessing

The cost of a mis-hire is often repeated like folklore, but the real loss is broader.

Every false negative extends time-to-fill. Every false positive erodes morale, training budgets, and employer brand trust.

In 2024, median US time-to-fill hovered between 41 and 44 days. That delay is not inefficiency. It is uncertainty.

The financial and operational impact of weak recruitment analytics surfaces long before performance reviews.

10. Drift: The Quiet Decay of Intelligence

Even well-designed systems degrade.

Language evolves. Labor markets shift. Models trained on last year’s data misread this year’s candidates. Accuracy may remain stable while subgroup fairness erodes.

Researchers call this bias drift.

Without continuous monitoring of impact ratios, error rates, and subgroup outcomes, every intelligent system eventually becomes dumb. This is why AI governance must be ongoing.
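Continuous monitoring can be sketched as recomputing a fairness metric per time window and alerting when it crosses a threshold. This sketch tracks only the minimum impact ratio; the group labels, monthly counts, and the 0.8 threshold (borrowed from the four-fifths rule of thumb) are hypothetical, and subgroup error rates could be tracked in the same loop.

```python
from typing import Dict, List, Tuple

# One window of screening counts: group -> (selected, applicants).
Window = Dict[str, Tuple[int, int]]


def min_impact_ratio(window: Window) -> float:
    """Lowest group selection rate divided by the highest, within one window."""
    rates = [sel / total for sel, total in window.values()]
    return min(rates) / max(rates)


def drift_alerts(windows: List[Tuple[str, Window]], threshold: float = 0.8) -> List[str]:
    """Labels of windows whose minimum impact ratio falls below `threshold`."""
    return [label for label, w in windows if min_impact_ratio(w) < threshold]


# Hypothetical monthly counts showing a gradual slide for one subgroup.
history = [
    ("2025-01", {"native": (60, 120), "non_native": (55, 115)}),
    ("2025-02", {"native": (62, 120), "non_native": (52, 118)}),
    ("2025-03", {"native": (65, 120), "non_native": (38, 120)}),
]
print(drift_alerts(history))  # ['2025-03']
```

A one-off audit in January would have passed this system; only the repeated check catches the decay.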

11. Blueprint for Closing the Gap

Step one. Define what you are actually predicting.

Step two. Replace low-signal inputs.

Step three. Combine human judgment with mechanical scoring.

Step four. Audit fairness like finance.

Step five. Design for inclusion and trust.

Step six. Calibrate continuously.

This is recruitment intelligence as a learning system, not a reporting layer.
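Step three can be made concrete. One common approach is mechanical combination: humans still produce the structured interview ratings, but every input is standardized across the pool and combined with fixed weights instead of a holistic gut call. The input names and weights below are hypothetical; in practice weights would come from a validation study.

```python
from statistics import mean, pstdev
from typing import Dict, List

# Hypothetical weights; real ones should come from local validation data.
WEIGHTS = {"structured_interview": 0.5, "work_sample": 0.3, "cognitive_test": 0.2}


def zscores(values: List[float]) -> List[float]:
    """Standardize one input across the candidate pool (mean 0, SD 1)."""
    mu, sd = mean(values), pstdev(values)
    return [(v - mu) / sd if sd else 0.0 for v in values]


def composite_scores(candidates: Dict[str, Dict[str, float]]) -> Dict[str, float]:
    """Weighted sum of standardized inputs, applied identically to every candidate."""
    names = list(candidates)
    totals = {n: 0.0 for n in names}
    for inp, w in WEIGHTS.items():
        standardized = zscores([candidates[n][inp] for n in names])
        for n, z in zip(names, standardized):
            totals[n] += w * z
    return totals


pool = {
    "cand_1": {"structured_interview": 4.2, "work_sample": 70, "cognitive_test": 110},
    "cand_2": {"structured_interview": 3.1, "work_sample": 85, "cognitive_test": 102},
    "cand_3": {"structured_interview": 3.8, "work_sample": 60, "cognitive_test": 118},
}
ranking = sorted(composite_scores(pool).items(), key=lambda kv: kv[1], reverse=True)
print(ranking)
```

Standardizing first keeps a 1 to 5 interview scale from being swamped by a test scored around 100, and fixed weights mean every candidate is judged by the same rule.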

12. Beyond Compliance: What “Intelligent Hiring” Really Means

Hiring intelligence is not about automating recruiters away. It is about elevating the quality of human judgment.

A truly intelligent recruitment system, the mature form of recruitment intelligence, would do four things consistently:

Preserve signal by extracting meaningful predictors of performance rather than collecting more data.

Reduce noise by eliminating irrelevant variance introduced by bias, fatigue, or poor assessment design.

Learn over time by closing the loop between hiring decisions and downstream outcomes such as performance, retention, and progression.

Earn trust by remaining explainable, fair, and transparent to candidates and hiring teams.

This is not a futuristic vision. It is a design problem, and it is solvable with the tools organizations already have if they treat recruitment intelligence as a learning system rather than a compliance exercise.

13. What We Can Learn from the “Hidden Workers”

One of the most powerful intelligence upgrades may come not from new technology, but from revisiting who is systematically excluded.

The Harvard–Accenture research on Hidden Workers showed that when companies loosen rigid degree requirements and ATS heuristics, they do more than expand the talent pool. Performance improves. Retention improves. Loyalty improves.

Many hiring systems are optimized for familiarity, not effectiveness.

The same lesson applies to neurodivergent candidates, people with disabilities, career switchers, and non-native speakers. These groups are often filtered out not because of lack of skill, but because their profiles do not match algorithmic expectations.

Inclusive hiring practices are not only about fairness. They are about calibration. Inclusion improves the accuracy of recruitment intelligence by correcting distorted inputs.

14. Human–AI Collaboration Done Right

Some of the most promising hiring results come from deliberate human–AI collaboration rather than full automation.

In these models, AI creates structure through consistent questions and standardized scoring, while humans provide interpretation and context.

Early pilots show AI-supported interviews reduce inconsistency and sentiment bias, while human reviewers add nuance. Some organizations even allow candidates to explain or contest AI-flagged results.

These processes are slower, but more legitimate.

Fairness and intelligence are not trade-offs. They reinforce each other.

15. The Future We Deserve: Augmented Intelligence

Hiring systems are moving toward mapping skill adjacencies, tracking long-term performance, and adapting to changing labor markets.

But intelligence depends on feedback.

Without feedback loops that measure outcomes, audit bias, and recalibrate for drift, even advanced tools become static automation.

The organizations that succeed will not have the most sophisticated tools. They will treat hiring as a living experiment. Transparent, validated, and continually learning.

That is the future of workforce intelligence worth building.

Conclusion: From Guessing to Knowing

The real intelligence gap in recruitment is not a lack of technology. It is the absence of truth loops.

When organizations treat recruitment intelligence as something that learns by measuring outcomes, auditing fairness, and adapting to context, hiring stops being a guessing game.

That is when intelligence becomes real.

FAQs

What does recruitment intelligence mean?

Recruitment intelligence is the use of data, evidence, and feedback loops to make hiring decisions that predict performance and remain fair.

Why does recruitment intelligence still fail in 2025?

Because most systems track activity rather than outcomes and lack continuous bias and accuracy monitoring.

Why do AI-driven hiring tools still produce bias?

They rely on historical data and often lack regular AI bias audits and contextual validation.

How can organizations close the intelligence gap in recruitment?

By using structured assessments, measuring outcomes, auditing fairness, and combining human judgment with data.

What role does human–AI collaboration play in recruitment intelligence?

It improves accuracy by pairing consistent data processing with contextual, ethical human oversight.
